Large language models have unlocked immense possibilities in natural language generation. However, in real-world use, especially in business, it is not enough for an LLM to produce coherent text: it must produce consistently formatted, machine-readable text. vLLM's structured decoding addresses this need by constraining generation to an explicit schema, which brings consistency, ease of integration, and operational efficiency. This blog discusses the essential concepts, architectural innovations, and future directions of structured decoding in vLLM.

Understanding the Fundamentals of Structured Decoding

What is Structured Decoding?

Structured decoding is the mechanism by which an LLM's output is constrained to follow a pre-specified schema or structure. Instead of generating free-form text, the model is steered towards valid, predictable output, for instance a well-formed XML or JSON document. This is achieved by incorporating the schema rules directly into the generation process.

Using logit biasing and stateful parsing, the decoder adjusts generation probabilities so that every generated token keeps the output within a valid structure. The result is a model that understands natural language and also "knows" the structure it must follow, making it well suited to downstream tasks that require strict data structures.
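To make logit masking concrete, here is a minimal Python sketch, not vLLM's code. It assumes a toy five-token vocabulary and a hand-picked set of allowed token IDs; in a real engine the allowed set comes from the current grammar state. Setting disallowed logits to negative infinity before the softmax gives those tokens exactly zero probability, the hard form of logit biasing that structured decoding backends typically apply.

```python
import math


def mask_logits(logits, allowed_ids):
    """Return a copy of logits where every token not in allowed_ids is -inf."""
    return [x if i in allowed_ids else float("-inf") for i, x in enumerate(logits)]


def softmax(logits):
    # Subtract the max for numerical stability; exp(-inf) evaluates to 0.0.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Toy vocabulary: the model's raw preference is the free-text token "Sure".
vocab = ["Sure", "{", "}", '"', "hello"]
logits = [4.0, 1.5, 0.2, 0.1, 3.0]

# Hypothetical grammar state: at the start of a JSON object only "{" is valid.
allowed = {1}
probs = softmax(mask_logits(logits, allowed))
next_token = vocab[max(range(len(probs)), key=probs.__getitem__)]
print(next_token, probs)
```

Without the mask, greedy decoding would emit "Sure"; with it, the only token that can be chosen is the one that keeps the output valid.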

Evolution from Traditional Methods

Historically, LLM outputs were produced with decoding strategies such as greedy or beam search, which emphasise natural language fluency. These approaches generate fluent text but do not enforce strict output formats. Early systems relied on post-processing to correct formatting errors, which was time-consuming and error-prone.

Over time, constraints were integrated directly into the decoding process. The transition from post-processing to finite-state machine (FSM) constrained decoding and schema compilation has provided a more powerful mechanism for guiding LLM output.
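As a minimal sketch of FSM-constrained decoding (an illustration, not vLLM's implementation), the Python below compiles a tiny language, the JSON literals `true` and `false`, into a character-level DFA and then decodes greedily over a toy vocabulary of multi-character tokens. The vocabulary and scores are invented; at each step, any token the DFA cannot consume from its current state is excluded.

```python
def build_trie_fsm(words):
    """Build a DFA (shaped as a trie) that accepts exactly the given words.

    States are integers with 0 as the start state; transitions map
    (state, character) -> next state.
    """
    transitions, accepting, next_state = {}, set(), 1
    for word in words:
        state = 0
        for ch in word:
            if (state, ch) not in transitions:
                transitions[(state, ch)] = next_state
                next_state += 1
            state = transitions[(state, ch)]
        accepting.add(state)
    return transitions, accepting


def advance(transitions, state, token):
    """Feed a multi-character token through the DFA; return the new state or None."""
    for ch in token:
        state = transitions.get((state, ch))
        if state is None:
            return None
    return state


def constrained_greedy(scores, transitions, accepting, max_steps=10):
    """Greedy decoding that only considers tokens the DFA can consume."""
    state, out = 0, []
    for _ in range(max_steps):
        if state in accepting:  # stop once a complete literal has been produced
            break
        allowed = [t for t in scores if advance(transitions, state, t) is not None]
        if not allowed:
            raise ValueError("no valid continuation from state %d" % state)
        token = max(allowed, key=scores.get)
        out.append(token)
        state = advance(transitions, state, token)
    return "".join(out)


# Toy "model" scores: unconstrained, the model would pick "yes".
scores = {"yes": 5.0, "no": 4.5, "tr": 2.0, "fa": 1.0, "ue": 0.5, "lse": 0.4, "t": 0.3, "f": 0.2}
transitions, accepting = build_trie_fsm(["true", "false"])
result = constrained_greedy(scores, transitions, accepting)
print(result)
```

Even though the model's favourite token is "yes", the constrained decoder can only produce a string the FSM accepts.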

Benefits for AI Application Developers

For developers, structured decoding in vLLM offers several key benefits:

- Reliability: Outputs conform to the specified schema, reducing errors in automated processes.
- Ease of Integration: Cleanly and consistently formatted outputs are readily consumed by APIs, databases, and other systems.
- Performance Efficiency: Enforcing structure carries a marginal computational cost, but the savings from avoiding extensive post-processing usually outweigh it.
- Less Debugging: Fewer structural bugs let teams spend more time on core business logic instead of battling unexpected output problems.

Together, these benefits lead to faster development cycles and more robust production deployments, as reported in community posts on LinkedIn and other corporate sites.

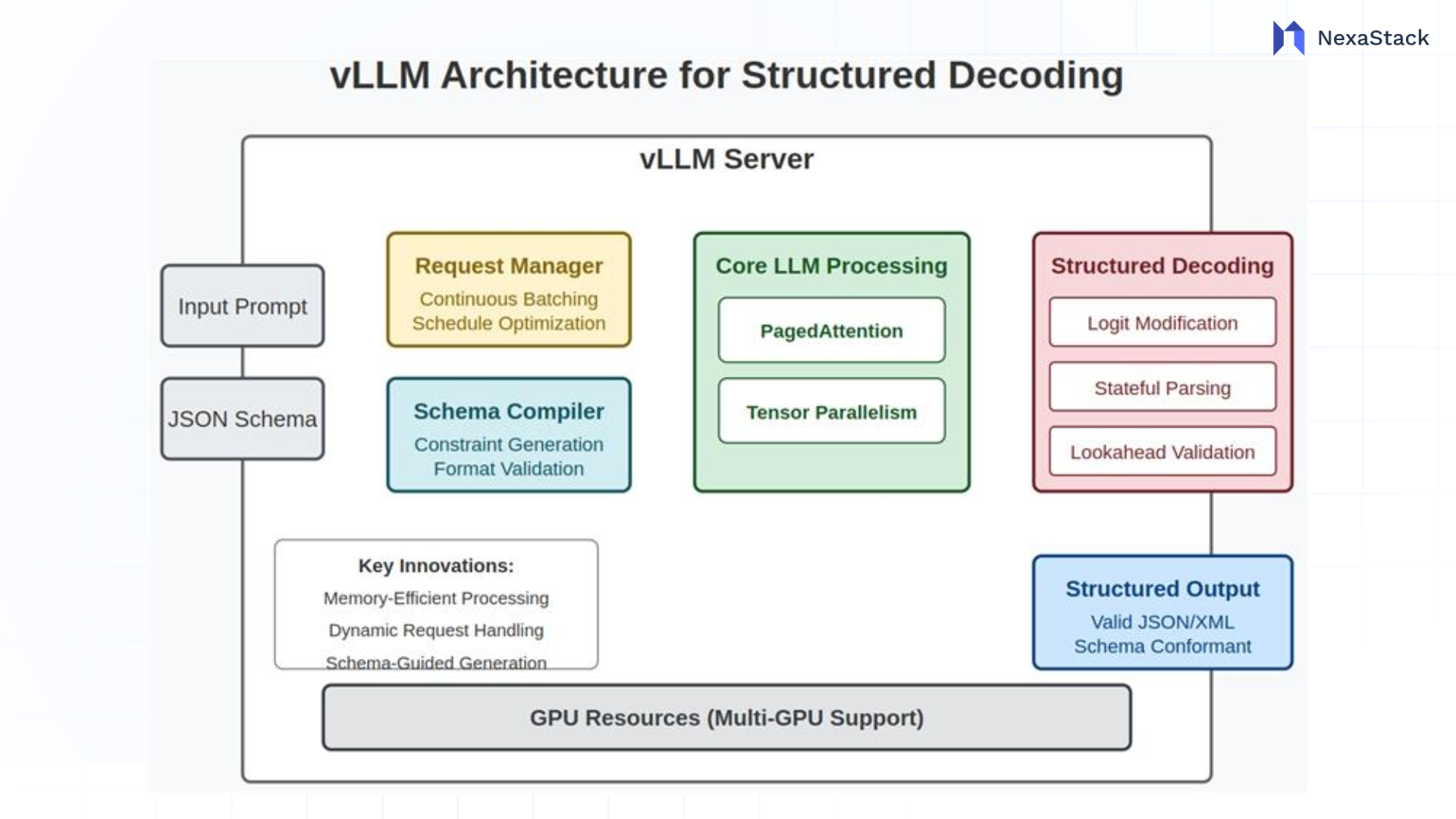

vLLM Architecture and Its Approach to Structured Outputs

vLLM has been built from the ground up to maximise throughput and memory efficiency. Several architectural innovations support its structured decoding capabilities:

- PagedAttention: Inspired by virtual memory and paging in operating systems, this method manages the key-value cache in fixed-size blocks, minimising memory fragmentation and handling long sequences better. It provides a foundation for maintaining high performance when structured outputs are requested.
- Continuous Batching and Tensor Parallelism: vLLM batches requests dynamically and can parallelise computation across multiple GPUs. This helps keep overall throughput high even when structural constraints are added.
- Integrated Decoding Layer: Rather than bolting structured output onto generation as a separate layer, as some frameworks do, vLLM integrates it into the core decoding process. Schema constraints therefore apply from the first generated token, leading to more reliable outputs.
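The block-table idea behind PagedAttention can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not vLLM's code: each sequence's KV cache is split into fixed-size logical blocks that map to non-contiguous physical blocks, so memory is allocated only as tokens arrive and freed blocks return to a shared pool. The `BlockAllocator` class and its methods are hypothetical.

```python
class BlockAllocator:
    """Toy allocator mapping each sequence's logical KV blocks to physical blocks."""

    def __init__(self, num_blocks, block_size):
        self.block_size = block_size
        self.free = list(range(num_blocks))  # physical block IDs not in use
        self.tables = {}  # seq_id -> list of physical block IDs (the "block table")
        self.lengths = {}  # seq_id -> number of tokens stored so far

    def append_token(self, seq_id):
        table = self.tables.setdefault(seq_id, [])
        n = self.lengths.get(seq_id, 0)
        # A new physical block is needed only when the last one is full.
        if n % self.block_size == 0:
            if not self.free:
                raise MemoryError("out of KV cache blocks")
            table.append(self.free.pop())
        self.lengths[seq_id] = n + 1

    def slot(self, seq_id, position):
        """Translate a logical token position into (physical block, offset)."""
        block = self.tables[seq_id][position // self.block_size]
        return block, position % self.block_size

    def release(self, seq_id):
        """Return a finished sequence's blocks to the shared pool."""
        self.free.extend(self.tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)


alloc = BlockAllocator(num_blocks=8, block_size=4)
for _ in range(6):
    alloc.append_token("a")  # 6 tokens -> 2 blocks
for _ in range(3):
    alloc.append_token("b")  # 3 tokens -> 1 block
print(alloc.tables)          # non-contiguous physical blocks per sequence
print(alloc.slot("a", 5))    # token 5 lives in a's second block, offset 1
```

Because waste is limited to the unfilled tail of each sequence's last block, more sequences fit in the same memory, which is what keeps batches large.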

As detailed in the vLLM V1 release notes, these design choices allow companies to achieve both speed and accuracy in their AI systems.

Technical Foundation Enabling Structured Decoding

At the technical level, structured decoding in vLLM is supported by several interconnected mechanisms:

- Logit Modification: By modifying token probabilities during generation, vLLM suppresses tokens that would violate the schema, typically by masking them out entirely.
- Stateful Parsing: The decoder tracks its position in the output structure as tokens are generated, ensuring that every generated component fits the desired layout.
- Schema Compilation: Schemas are precompiled into optimised forms that can be consumed at token generation time with minimal overhead.
- Lookahead Validation: Where a token could lead to an invalid structure later on, the system uses lookahead to make sure the output can still be completed validly.

These foundations allow vLLM to generate structured output with little interference in the model's creativity, a balance that suits both research and production environments.
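A minimal sketch of schema compilation, assuming the schema has already been lowered to a DFA (here a hand-written one accepting the literals `true` and `false`) and using an invented seven-token vocabulary. The expensive step, walking every token through the automaton from every state, happens once ahead of time; at generation time only a precomputed bitmask is consulted. Packing masks into 32-bit words is similar in spirit to what grammar backends such as XGrammar do, but this is not their code.

```python
# Hand-written DFA for the JSON literals "true" and "false" (states 0-9).
TRANSITIONS = {
    (0, "t"): 1, (1, "r"): 2, (2, "u"): 3, (3, "e"): 4,
    (0, "f"): 5, (5, "a"): 6, (6, "l"): 7, (7, "s"): 8, (8, "e"): 9,
}
NUM_STATES = 10
VOCAB = ["tr", "ue", "fa", "lse", "yes", "t", "e"]  # toy token vocabulary


def compile_masks(transitions, num_states, vocab):
    """Ahead of time: for each state, pack the valid token IDs into 32-bit words.

    Bit (i % 32) of word (i // 32) is set when token i can be consumed
    from that state without leaving the automaton.
    """
    num_words = (len(vocab) + 31) // 32
    masks = []
    for state in range(num_states):
        words = [0] * num_words
        for token_id, token in enumerate(vocab):
            s = state
            for ch in token:
                s = transitions.get((s, ch))
                if s is None:
                    break
            if s is not None:
                words[token_id // 32] |= 1 << (token_id % 32)
        masks.append(words)
    return masks


def is_allowed(mask, token_id):
    """At generation time, checking a token is a constant-time bit lookup."""
    return ((mask[token_id // 32] >> (token_id % 32)) & 1) == 1


MASKS = compile_masks(TRANSITIONS, NUM_STATES, VOCAB)
print(MASKS[0])
```

Because the mask table depends only on the schema and the vocabulary, a compiled result can be cached and reused by every request that shares the same schema.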

Implementing Structured Decoding in vLLM: Practical Considerations

Implementing structured decoding starts with configuring vLLM to enforce your desired output schema. This involves defining a schema that describes the required output form and passing it to vLLM along with the sampling parameters. The process is streamlined enough that developers can move quickly from idea to working code.
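As a hedged illustration of passing a schema with a request, the sketch below builds an OpenAI-style chat-completions payload that carries a JSON Schema in `response_format`. It assumes a vLLM server running its OpenAI-compatible API, which accepts this field in recent versions; supported schema features vary between releases, so check the documentation for your version. The schema, prompts, and the model name `my-model` are hypothetical. The code only builds and serialises the request; sending it to the server's `/v1/chat/completions` endpoint is left out. With vLLM's offline `LLM` class, the schema is instead attached through `SamplingParams`, and the name of that field has changed across releases.

```python
import json

# JSON Schema describing the object we want back (hypothetical example).
invoice_schema = {
    "type": "object",
    "properties": {
        "invoice_id": {"type": "string"},
        "amount": {"type": "number"},
        "paid": {"type": "boolean"},
    },
    "required": ["invoice_id", "amount", "paid"],
    "additionalProperties": False,
}

# OpenAI-style chat request; "my-model" stands in for the served model's name.
payload = {
    "model": "my-model",
    "messages": [
        # A clear instruction still helps, even though decoding is constrained.
        {"role": "system", "content": "Reply only with a JSON invoice record."},
        {"role": "user", "content": "Invoice INV-42 for 99.50, already paid."},
    ],
    "response_format": {
        "type": "json_schema",
        "json_schema": {"name": "invoice", "schema": invoice_schema},
    },
}

body = json.dumps(payload)  # request body ready to POST to the server
print(body[:80])
```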

Practitioner reports suggest that careful schema design and clear prompt instructions are key to success. For instance, a well-defined schema helps reduce errors and keeps outputs valid.

Key Implementation Insights

- Schema Compilation and Efficiency: vLLM compiles the schema into an internal representation that supports fast validation as tokens are generated. Pre-compilation reduces run-time overhead and lets the constraints be applied efficiently.
- Dynamic Logit Biasing: The schema dynamically shapes the model's probability distribution at generation time. In other words, tokens that keep the structure valid are favoured, and tokens that would invalidate the schema are suppressed.
- State Management: Decoding is stateful, tracking at every step where it is in the structured output. This ensures that each part of the output (e.g., an array or nested object) is built correctly.
- Redundancy and Fallback: When one structured decoding approach cannot handle a schema or would slow the process down, vLLM can fall back to another approach to keep the overall system efficient.
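State management for nested output can be illustrated with a stack. The hypothetical helper below scans a partial JSON prefix, skips characters inside string literals, and reports which closing bracket would currently be valid. It is a simplified sketch that ignores the rest of JSON's grammar and is not how vLLM's backends are implemented, but it shows the kind of state a decoder must carry to build arrays and nested objects correctly.

```python
def expected_closer(prefix):
    """Return the closing bracket that would be valid next in a JSON prefix.

    A stack records which container is open at each nesting level; characters
    inside string literals are skipped so brackets in text are not counted.
    Returns None inside a string or when no container is open.
    """
    stack = []
    in_string = escaped = False
    for ch in prefix:
        if in_string:
            if escaped:
                escaped = False
            elif ch == "\\":
                escaped = True
            elif ch == '"':
                in_string = False
        elif ch == '"':
            in_string = True
        elif ch in "{[":
            stack.append("}" if ch == "{" else "]")
        elif ch in "}]":
            if not stack or stack.pop() != ch:
                raise ValueError(f"mismatched {ch!r}")
    if in_string or not stack:
        return None
    return stack[-1]


print(expected_closer('{"items": [1, 2'))
```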

For successful deployment, practitioners suggest the following best practices:

- Clear Prompting: Give the model clear directions about the desired structure to improve output quality.
- Iterative Refinement: Start with simple schemas and add complexity incrementally, checking performance and correctness at each step.
- Monitoring and Logging: Set up robust monitoring to detect any divergence from the expected output format and enable quick troubleshooting.
- Resource Optimisation: Use vLLM's performance features (e.g., caching and persistent batching) to reduce computational overhead.

These practices are echoed in both practitioner and academic literature, which supports their use in effective, reliable deployments.
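For the monitoring and logging practice, a lightweight check on every response is often enough to spot drift. The sketch below, built around a hypothetical `FormatMonitor` class and example keys, uses only the standard library's `json` and `logging` modules to count parse failures and missing required keys and to log each divergence. Even with constrained decoding enabled, checks like this catch truncated outputs (for example when a token limit is hit) and configuration mistakes.

```python
import json
import logging

logging.basicConfig(level=logging.WARNING)
logger = logging.getLogger("structured_output_monitor")


class FormatMonitor:
    """Counts responses that fail to parse as JSON or lack required keys."""

    def __init__(self, required_keys):
        self.required_keys = set(required_keys)
        self.total = 0
        self.failures = 0

    def check(self, raw):
        """Return True if `raw` is a JSON object with all required keys."""
        self.total += 1
        try:
            obj = json.loads(raw)
        except json.JSONDecodeError as exc:
            self.failures += 1
            logger.warning("invalid JSON: %s", exc)
            return False
        if not isinstance(obj, dict):
            self.failures += 1
            logger.warning("expected a JSON object, got %s", type(obj).__name__)
            return False
        missing = self.required_keys - set(obj)
        if missing:
            self.failures += 1
            logger.warning("missing keys: %s", sorted(missing))
            return False
        return True

    @property
    def failure_rate(self):
        return self.failures / self.total if self.total else 0.0


monitor = FormatMonitor(["invoice_id", "amount"])
outputs = [
    '{"invoice_id": "INV-1", "amount": 10}',
    '{"invoice_id": "INV-2"}',              # missing a required key
    '{"invoice_id": "INV-3", "amount": 1',  # truncated, e.g. by a token limit
]
results = [monitor.check(o) for o in outputs]
print(f"failure rate: {monitor.failure_rate:.2f}")
```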

Performance Analysis: Speed and Resource Optimisation

Experiments comparing structured and unstructured decoding show that although structured decoding adds a small computational overhead, the payoff is substantial because errors and post-processing are reduced. Benchmarks show improvements in valid-output rates and schema conformance, both of which are critical in enterprise environments.

Memory Usage Enhancements

Efficient memory management is the foundation of vLLM's design. Techniques such as zero-overhead prefix caching and improved key-value cache management (as in the PagedAttention mechanism) minimise memory fragmentation and redundant duplication. This enables larger batch sizes and lower operating costs, which are crucial for large-scale deployment.
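Prefix caching can be sketched as content-addressed KV blocks. The hypothetical Python below is a simplification, not vLLM's implementation: it hashes each full block of token IDs together with the hash of everything before it, so a request that shares a prompt prefix (such as a common system prompt) with an earlier request can reuse those blocks instead of storing them again. The token IDs and block size are invented.

```python
import hashlib

BLOCK_SIZE = 4  # tokens per KV cache block (toy value)


def block_hashes(token_ids, block_size=BLOCK_SIZE):
    """Hash each full block together with the hash of everything before it.

    Chaining the parent hash means two blocks match only if their entire
    prefixes match, not just their own tokens. Partial blocks are not cached.
    """
    hashes, parent = [], b""
    full = len(token_ids) - len(token_ids) % block_size
    for start in range(0, full, block_size):
        block = token_ids[start:start + block_size]
        h = hashlib.sha256(parent + str(block).encode()).hexdigest()
        hashes.append(h)
        parent = h.encode()
    return hashes


cache = {}  # block hash -> physical block ID (stands in for stored KV tensors)


def allocate(token_ids):
    """Reuse cached blocks for a shared prefix; return how many blocks were new."""
    new_blocks = 0
    for h in block_hashes(token_ids):
        if h not in cache:
            cache[h] = len(cache)
            new_blocks += 1
    return new_blocks


system_prompt = [101, 7, 8, 9, 10, 11, 12, 13]  # 8 tokens = 2 full blocks
first = allocate(system_prompt + [20, 21, 22, 23])
second = allocate(system_prompt + [30, 31, 32, 33])
print(first, second)
```

The second request only needs one new block, because the two blocks holding the shared system prompt are found in the cache.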

Latency Minimisation for Production Workloads

End-to-end latency improves through fast token generation and by eliminating the need for heavy post-processing. Structured outputs reduce the cost of parsing and error handling in downstream systems, resulting in lower total response times. The result is a system that remains efficient in real-time production settings despite the added complexity of enforcing structure.

Enterprise Applications and Use Cases

Structured decoding in vLLM is especially useful in commercial environments, where correct data structures are essential for smooth integration and automation.

JSON and Structured Data Generation

From healthcare to finance, businesses rely on accurate, machine-readable data. Structured decoding makes this possible in applications such as:

- API Response Generation: Producing reliable JSON responses that meet strict data contracts.
- Database Record Creation: Generating structured records ready for direct insertion into a database.
- Automated Reporting: Generating standardised reports that analytics systems can process automatically.
- Configuration Management: Generating well-formed configuration files that support system reliability and maintainability.

Integration with Downstream Systems and APIs

Structured outputs strongly influence how easily AI capabilities can be integrated into a system. When model output feeds business process automation software, microservices, or ETL pipelines, structured outputs reduce downstream parsing and cleaning time, speeding up system workflows. In many enterprise use cases, structured decoding has been reported to improve data quality and processing time across large, complex ecosystems.
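The downstream benefit is easiest to see in code. In the sketch below, a hypothetical invoice record, assumed to come from a schema-constrained response, is parsed with the standard `json` module and inserted into an in-memory SQLite table via the standard `sqlite3` module. Because the field names and types are fixed by the schema, the output maps straight onto the table without a cleanup step.

```python
import json
import sqlite3

# A model response assumed to have been generated under a JSON schema (example data).
raw_output = '{"invoice_id": "INV-42", "amount": 99.5, "paid": true}'

record = json.loads(raw_output)  # no regex cleanup or repair step needed

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE invoices (invoice_id TEXT PRIMARY KEY, amount REAL, paid INTEGER)")
# Named placeholders map directly onto the schema's field names.
conn.execute("INSERT INTO invoices VALUES (:invoice_id, :amount, :paid)", record)
conn.commit()

row = conn.execute("SELECT invoice_id, amount, paid FROM invoices").fetchone()
print(row)
```

Production code would still validate values such as ranges and business rules, since a schema guarantees structure rather than correctness.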

Integration with XGrammar

XGrammar provides a new approach to batch constrained decoding using a pushdown automaton (PDA). You can think of a PDA as "a bag of FSMs, and each FSM is a context-free grammar (CFG)". One of the strengths of a PDA is its recursive nature, which allows it to execute multiple state transitions. The XGrammar authors also describe an additional optimisation (for the curious) that reduces grammar compilation overhead.

This addresses earlier limitations by moving grammar compilation out of Python and into native C++ code, using pthreads.
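To illustrate the "bag of FSMs" picture, the sketch below writes a tiny recursive grammar (a value is `0` or a bracketed, comma-separated list of values) as one FSM per rule, where an edge labelled with a rule name is a call. Running it as a pushdown automaton means pushing a return frame on each call and popping it when the callee reaches its end state, which is what lets it handle arbitrary nesting that no fixed-size FSM can. This is a hypothetical, character-level illustration of the idea, not XGrammar's implementation, which works on tokens and adds many optimisations.

```python
# Grammar (one FSM per rule): value := "0" | "[" "]" | "[" value ("," value)* "]"
# Each FSM maps state -> list of (label, next_state). A label that names a rule
# is a call into that rule's FSM; any other label is a literal character.
RULES = {
    "value": {
        0: [("0", "end"), ("[", 1)],
        1: [("]", "end"), ("value", 2)],
        2: [(",", 3), ("]", "end")],
        3: [("value", 2)],
    }
}


def expand(config):
    """Follow calls and returns (moves that consume no input).

    A configuration is a tuple of (rule, state) frames; the last frame is the
    top of the stack. The empty tuple means a complete value has been parsed.
    """
    seen, todo = set(), [config]
    while todo:
        cfg = todo.pop()
        if cfg in seen:
            continue
        seen.add(cfg)
        if not cfg:
            continue
        rule, state = cfg[-1]
        if state == "end":
            todo.append(cfg[:-1])  # pop: return to the caller's resume state
            continue
        for label, nxt in RULES[rule][state]:
            if label in RULES:  # push: resume at nxt after the callee finishes
                todo.append(cfg[:-1] + ((rule, nxt), (label, 0)))
    return seen


def step(configs, ch):
    """Consume one input character from every live configuration."""
    moved = set()
    for cfg in configs:
        if not cfg:
            continue
        rule, state = cfg[-1]
        if state == "end":
            continue
        for label, nxt in RULES[rule][state]:
            if label == ch:
                moved |= expand(cfg[:-1] + ((rule, nxt),))
    return moved


def accepts(text, start="value"):
    configs = expand(((start, 0),))
    for ch in text:
        configs = step(configs, ch)
        if not configs:  # dead prefix: a decoder would have masked this character
            return False
    return () in configs


print(accepts("[0,[0,[]]]"), accepts("[0,]"))
```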

Common Challenges and Troubleshooting Techniques

Schema Validation Error Handling

Schema validation errors are probably the most frequent problem. They usually stem from overly restrictive or incorrectly specified schemas. Strategies for dealing with them include:

- Evaluating Failed Outputs: Determining which parts of the output deviate from the schema.
- Schema Refinement: Tuning the schema to balance strict validation against the natural flexibility of language.
- Graduated Strictness: Starting with a less strict schema and tightening constraints as confidence in output quality grows.
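Evaluating failed outputs is easier with a report that names each deviation. The hypothetical `deviations` helper below checks a flat JSON object against a small subset of JSON Schema (`properties` with a scalar `type`, `required`, and `additionalProperties: false`); for full validation, a dedicated JSON Schema library is the better choice. The two schemas also illustrate graduated strictness: the strict one is the loose one with more fields required and extra fields forbidden.

```python
TYPE_CHECKS = {
    "string": lambda v: isinstance(v, str),
    "number": lambda v: isinstance(v, (int, float)) and not isinstance(v, bool),
    "integer": lambda v: isinstance(v, int) and not isinstance(v, bool),
    "boolean": lambda v: isinstance(v, bool),
}


def deviations(obj, schema):
    """List the ways a flat JSON object departs from a simple object schema."""
    problems = []
    props = schema.get("properties", {})
    for key in schema.get("required", []):
        if key not in obj:
            problems.append(f"missing required field '{key}'")
    for key, value in obj.items():
        if key in props:
            expected = props[key]["type"]
            if not TYPE_CHECKS[expected](value):
                problems.append(f"field '{key}' should be {expected}, got {type(value).__name__}")
        elif schema.get("additionalProperties", True) is False:
            problems.append(f"unexpected field '{key}'")
    return problems


loose = {
    "type": "object",
    "properties": {"name": {"type": "string"}, "age": {"type": "integer"}},
    "required": ["name"],
}
# Graduated strictness: tighten the same schema once outputs are reliable.
strict = {**loose, "required": ["name", "age"], "additionalProperties": False}

output = {"name": "Ada", "age": "36", "nickname": "A"}
print(deviations(output, loose))
print(deviations(output, strict))
```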

Handling Complex Nested Structures

For deeply nested or very complex schemas, keeping output consistent can be difficult. Approaches that help include:

- Chunking the Generation Process: Breaking the structure down into manageable pieces.
- Progressive Generation: Building the complex output stage by stage and validating each stage.
- Schema Modularisation: Using modular schemas that can be verified separately before being composed.

These techniques help maintain both output quality and performance, even in complex applications.
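Schema modularisation maps naturally onto JSON Schema's `$defs` and `$ref` keywords. In this sketch, each sub-schema is a separate Python dict that can be checked on its own (here with a hypothetical `check_module` sanity check), and a hypothetical `compose` helper assembles the modules into one top-level schema; `resolve` follows local references and ignores JSON Pointer escaping for brevity. Which `$ref` patterns a given structured decoding backend supports varies, so it is worth testing the composed schema against your vLLM version.

```python
# Independently maintained sub-schemas (hypothetical modules).
ADDRESS = {
    "type": "object",
    "properties": {"street": {"type": "string"}, "city": {"type": "string"}},
    "required": ["street", "city"],
}
LINE_ITEM = {
    "type": "object",
    "properties": {"sku": {"type": "string"}, "qty": {"type": "integer"}},
    "required": ["sku", "qty"],
}


def check_module(schema):
    """Cheap per-module sanity check: every required field is declared."""
    return set(schema.get("required", [])) <= set(schema.get("properties", {}))


def compose(modules, root_properties, required):
    """Assemble a top-level schema that references modules via $defs/$ref."""
    return {
        "type": "object",
        "$defs": modules,
        "properties": root_properties,
        "required": required,
    }


def resolve(schema, ref):
    """Follow a local JSON Pointer such as '#/$defs/Address' (no escaping)."""
    node = schema
    for part in ref.removeprefix("#/").split("/"):
        node = node[part]
    return node


order_schema = compose(
    {"Address": ADDRESS, "LineItem": LINE_ITEM},
    {
        "shipping": {"$ref": "#/$defs/Address"},
        "items": {"type": "array", "items": {"$ref": "#/$defs/LineItem"}},
    },
    ["shipping", "items"],
)
print(all(check_module(m) for m in (ADDRESS, LINE_ITEM)))
```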

Optimising for Different Model Architectures

Different models support structured output generation to different degrees. Developers may need to:

- Adjust Sampling Parameters: Lower the temperature and tune other sampling parameters to favour consistency over variety.
- Modify Prompt Instructions: Adapt prompts to the capabilities of specific model architectures.
- Monitor and Compare Performance: Use A/B testing and benchmarking to find out how different models perform with structured decoding.

Community forums and scholarly articles emphasise that tuning these parameters is essential for getting the best performance across different models.
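The effect of lowering temperature can be checked numerically. This minimal Python sketch applies the standard temperature-scaled softmax, $p_i = \exp(z_i/T) / \sum_j \exp(z_j/T)$, to three toy logits: dividing by a temperature below 1 widens the gaps between logits, so probability concentrates on the top token and outputs become more consistent. Within structured decoding, temperature only changes the choice among tokens the grammar already allows; masked tokens stay at zero probability.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Divide logits by temperature before the softmax; T < 1 sharpens it."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # toy scores for three candidate tokens
for t in (1.0, 0.5, 0.2):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```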

Future Developments and Roadmap for Structured Decoding in vLLM

The future of structured decoding in vLLM looks bright. Potential improvements include:

- Multi-format Support: Extending support beyond JSON to formats such as XML and YAML.
- Adaptive Constraint Relaxation: Relaxing constraints intelligently at run time when strict adherence is not feasible.
- Schema Inference: Letting the system infer a schema automatically from a set of examples, with little need for an explicit definition.
- Streaming Structured Generation: Supporting real-time streaming of structured output for low-latency use cases.
- Custom Constraint Languages: Offering more expressive ways to specify and enforce output constraints beyond typical schema forms.

As industry standards evolve, vLLM's structured decoding could also integrate:

- TypeScript and OpenAPI: Allowing developers to use OpenAPI schemas and TypeScript type definitions natively.
- Function Calling Standards: Adhering to emerging function-calling standards in LLM responses.
- Semantic Validation: Beyond structural compliance, future versions aim to add semantic checks on the correctness and suitability of the output.

Structured decoding in vLLM is a critical milestone towards unlocking the full potential of large language models in real-world applications. By embedding schema constraints in decoding, vLLM not only produces sound, machine-readable output but also improves system-wide efficiency and integration. Structured decoding enables developers to build high-performance, scalable AI systems that meet the high-stakes needs of today's businesses, whether creating API responses, automating reports, or performing advanced data extraction.

As the technology improves through community work and ongoing development, the future of high-performance, structured AI serving looks bright. Adopting structured decoding is a bet on an architecture that combines creativity with accuracy, paving the way for next-generation AI applications.
