Technology is changing faster than ever, and DeepSeek is leading the way with its open-source large language models (LLMs). Developed by the Chinese AI company of the same name, DeepSeek is known for advanced natural language processing (NLP) capabilities in conversational AI, text analysis, and code generation.

As of 11 March 2025, DeepSeek is best known for its reasoning performance. Its reasoning model, DeepSeek-R1, competes with OpenAI's o1 on complex, multi-step reasoning tasks while being considerably more cost-efficient.

Gaps remain in how organisations adopt leading AI technology, and the organisations most concerned with data security are the most exposed. They face serious risks such as data leaks, breaches of user privacy, and non-compliance with stringent industry regulations. This is where NexaStack comes in. NexaStack helps companies deploy DeepSeek in private clouds and on locally controlled on-premises servers, simplifying the process while providing strong security, compliance, efficiency, and full sovereignty over their data.

This blog explains how to deploy DeepSeek securely and privately, how NexaStack supports DeepSeek deployments, and the security concerns associated with AI deployment.

Understanding DeepSeek AI Models

Figure 1: Understanding DeepSeek AI Model

What is DeepSeek and Why Does It Matter

DeepSeek became popular because of its open-source, enterprise-deployable licensing model. Unlike the flagship LLMs from OpenAI and Google, DeepSeek-R1 is released under the MIT License, which permits commercial use with minimal restrictions. This makes DeepSeek especially suitable for organisations that want to adopt AI while keeping complete control of their infrastructure and data.

Key Features of DeepSeek:

- Superior Performance: DeepSeek-R1 has demonstrated performance competitive with or superior to OpenAI's o1 on benchmarks such as MATH-500 (mathematical problem-solving) and LiveCodeBench (coding tasks).
- Cost-Effective Training: DeepSeek was reportedly trained for a fraction of the cost of OpenAI's models, at around $6 million (the figure DeepSeek reported for the final training run of its DeepSeek-V3 base model). OpenAI has received significant funding, but the exact training costs of its models remain undisclosed.
- Optimised Reasoning and Coding: DeepSeek produces strong results in mathematical and logical reasoning tasks, making it a powerful tool for research, automation, and enterprise applications.

Because DeepSeek's models are openly available, businesses can build conversational AI, automated decision-making, and advanced analytics without paying for commercial LLMs.

The Need for Secure AI Model Deployment

Deploying an LLM is not simply a matter of standing it up and walking away; an extensive security perimeter is needed to counter dangers such as:

- Regulatory Non-Compliance: Businesses in finance, healthcare, and government are subject to strict regulations and standards such as GDPR, HIPAA, and ISO 27001.

Conventional cloud-hosted AI services introduce vulnerabilities because data is processed and stored on someone else's servers. Sensitive workloads therefore call for a secure, on-premises alternative such as NexaStack.

NexaStack: The Solution for Private DeepSeek Implementation

Security, compliance, and operational control all need to be considered as businesses adopt more AI solutions, especially large language models such as DeepSeek. Many public cloud AI services give organisations little control over sensitive data, privacy, and regulatory obligations, and they can also lead to vendor lock-in. This creates demand for secure platforms that organisations can deploy on infrastructure they control.

NexaStack addresses these issues with an on-premises, private cloud delivery model for DeepSeek that safeguards data residency and sovereignty, integrates with enterprise systems, and supports compliance with GDPR, HIPAA, and ISO 27001.

Key Benefits of NexaStack for DeepSeek Deployment

Core Components of NexaStack

Together, these components are intended to ensure that DeepSeek can be fully deployed without sacrificing security, performance, or regulatory compliance.

Why Enterprises Choose NexaStack

- Flexible Deployment Options: Provides on-premises, private cloud, and air-gapped deployments for maximum security.
- Optimised AI Performance: Auto-scaling, load balancing, and parallel processing deliver maximum DeepSeek performance.
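Auto-scaling decisions are commonly driven by a utilisation target. The sketch below uses the replica formula documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current × observed / target), including its default 10% tolerance. It is an assumption that NexaStack scales DeepSeek replicas this way; the function name and the min/max bounds are illustrative.

```python
import math


def desired_replicas(current_replicas: int,
                     observed_utilisation: float,
                     target_utilisation: float,
                     min_replicas: int = 1,
                     max_replicas: int = 8,
                     tolerance: float = 0.1) -> int:
    """Return a replica count for a DeepSeek inference deployment.

    Hypothetical helper modelled on the Kubernetes HPA formula:
    desired = ceil(current * observed / target).
    """
    if current_replicas < 1 or target_utilisation <= 0:
        raise ValueError("need at least one replica and a positive target")
    ratio = observed_utilisation / target_utilisation
    # Skip scaling when the metric is already close to the target,
    # which avoids flapping between replica counts.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))
```

For example, two replicas running at 90% utilisation against a 60% target scale to three, while a reading of 62% leaves the count unchanged because it falls within the tolerance band.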

With DeepSeek on NexaStack, businesses can unleash AI's full power while retaining control, security, and compliance.

Step-by-Step DeepSeek Deployment Guide

Hardware Requirements and Optimisation

For optimal DeepSeek performance, businesses require:

Installation and Configuration Process

Performance Tuning for Enterprise Workloads

Following these steps enables enterprises to achieve secure DeepSeek deployments with low latency and high throughput.
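For the hardware-sizing step, a first-order estimate of the accelerator memory needed to hold the model weights is parameter count × bytes per parameter. The sketch below assumes that rule of thumb; the 20% overhead allowance for the KV cache, activations, and runtime buffers is an illustrative assumption, not a NexaStack or DeepSeek figure. For reference, DeepSeek-R1 has 671 billion total parameters (of which 37 billion are active per token, though all must be resident in memory), and its distilled variants range from 1.5 to 70 billion.

```python
# Bytes needed to store one parameter at each numeric precision.
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "fp16": 2.0, "fp8": 1.0, "int4": 0.5}


def estimate_weight_memory_gb(num_params_billion: float,
                              precision: str = "bf16",
                              overhead: float = 0.2) -> float:
    """Rough memory (GB, where 1 GB = 1e9 bytes) needed to serve a model.

    `overhead` is a hypothetical allowance for KV cache, activations and
    runtime buffers; real needs depend on context length and batch size.
    """
    if precision not in BYTES_PER_PARAM:
        raise ValueError(f"unknown precision: {precision}")
    weight_bytes = num_params_billion * 1e9 * BYTES_PER_PARAM[precision]
    return weight_bytes * (1.0 + overhead) / 1e9
```

With no overhead, the full 671B-parameter model in FP8 needs about 671 GB for weights alone, which is why it is normally sharded across several GPUs, whereas a 7B distilled model in BF16 needs about 14 GB.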

Ensuring Data Privacy and Security

End-to-End Encryption Implementation

DeepSeek on NexaStack applies AES-256 encryption to data at rest and uses TLS to protect sensitive data in transit. Hardware Security Modules (HSMs) strengthen this further by keeping encryption keys in tamper-resistant hardware.
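The in-transit half of this setup can be illustrated with Python's standard `ssl` module. The sketch below builds a client context for calling a DeepSeek endpoint that enforces certificate and hostname verification and refuses protocol versions older than TLS 1.2. The function name and the optional private CA bundle path are assumptions about how an internal deployment might be configured.

```python
import ssl
from typing import Optional


def make_client_tls_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Client-side TLS settings for calling an internal DeepSeek endpoint.

    `ca_file` is a hypothetical path to the organisation's private CA
    bundle; when None, the system's default trust store is used.
    """
    # create_default_context turns on certificate and hostname verification.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    # Refuse SSLv3, TLS 1.0 and TLS 1.1.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

The resulting context can be passed to `urllib.request.urlopen(..., context=ctx)` or `http.client.HTTPSConnection(host, context=ctx)`.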

Air-Gapped Deployment Options

NexaStack supports fully isolated, air-gapped deployments for sensitive environments, blocking external attacks and unauthorised access. This option is best suited to organisations in finance, healthcare, and government that handle classified or highly sensitive data.
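In an air-gapped environment, model weights have to be brought in on offline media, so a common safeguard is to check every file against SHA-256 digests recorded before the transfer. The sketch below assumes a simple manifest mapping file names to expected hex digests; the manifest format and the function names are hypothetical, not part of NexaStack.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large weight shards never load fully."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifacts(model_dir: Path, manifest: Dict[str, str]) -> List[str]:
    """Return the names of files that are missing or whose digest differs."""
    failures = []
    for name, expected in manifest.items():
        path = model_dir / name
        if not path.is_file():
            failures.append(name)
        elif sha256_of(path) != expected.lower():
            failures.append(name)
    return failures
```

Loading the model would proceed only when `verify_artifacts` returns an empty list.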

Compliance with Industry Regulations

NexaStack adheres to:

These security measures make NexaStack an ideal choice for mission-critical AI deployments.

Advanced Features for Enterprise Users

Custom Model Fine-Tuning in Secure Environments

NexaStack allows enterprises to train and fine-tune DeepSeek models in isolated, high-security environments, ensuring data privacy, regulatory compliance, and protection from external threats without relying on third-party cloud platforms.
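If fine-tuning inside an isolated environment uses the Hugging Face libraries (an assumption; the post does not say which training stack NexaStack uses), those libraries can be told never to contact the Hugging Face Hub through their documented offline environment variables. The helper name and the local model path below are hypothetical.

```python
import os
from pathlib import Path

# Offline switches read by huggingface_hub, transformers and datasets.
OFFLINE_ENV = {
    "HF_HUB_OFFLINE": "1",
    "TRANSFORMERS_OFFLINE": "1",
    "HF_DATASETS_OFFLINE": "1",
}


def enable_offline_mode(local_model_dir: str) -> Path:
    """Force offline mode and check that the weights are already staged.

    Call this before importing transformers or datasets so the settings
    take effect. `local_model_dir` is a hypothetical path to pre-staged
    DeepSeek weights.
    """
    os.environ.update(OFFLINE_ENV)
    model_path = Path(local_model_dir)
    if not model_path.is_dir():
        raise FileNotFoundError(f"model weights not staged at {model_path}")
    return model_path
```

The returned path can then be passed to `AutoModelForCausalLM.from_pretrained(path, local_files_only=True)` so that loading uses only local files.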

API Integration with Existing Infrastructure

With native API support and flexible connectors, NexaStack integrates with CRM, ERP, cloud storage systems, and other enterprise applications, enabling AI-driven automation, workflow optimisation, and data synchronisation across various business processes.
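As an illustration of such an integration, the sketch below builds (but does not send) a chat completion request from a CRM or ERP connector. It assumes the DeepSeek deployment exposes an OpenAI-compatible `/v1/chat/completions` route, as serving engines such as vLLM do; the base URL, model name, and API key are hypothetical values, not NexaStack's actual API.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str,
                       api_key: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request without sending it.

    Assumes an OpenAI-compatible endpoint; all arguments are hypothetical
    values supplied by the integrating system.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
```

The connector would send it with `urllib.request.urlopen(req)` and read the generated text from `choices[0].message.content` in the JSON response.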

Multi-Tenant Access Controls

Organisations can implement granular role-based access controls (RBAC) and identity management, ensuring secure, multi-user AI deployments, strict data segregation, and user-specific access permissions while maintaining audit logs and compliance tracking.
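Granular RBAC with tenant segregation can be sketched as a permission check that writes an audit record for every decision. The roles, permission names, and classes below are hypothetical; a production deployment would back them with the organisation's identity provider rather than an in-memory mapping.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple

# Hypothetical role-to-permission mapping for a shared DeepSeek deployment.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "viewer": {"query"},
    "analyst": {"query", "view_logs"},
    "admin": {"query", "view_logs", "fine_tune", "manage_users"},
}


@dataclass
class User:
    name: str
    tenant: str
    roles: Set[str]


@dataclass
class AccessController:
    # Each entry: (user, user's tenant, action, resource tenant, allowed).
    audit_log: List[Tuple[str, str, str, str, bool]] = field(default_factory=list)

    def is_allowed(self, user: User, action: str, resource_tenant: str) -> bool:
        # Tenants never reach each other's resources, whatever their roles.
        same_tenant = user.tenant == resource_tenant
        granted = any(action in ROLE_PERMISSIONS.get(r, set()) for r in user.roles)
        allowed = same_tenant and granted
        # Record denials as well as grants for compliance review.
        self.audit_log.append((user.name, user.tenant, action, resource_tenant, allowed))
        return allowed
```

Because denials are logged alongside grants, the audit trail shows attempted as well as successful access.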

As privacy-first AI solutions continue to gain traction, NexaStack is positioned to become the gold standard for secure enterprise AI deployment with cutting-edge security measures. Organisations aiming for a private, efficient, and regulation-compliant DeepSeek deployment can start with NexaStack today!

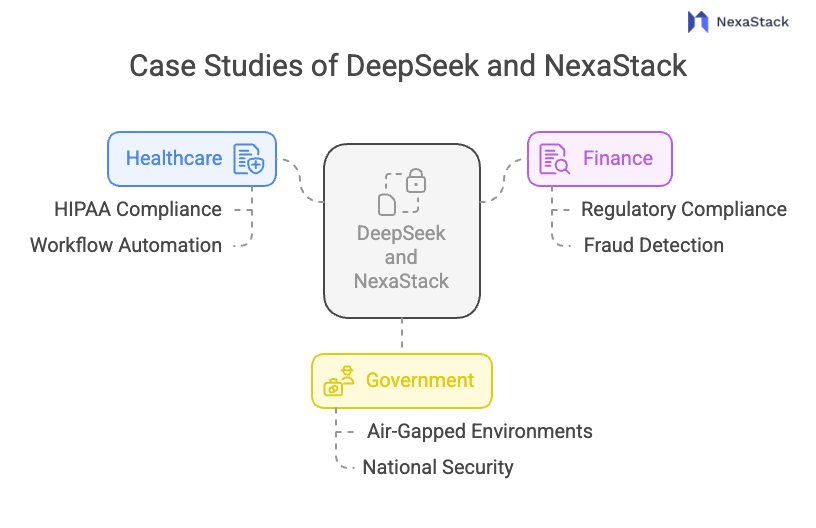

Fig 2: Case Studies of DeepSeek
