Architecture Overview

A self-hosted AI architecture with strong compute, storage, orchestration, and security elements provides scalable and reliable performance. The most important layers are:

- Compute Layer: Powerful GPUs or TPUs for model training and inference. Multi-core CPUs can be used for smaller workloads.

- Storage Layer: High-performance, horizontally scalable storage for data sets, model weights, and logs.

- Orchestration Layer: Container tools such as Kubernetes or Docker Swarm orchestrate model deployment, scaling, and fault tolerance.

- API Gateway: REST or gRPC endpoints allow smooth integration with enterprise applications, served through tools such as Triton Inference Server or FastAPI.

- Monitoring and Logging: Prometheus and Grafana give real-time feedback on model performance, resource usage, and errors.

- Security Layer: Firewalls, encryption, and identity management systems (such as Keycloak) safeguard data and models against unauthorised access or attacks.

This modular design provides high availability, scalability, and compatibility with existing enterprise infrastructure, allowing organizations to deploy AI solutions specific to their operational requirements.

Hardware and Software Requirements for AI Deployment

Hardware Requirements

The hardware stack for self-hosted AI must balance performance, scalability, and cost. The major components are:

Compute:

- GPUs/TPUs: NVIDIA GPUs are well suited to inference with large models such as transformers. Google TPUs are an alternative for some workloads.

- CPUs: For smaller models or preprocessing, high-core-count CPUs offer affordable performance.

Memory:

- System RAM: Sufficient for multitasking and processing large datasets during inference.

- GPU VRAM: Adequate for inference of large language models (LLMs) or computer vision models.
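
As a rough sizing aid for the GPU VRAM item above, the memory needed for model weights can be approximated as parameter count times bytes per parameter. The sketch below is illustrative only: the function names and the 20% overhead allowance for activations and cache are assumptions, not figures from this guide, and real usage depends on batch size, context length, and the serving framework.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Memory needed for the model weights alone, in GB (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def vram_estimate_gb(num_params: float, precision: str = "fp16",
                     overhead: float = 0.2) -> float:
    """Weights plus a fractional allowance for activations and cache.

    The default 20% overhead is an illustrative assumption, not a measurement.
    """
    return weight_memory_gb(num_params, precision) * (1 + overhead)


if __name__ == "__main__":
    # A hypothetical 7-billion-parameter model at three precisions.
    for precision in ("fp32", "fp16", "int8"):
        estimate = vram_estimate_gb(7e9, precision)
        print(f"{precision}: about {estimate:.1f} GB")
```

Under these assumptions, a 7-billion-parameter model stored in fp16 needs 14 GB for weights alone, which already rules out GPUs with less memory unless the model is quantized or split across devices.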

Storage:

- Primary Storage: NVMe SSDs for quick access to model weights and datasets.

- Secondary Storage: HDDs or distributed storage for backups and archival.

Networking:

- High-Speed Interconnects: 10 Gigabit Ethernet (or faster) or InfiniBand for low-latency data transfer in multi-node clusters.

- Bandwidth: Sufficient for distributed inference on multiple GPUs.

Power and Cooling:

- UPS: Uninterruptible power supplies to keep systems running through power outages.

- Cooling: Liquid or high-efficiency air cooling for GPU-intensive configurations to avoid thermal throttling.

Software Requirements

The software stack should support model development, deployment, and monitoring while remaining compatible with enterprise IT systems. The following components are crucial:

Operating System:

- Ubuntu Server 22.04 LTS or Red Hat Enterprise Linux (RHEL) 8 for stability, security patches, and enterprise-level support.

AI Frameworks:

- vLLM for efficient execution and serving of large language models.

Containerization and Orchestration:

- Docker for packaging models and dependencies.

- Kubernetes for orchestrating containerised workloads across clusters.

- Helm for managing Kubernetes configurations.

Model Serving:

- NVIDIA Triton Inference Server for multi-model serving with high throughput.

- KServe for serverless inference in Kubernetes environments.

- FastAPI for lightweight REST API deployments.

Monitoring and Observability:

- Prometheus for metrics collection.

- Grafana for visualisation of performance dashboards.

- ELK Stack (Elasticsearch, Logstash, Kibana) for log aggregation and analysis.

CI/CD Pipelines:

- GitLab CI/CD or Jenkins for automatic retraining and deployment of models.

- ArgoCD for GitOps-style Kubernetes deployments.

Security Tools:

- Keycloak for managing identities and access.

- HashiCorp Vault for managing secrets.

- Suricata for intrusion detection.

AI Implementation Strategy and Planning Guide

Stakeholder Alignment

Implementation requires collaboration across departments. The key steps are:

- Identify Stakeholders: Involve IT, data science, compliance, security, and business unit leaders.

- Define Use Cases: Prioritise high-impact use cases, such as supply chain optimisation, predictive maintenance, or customer sentiment analysis.

- Set Success Criteria: Define KPIs, such as:

  - Model accuracy (e.g., >90% for classification tasks).

  - Inference latency (e.g., <100ms for real-time applications).

  - Cost reductions (e.g., 50% decrease in cloud costs).

  - Uptime (e.g., 99.9% availability).

- Budget Planning: Project hardware costs, software licenses, and staff (data scientists, DevOps engineers).
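
To make the uptime KPI above concrete, an availability target can be translated into a downtime budget. The following is a minimal sketch of that arithmetic; the function name is hypothetical, and it assumes availability is measured over wall-clock time with no excluded maintenance windows.

```python
def allowed_downtime_minutes(availability_pct: float, period_days: float) -> float:
    """Minutes of downtime permitted over a period at a given availability target."""
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - availability_pct / 100)


if __name__ == "__main__":
    # 99.9% availability over a 30-day month and over a 365-day year.
    print(round(allowed_downtime_minutes(99.9, 30), 1))        # 43.2 (minutes)
    print(round(allowed_downtime_minutes(99.9, 365) / 60, 2))  # 8.76 (hours)
```

A 99.9% target therefore leaves roughly 43 minutes per month for all outages and maintenance combined, which is worth agreeing on with stakeholders before committing to it.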

Risk Mitigation Strategies

- Skill Gaps: Engage AI experts or consultancies for the initial setup.

- Hardware Delays: Line up backup vendors or rent equipment temporarily.

- Model Performance Issues: Evaluate several candidate models (e.g., BERT versus DistilBERT) to compare reliability.

- Budget Overruns: Use staged deployment to spread costs over time.

- Compliance Risks: Engage the compliance and legal departments early to ensure alignment with applicable regulations.

Step-by-Step AI Deployment and Integration

Model Selection and Preparation

- Model Selection: Choose pre-trained models from hubs such as Hugging Face (e.g., BERT for NLP, YOLO for computer vision), or train custom models for specific applications.

- Fine-Tuning: Fine-tune models on in-house data to improve accuracy. For instance, fine-tune a sentiment analysis model on customer ratings so that it picks up domain-specific signals.

- Validation: Validate models for accuracy, stability, and edge cases, tracking results with tools like MLflow or Weights & Biases.

Deployment Workflow

Containerization:

- Package the model, dependencies, and inference scripts into a Docker image.

- Use multi-stage Docker builds to minimize the image size (e.g., <2GB).

Orchestration:

- Deploy containers on Kubernetes for scaling and fault tolerance.

- Scale Kubernetes Deployments by adjusting the replica count (e.g., 3 replicas for high availability).

- Use Helm charts for reusable configurations.
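
The orchestration steps above can be sketched as a Kubernetes Deployment manifest generated from Python. This is an illustration under stated assumptions rather than a production configuration: the model name, image URL, and port are hypothetical, and the GPU limit assumes the NVIDIA device plugin is installed on the cluster.

```python
import json
from typing import Any, Dict


def build_deployment(name: str, image: str, replicas: int = 3,
                     port: int = 8000, gpus: int = 1) -> Dict[str, Any]:
    """Build a Kubernetes apps/v1 Deployment manifest as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # Three replicas keep the service available if one pod fails.
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                        # Assumes the NVIDIA device plugin exposes this resource.
                        "resources": {"limits": {"nvidia.com/gpu": gpus}},
                    }]
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical model name and registry URL, for illustration only.
    manifest = build_deployment(
        "sentiment-model", "registry.example.com/sentiment-model:1.0"
    )
    print(json.dumps(manifest, indent=2))
```

Because kubectl accepts JSON as well as YAML, the printed output could be saved to a file and applied with `kubectl apply -f`. In practice the same fields would usually live in a Helm chart template so that the replica count and image tag can be set per environment.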

API Configuration:

Expose the model through REST or gRPC endpoints with Triton Inference Server or FastAPI.

Testing:

- Perform load testing using tools such as Locust to replicate production traffic.

- Validate model predictions against ground truth data.

- Measure latency and throughput at various loads.
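
As a starting point for the latency and throughput measurement above, the sketch below times sequential calls to an inference function and reports percentile latencies. The `benchmark` helper and the stand-in `fake_predict` function are hypothetical. It measures a single client only, so it complements rather than replaces a concurrent load test with a tool such as Locust.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(predict: Callable[[str], object], payload: str,
              num_requests: int = 200) -> Dict[str, float]:
    """Call predict() sequentially and summarise latency and throughput."""
    latencies_ms = []
    start = time.perf_counter()
    for _ in range(num_requests):
        t0 = time.perf_counter()
        predict(payload)
        latencies_ms.append((time.perf_counter() - t0) * 1000)
    elapsed_s = time.perf_counter() - start

    # quantiles(n=100) returns 99 cut points: index 49 is p50, index 94 is p95.
    cuts = statistics.quantiles(latencies_ms, n=100)
    return {
        "p50_ms": cuts[49],
        "p95_ms": cuts[94],
        "throughput_rps": num_requests / elapsed_s,
    }


def fake_predict(text: str) -> str:
    """Stand-in for a real model call; sleeps about 1 ms to simulate work."""
    time.sleep(0.001)
    return "positive"


if __name__ == "__main__":
    print(benchmark(fake_predict, "great product", num_requests=100))
```

Reporting p95 alongside the median matters because a latency target such as "under 100 ms" is usually judged on tail latency, not on the average request.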

Integration:

- Integrate APIs into business applications (e.g., Salesforce, SAP) with middleware like Apache Kafka or RabbitMQ.

- Use retries and circuit breakers for resilience.
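
The retry and circuit-breaker pattern mentioned above can be sketched as follows. This is a simplified, single-threaded illustration with hypothetical class and function names and default thresholds. A production integration would more likely rely on an established resilience library or on the middleware's own retry features.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_timeout_s: float = 30.0):
        self.failure_threshold = failure_threshold
        self.reset_timeout_s = reset_timeout_s
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, func: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if time.monotonic() - self.opened_at < self.reset_timeout_s:
                raise CircuitOpenError("circuit open; skipping call")
            # Timeout elapsed: allow one trial call (half-open state).
            self.opened_at = None
        try:
            result = func()
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = time.monotonic()
            raise
        self.failures = 0  # a success closes the circuit again
        return result


def call_with_retries(func: Callable[[], T], breaker: CircuitBreaker,
                      max_attempts: int = 3, base_delay_s: float = 0.5) -> T:
    """Retry func through the breaker with exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return breaker.call(func)
        except CircuitOpenError:
            raise  # do not keep retrying while the circuit is open
        except Exception:
            if attempt == max_attempts:
                raise
            time.sleep(base_delay_s * 2 ** (attempt - 1))
    raise RuntimeError("unreachable")
```

Here `func` would wrap the actual request to the model API. Retries absorb brief, transient failures, while the breaker stops callers from piling requests onto an endpoint that is already down.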

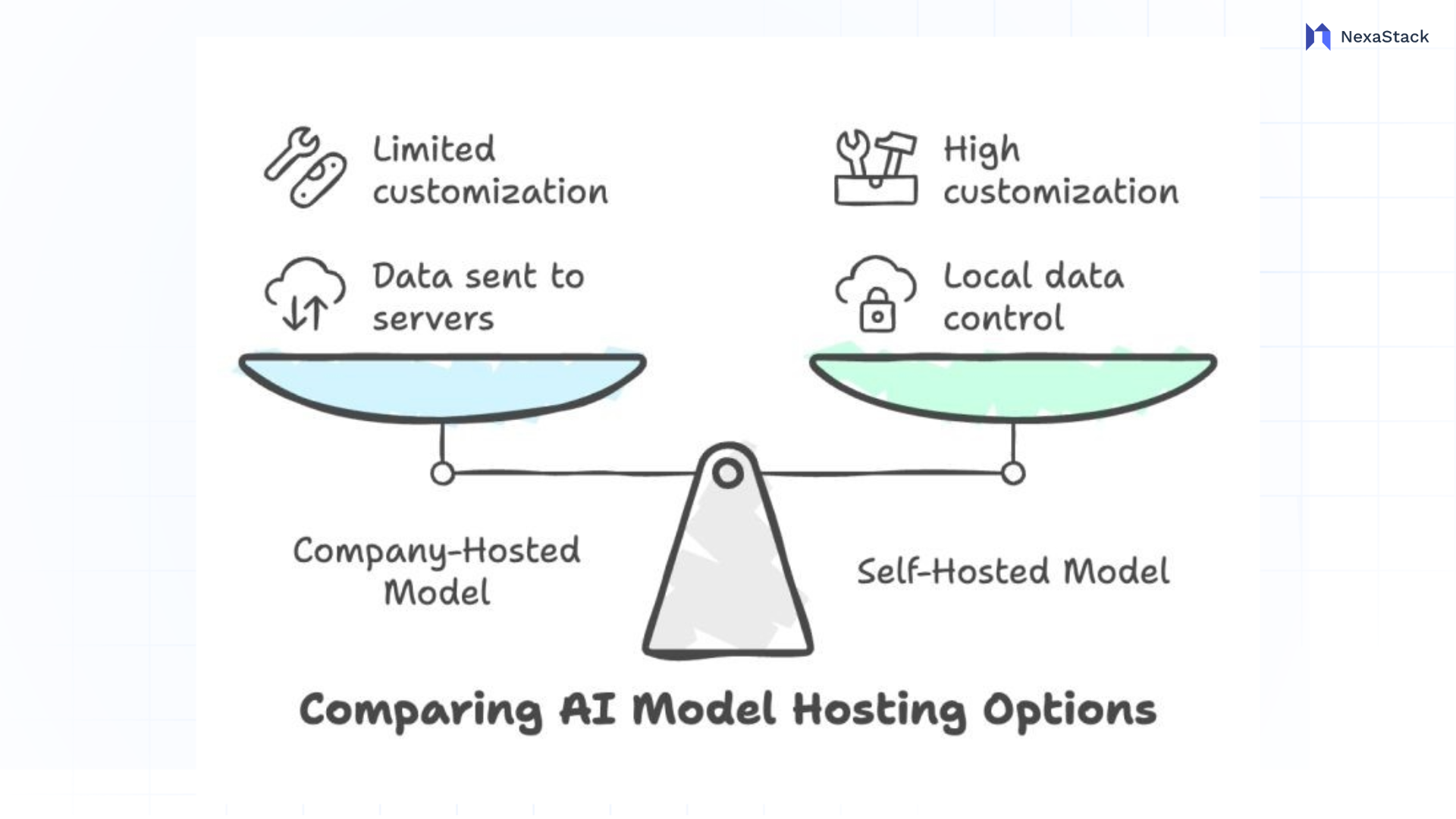

Figure 2: Comparing AI Model Hosting

Key Performance Indicators and AI Success Metrics

Success Indicators

- Accuracy: >90% for classification tasks, >85% for generative tasks.

- Latency: Less than 100ms for real-time, less than 1s for batch processing.

- Cost Savings: Reduce costs by 50%–70% compared to SaaS offerings.

- Uptime: Achieve 99.9% uptime through robust monitoring and failover.

- User Adoption: Reach >80% user adoption of AI-driven features (e.g., chatbot usage).

- ROI: Positive ROI within 12–18 months of implementation.
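
The cost-savings and ROI indicators above can be checked with simple payback arithmetic. The sketch below uses hypothetical function names and made-up cost figures chosen purely for illustration. They are not measurements or benchmarks, and a real model would also account for depreciation, staffing, and energy.

```python
def savings_pct(cloud_monthly: float, self_hosted_monthly: float) -> float:
    """Percentage reduction in monthly running cost versus the cloud baseline."""
    return 100 * (cloud_monthly - self_hosted_monthly) / cloud_monthly


def payback_months(upfront_cost: float, cloud_monthly: float,
                   self_hosted_monthly: float) -> float:
    """Months until cumulative monthly savings cover the upfront investment."""
    monthly_savings = cloud_monthly - self_hosted_monthly
    if monthly_savings <= 0:
        raise ValueError("self-hosting never pays back at these running costs")
    return upfront_cost / monthly_savings


if __name__ == "__main__":
    # Illustrative figures only: 180,000 upfront, 20,000 per month for the
    # cloud baseline, 8,000 per month to run the self-hosted stack.
    print(savings_pct(20_000, 8_000))              # 60.0
    print(payback_months(180_000, 20_000, 8_000))  # 15.0
```

With these example inputs, the running-cost reduction is 60% and the investment pays back in 15 months, both inside the target ranges listed above. Different inputs can easily fall outside them, so the calculation is best rerun with actual quotes.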