ML model monitoring is the systematic process of observing, tracking, and analysing a machine learning model’s behaviour in production. It helps ensure that the model’s predictions remain accurate, fair, and aligned with business goals.

A model is exposed to constantly evolving data, environmental shifts, and system dynamics in production. Without continuous oversight, these changes can silently erode model performance and jeopardise business outcomes.

This is where Machine Learning Monitoring becomes indispensable. It acts as a safeguard, protecting the integrity of your ML models and the ROI of your AI initiatives.

Unlike traditional software, machine learning models rely on patterns learned from historical data. However, real-world data is never static. New trends, user behaviour changes, market dynamics, and unexpected events (like a pandemic or economic shift) can cause significant deviations in data. These changes, if undetected, can lead to poor model performance, biased decisions, or even system failures.

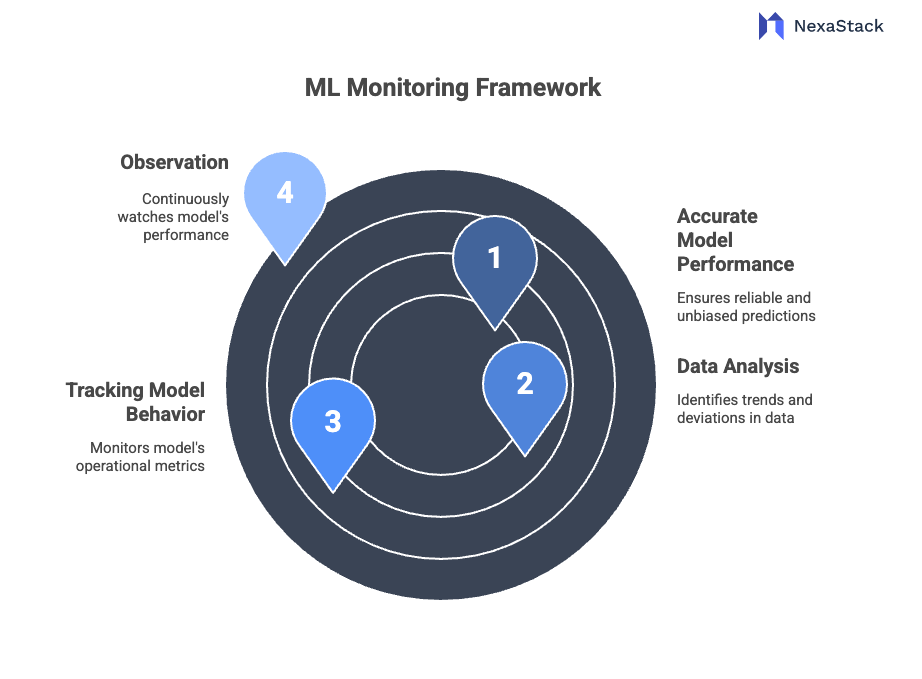

ML Monitoring encompasses:

- Data Monitoring: Checks if input features are consistent with the training data.
- Model Performance Monitoring: Assesses how well the model is predicting outcomes.
- System Monitoring: Measures latency, uptime, throughput, and resource consumption.
- Ethical Monitoring: Ensures fairness, accountability, and transparency in predictions.

By monitoring these aspects, data teams can quickly respond to anomalies, retrain models, and keep AI systems healthy.

Figure 1: ML Monitoring Framework

Why is Monitoring Machine Learning Models Important?

1. Performance Degradation Is Inevitable

ML models degrade over time, especially when faced with shifting data. This decay, known as model drift, can cause accuracy to plummet if left unchecked. Monitoring allows early detection of such issues, avoiding catastrophic decision-making failures.

For instance, a model used in credit risk assessment might perform well during a stable economy but fail during a recession. Without monitoring, these failures might only be discovered after losses have occurred.

2. Changing Business Environment

Models are often built for a specific context. However, businesses grow, customer segments evolve, and regulations change. Monitoring shows whether the model still fits the new environment and flags when it no longer does.

3. Compliance and Fairness

Industries like finance, healthcare, and law require models to be fair and compliant with strict guidelines. Bias can creep in subtly through data drift. Continuous monitoring helps surface these issues early, ensuring transparency and audit-readiness.

4. Avoiding Technical Debt

Deploying models without setting up proper monitoring introduces ML-specific technical debt. Just as DevOps monitoring catches backend server issues, ML monitoring detects silent prediction failures.

5. Ensuring ROI and Stakeholder Confidence

AI investments are justified only when they consistently deliver business value. Monitoring proves model effectiveness, ensures continued relevance, and builds trust among business stakeholders.

What Should You Monitor?

1. Model Performance Metrics

Tracking standard evaluation metrics is essential:

- Classification Models: Accuracy, Precision, Recall, F1-score, ROC-AUC
- Regression Models: RMSE, MAE, R-squared

However, these metrics are meaningful only when real labels (ground truth) are available. In many real-world cases, labels are delayed. In such scenarios, proxy metrics (like user engagement or click-through rates) can be early indicators.
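Once delayed labels do arrive, they can be joined back to the logged predictions and scored. The sketch below is a minimal, standard-library illustration for a binary classifier; the function name `binary_metrics` and the sample batch are assumptions made for this example, not part of any particular monitoring tool.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    # Guard against empty denominators (e.g. no positive predictions in the batch).
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Hypothetical batch: delayed labels joined to logged predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = binary_metrics(y_true, y_pred)
print(metrics)
```

Running the same function on each new batch of labelled data gives a time series that can be compared against the pre-deployment figures.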

2. Input Data Quality

Garbage in, garbage out. Even the best-trained model cannot function with poor data. Monitor for:

- Missing values
- Null entries
- Unexpected data types
- Outliers
- Categorical levels not seen during training

Example: A fraud detection model may malfunction if a new transaction type isn’t recognised due to missing categorical handling.
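Several of these checks can run on each incoming record before it reaches the model. The following is a rough sketch using only the standard library; the schema, the field names, and the `check_record` helper are hypothetical and would need to match your own feature definitions. Outlier checks are left out to keep it short.

```python
def check_record(record, schema, known_categories):
    """Return a list of data-quality issues found in one input record."""
    issues = []
    for field, expected_type in schema.items():
        value = record.get(field)
        if value is None:
            issues.append(f"{field}: missing or null")
        elif not isinstance(value, expected_type):
            issues.append(
                f"{field}: expected {expected_type.__name__}, got {type(value).__name__}"
            )
        elif field in known_categories and value not in known_categories[field]:
            issues.append(f"{field}: unseen category {value!r}")
    return issues


# Hypothetical schema and the categorical levels observed during training.
schema = {"amount": float, "transaction_type": str}
known_categories = {"transaction_type": {"card", "transfer"}}

record = {"amount": 42.5, "transaction_type": "crypto"}
print(check_record(record, schema, known_categories))
```

Counting these issues per hour or per batch turns one-off validation into a monitored data-quality metric.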

3. Data Drift and Concept Drift

- Data Drift: Occurs when input feature distributions change (e.g., more users from a new region).
- Concept Drift: Happens when the relationship between input and output changes (e.g., customers respond differently to marketing due to an economic downturn).

Monitoring tools compare real-time data distribution to training data and flag significant deviations using metrics like:

- Population Stability Index (PSI)
- Kullback-Leibler divergence
- Kolmogorov-Smirnov test
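To make one of these metrics concrete, here is a minimal sketch of PSI for a single numeric feature. It bins the training (expected) values into equal-width buckets, applies the same buckets to production (actual) values, and sums `(actual - expected) * ln(actual / expected)` over the bucket shares. The bin count, the `eps` floor for empty buckets, and the sample data are assumptions chosen for illustration.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between two samples of one numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all expected values are equal

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the training range are clipped into the edge buckets.
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor empty buckets at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical data: production values concentrated in the upper half of the training range.
training = [i / 100 for i in range(100)]
production = [0.5 + i / 200 for i in range(100)]
print(psi(training, training))    # identical samples give 0.0
print(psi(training, production))  # a large value signals a shifted distribution
```

A commonly cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but suitable cut-offs depend on the feature and the use case.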

4. Prediction Drift

Even if inputs remain stable, a shift in predicted outcomes (prediction drift) can indicate issues. For example:

- A classification model suddenly predicts 95% of outputs as a single class.
- Regression outputs fluctuate abnormally without any input change.

Monitoring the distribution of outputs is essential to catch such issues.
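For the classification case, a simple check is to track the share of the most frequent predicted class in a recent window. The sketch below assumes a hypothetical 95% limit and made-up labels; in practice the limit should come from the class distribution seen at baseline.

```python
from collections import Counter


def prediction_collapse(predictions, max_share=0.95):
    """Flag a window where one class accounts for at least max_share of predictions."""
    label, count = Counter(predictions).most_common(1)[0]
    share = count / len(predictions)
    return share >= max_share, label, share


# Hypothetical window of recent predictions.
window = ["approve"] * 97 + ["reject"] * 3
print(prediction_collapse(window))
```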

5. System Health Metrics

Technical reliability affects model usability:

- Latency: Is inference fast enough?
- Throughput: Can the system scale with demand?
- Error Rates: Are there runtime errors or unexpected crashes?

Combining model metrics with system observability gives a holistic picture of ML performance.
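As an illustration, the three system metrics above can be summarised from request logs for a fixed time window. This sketch assumes you already collect per-request latencies and an error count; the nearest-rank percentile and the field names are choices made for the example.

```python
import math


def percentile(values, q):
    """Nearest-rank percentile (q between 0 and 100) of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q * len(ordered) / 100))
    return ordered[rank - 1]


def health_summary(latencies_ms, error_count, window_seconds):
    """Summarise latency, throughput, and error rate for one time window."""
    n = len(latencies_ms)
    return {
        "p50_ms": percentile(latencies_ms, 50),
        "p95_ms": percentile(latencies_ms, 95),
        "throughput_rps": n / window_seconds,
        "error_rate": error_count / n,
    }


# Hypothetical one-minute window: 100 requests, 2 of which failed.
latencies = list(range(1, 101))
summary = health_summary(latencies, error_count=2, window_seconds=60)
print(summary)
```

Tail percentiles such as p95 are usually more informative than the mean, because a small number of slow requests can hide behind a healthy average.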

How to Monitor Machine Learning Models

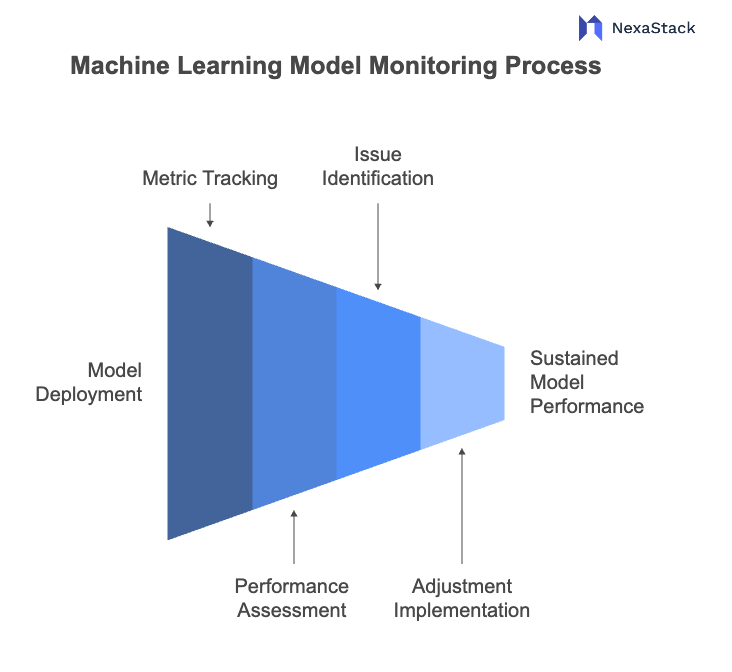

Figure 2: Machine Learning Model Monitoring Process

Setting up ML monitoring requires thoughtful integration of infrastructure, tools, and processes. Here’s how to implement it effectively:

1. Establish Baselines

Calculate baseline metrics from training, validation, and test data before deployment. These serve as reference points for comparing post-deployment performance and data.

Baseline examples:

- Feature statistics: mean, median, mode
- Class distribution
- Model accuracy on validation data
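The first two baseline examples can be computed with the standard library alone. The sketch below uses made-up training values; `feature_baseline` and `class_distribution` are hypothetical helper names, and the resulting dictionaries would typically be stored next to the model version they belong to.

```python
import statistics
from collections import Counter


def feature_baseline(values):
    """Summary statistics for one feature, computed on training data."""
    return {
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "mode": statistics.mode(values),
    }


def class_distribution(labels):
    """Share of each class label in the training set."""
    total = len(labels)
    return {label: count / total for label, count in Counter(labels).items()}


# Hypothetical training data.
print(feature_baseline([1, 2, 2, 3, 7]))
print(class_distribution(["fraud", "ok", "ok", "ok"]))
```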

2. Automate Logging Pipelines

Logs must include:

- Raw input features
- Timestamps
- Model version
- Prediction outputs
- Confidence scores

Ensure logs are structured, secure, and queryable via a centralised log management system.
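One way to keep logs structured and queryable is to emit one JSON object per prediction. The following sketch covers the five fields listed above; the field names and the `build_log_record` helper are assumptions, and shipping the record to a log management system is left out.

```python
import json
from datetime import datetime, timezone


def build_log_record(features, prediction, confidence, model_version):
    """Serialise one prediction event as a single JSON line."""
    return json.dumps(
        {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_version": model_version,
            "features": features,
            "prediction": prediction,
            "confidence": confidence,
        },
        sort_keys=True,
    )


# Hypothetical prediction event.
line = build_log_record({"amount": 12.5, "country": "DE"}, "approve", 0.91, "v1.3.0")
print(line)
```

If raw features contain personal data, consider masking or hashing those fields before the record is written.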

3. Enable Drift Detection Tools

Implement drift detection mechanisms using:

- Statistical tests (e.g., KS test, PSI)
- ML-specific drift detection libraries (Evidently AI, Alibi Detect)

Set thresholds for acceptable variance, and trigger alerts when they’re crossed.
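As a rough illustration of a thresholded statistical test, the sketch below computes the two-sample Kolmogorov-Smirnov statistic (the largest gap between two empirical CDFs) for each feature and reports those that exceed a limit. The threshold value, feature names, and data are hypothetical; a statistics library such as SciPy can additionally provide a p-value, which this sketch does not compute.

```python
from bisect import bisect_right


def ks_statistic(reference, current):
    """Two-sample KS statistic: the largest gap between the two empirical CDFs."""
    ref, cur = sorted(reference), sorted(current)
    gap = 0.0
    # The largest gap is always reached at one of the observed values.
    for x in ref + cur:
        cdf_ref = bisect_right(ref, x) / len(ref)
        cdf_cur = bisect_right(cur, x) / len(cur)
        gap = max(gap, abs(cdf_ref - cdf_cur))
    return gap


def drifted_features(reference, current, threshold=0.1):
    """Return {feature: statistic} for features whose statistic exceeds the threshold."""
    flagged = {}
    for name, ref_values in reference.items():
        stat = ks_statistic(ref_values, current[name])
        if stat > threshold:
            flagged[name] = stat
    return flagged


# Hypothetical training (reference) and production (current) samples.
reference = {"age": [25, 31, 38, 44, 52], "income": [30, 45, 50, 62, 80]}
current = {"age": [26, 30, 39, 45, 51], "income": [70, 85, 90, 95, 120]}
flagged = drifted_features(reference, current, threshold=0.5)
print(flagged)  # only "income" exceeds the limit in this example
```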

4. Track Metrics in Real-Time Dashboards

Use dashboards to visualise trends, detect anomalies, and compare current vs. baseline data. Tools like Grafana, Kibana, or dedicated ML monitoring platforms can be integrated with Prometheus or custom APIs.

5. Alerting and Escalation

Automate alert generation when anomalies or threshold breaches are detected:

- Sudden accuracy drop
- Increased latency
- Feature drift

Implement escalation paths—alerts can notify data scientists, DevOps teams, or trigger automated retraining pipelines.
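A minimal sketch of this logic might look like the following. The routing table, team names, metric names, and limits are all hypothetical; in a real setup the result would be handed to a paging or messaging system rather than printed.

```python
# Hypothetical routing table: which team or pipeline handles which metric.
ROUTES = {
    "accuracy": "data-science",
    "latency_ms": "devops",
    "feature_psi": "retraining-pipeline",
}


def evaluate_alerts(metrics, thresholds):
    """Compare current metrics against limits and attach an escalation target.

    thresholds maps a metric name to (direction, limit), where direction is
    "min" (alert when the value falls below the limit) or "max" (alert when above).
    """
    alerts = []
    for name, (direction, limit) in thresholds.items():
        value = metrics.get(name)
        if value is None:
            continue  # metric not reported in this window
        breached = value < limit if direction == "min" else value > limit
        if breached:
            alerts.append(
                {"metric": name, "value": value, "limit": limit,
                 "notify": ROUTES.get(name, "on-call")}
            )
    return alerts


# Hypothetical readings for the current window.
metrics = {"accuracy": 0.81, "latency_ms": 120, "feature_psi": 0.31}
thresholds = {
    "accuracy": ("min", 0.85),
    "latency_ms": ("max", 200),
    "feature_psi": ("max", 0.25),
}
alerts = evaluate_alerts(metrics, thresholds)
for alert in alerts:
    print(alert)
```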

6. A/B Testing and Shadow Deployments

Before pushing updated models, use:

- A/B testing: Compare model versions in production.
- Shadow deployment: Run a new model silently alongside the old one without affecting live decisions. Compare their predictions to evaluate performance.
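The shadow pattern can be sketched in a few lines: the live model's answer is always the one returned, while the candidate's answer is only recorded for comparison. The two lambda "models" and the request values below are placeholders for illustration.

```python
def shadow_compare(requests, live_model, shadow_model):
    """Serve live predictions while measuring how often the shadow model disagrees."""
    served, disagreements = [], 0
    for x in requests:
        live = live_model(x)
        try:
            shadow = shadow_model(x)
        except Exception:
            shadow = None  # a shadow failure must never affect the live response
        if shadow != live:
            disagreements += 1  # failures are counted as disagreements here
        served.append(live)  # only the live prediction reaches the caller
    return served, disagreements / len(requests)


# Hypothetical models: simple threshold rules standing in for real predictors.
live_model = lambda amount: amount > 5
shadow_model = lambda amount: amount > 7

served, disagreement_rate = shadow_compare([1, 6, 8, 10], live_model, shadow_model)
print(served, disagreement_rate)
```

A high disagreement rate is not necessarily bad, since the new model may be the better one, but it shows where to look before promoting it.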

When Should You Monitor Models?

ML monitoring is a continuous process, but some key moments include:

1. Initial Deployment

This is when unexpected bugs or performance issues are most likely to occur. Intensive monitoring during the first few days or weeks is critical.

2. Regular Intervals

Depending on business sensitivity, implement a schedule for weekly, bi-weekly, or monthly checks. For high-stakes applications, real-time monitoring is preferred.

3. Trigger-Based Events

Monitor intensively when:

- New data sources are integrated
- Major business or seasonal events occur
- Customer behaviour shifts (e.g., post-campaign or crisis)
- A model is retrained

ML Monitoring in Practice: Use Cases

Retail & E-Commerce

Monitoring helps identify:

- Shifts in purchasing behaviour
- Changes in product trends
- Regional sales variations

A model that suggests “winter jackets” during summer due to outdated data is a classic drift issue.

Healthcare

Patient data can change with demographics or disease patterns. Monitoring ensures diagnostic models remain accurate and ethical across populations.

Banking and FinTech

Credit scoring models require drift detection to remain fair and compliant. A drift in income distribution can lead to biased approvals.

ML Monitoring Tools to Consider

Evidently AI

Open-source tool for tracking data quality, performance metrics, and drift detection. Integrates easily into CI/CD pipelines.

WhyLabs

It provides automated anomaly detection and alerts designed for large-scale ML systems.

Fiddler AI

It focuses on explainability and performance tracking and is ideal for regulated industries.

Arize AI

Real-time observability for model predictions, helping with performance degradation and retraining signals.

Prometheus + Grafana

Standard DevOps tools that can be adapted for system and metric monitoring in ML workflows.

Best Practices for Effective ML Monitoring

Design Monitoring from the Start: Make it part of your ML lifecycle, not an afterthought.

Automate Everything: Logging, drift detection, performance tracking—build CI/CD-style pipelines.

Treat Models as Products: Version them, test them, monitor them.

Use Explainability Tools: Understand why a model failed, not just when it failed.

Collaborate Across Teams: ML engineers, data scientists, DevOps, and compliance teams should co-own monitoring.

Conclusion: Monitoring is Your AI Insurance Policy

Building and deploying machine learning models is no longer the most challenging part of AI adoption—maintaining them is. As models face real-world uncertainty, ML monitoring becomes the insurance policy that protects your AI investment. It ensures your systems stay reliable, performant, and aligned with business and ethical goals.

Ignoring ML monitoring means risking silent failures that erode trust, cause financial loss, and invite regulatory penalties. Embracing it means setting up your AI systems to succeed and sustain success.

Protect your AI investment—monitor your models like your business depends on it. Because it does.
