As enterprises adopt AI-driven solutions to enhance productivity and streamline workflows, the need for secure, scalable, and compliant deployment architectures becomes critical. While public AI models offer convenience, they raise significant concerns around data privacy, latency, and control, especially in regulated or high-security environments.

Nexastack provides a platform engineered for deploying Private AI Assistants within isolated environments—whether on-premises, in air-gapped data centres, or across hybrid cloud infrastructures. By integrating advanced MLOps, LLMOps, and agent orchestration capabilities, Nexastack empowers engineering teams to:

- Fine-tune foundation models with domain-specific data
- Deploy containerised AI agents with resource-level control
- Ensure compliance with security and governance policies
- Monitor performance, drift, and usage across the model lifecycle

This blog details the architecture, deployment workflow, and integration strategies for building a private AI assistant with Nexastack, so that technical teams can implement intelligent automation while retaining full ownership of their data and IP.

Business Case & Value Proposition

A private AI assistant addresses core enterprise concerns around data privacy, operational efficiency, and tailored functionality. Cloud-hosted AI services suit some workloads, but a private assistant keeps sensitive data within organisational boundaries, which simplifies compliance with regulations such as GDPR and HIPAA. Nexastack adds further value by automating auto-scaling, which reduces setup time and limits configuration drift as resources scale.

The value proposition includes:

- Better Privacy - Proprietary data stays on premises or within a private cloud, reducing exposure to external security breaches.
- Customisation - AI models can be tailored to sector-specific needs, such as healthcare diagnostics or financial forecasting.
- Cost-efficiency - Right-sizing AI resources and scaling them with Nexastack reduces wasted operational spend.
- Operational Control - Full observability of AI behaviour, infrastructure, and the wider environment enables faster identification of and response to issues.

For instance, a manufacturing company can deploy a private AI assistant to predict equipment failures from sensor-level data, improving uptime and supporting faster decisions without compromising data protection. Nexastack's Infrastructure as Code capabilities streamline provisioning and apply the required security and compliance configurations as the deployment scales.

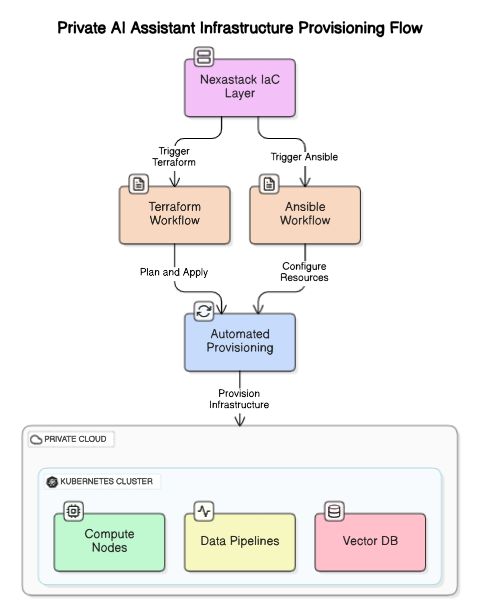

Infrastructure Setup

Setting up a private AI assistant requires a scalable, secure, and robust environment. Nexastack is cloud-native and can be deployed across multiple cloud environments, and it integrates with Terraform, Ansible, and Kubernetes for automation and scalability.

Infrastructure Setup Steps:

- Identify Requirements: Define the computational requirements (for example, GPU capacity) and the storage needed by the data pipelines you plan to build.
- Select Deployment Model: Choose on-premises, private cloud, or hybrid deployment according to your compliance and latency requirements.
- Configure Nexastack: Use Nexastack IaC (Infrastructure as Code) templates to create the necessary Kubernetes clusters, vector databases, APIs, and other components. Nexastack's Git repositories provide auditable workflows that support compliance for operational data and models.
- Provision Compute Environment: Provision GPU nodes suited to inference workloads. To meet SLAs, Nexastack can automate compute allocation through standard Kubernetes orchestration, managing workload complexity across distributed compute in public or private clouds.
- Develop Data Pipelines: Build streaming and batch pipelines to ingest data from its sources, and identify any additional handling requirements for sensitive or secret data sources within your enterprise data.
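To make "resource-level control" concrete, here is a minimal sketch of the kind of Kubernetes Deployment an IaC template might produce for a GPU inference server. It is plain Python emitting a standard Kubernetes object, not Nexastack's template format; the deployment name, image, and memory sizes are hypothetical placeholders, and the `nvidia.com/gpu` resource assumes the NVIDIA device plugin is installed on the cluster.

```python
import json


def inference_deployment(name: str, image: str, replicas: int, gpus: int) -> dict:
    """Build a minimal Kubernetes Deployment manifest for a GPU inference server.

    Name, image, and memory values are hypothetical placeholders.
    `nvidia.com/gpu` is the extended resource exposed by the NVIDIA device plugin.
    """
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "resources": {
                                # GPUs are requested through limits; Kubernetes
                                # uses the limit as the request for GPUs.
                                "limits": {"nvidia.com/gpu": gpus, "memory": "32Gi"},
                                "requests": {"cpu": "4", "memory": "32Gi"},
                            },
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = inference_deployment(
        "assistant-llm", "registry.internal/llm-server:1.0", replicas=2, gpus=1
    )
    # kubectl accepts JSON as well as YAML, e.g. `python gen.py | kubectl apply -f -`
    print(json.dumps(manifest, indent=2))
```

Generating manifests from code (or from Terraform/Helm) keeps GPU and memory limits reviewable in Git alongside the rest of the infrastructure definition.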

Nexastack's unified inference platform combines a Kubernetes-native management interface with AI observability, enabling consistent resource scaling across hybrid environments.

Figure 1: Infrastructure Setup

Security & Privacy Framework

Security and privacy are paramount in any private AI deployment, especially in highly regulated industries. Nexastack includes built-in controls for enterprise-grade security.

Key Security Controls:

- Isolated Compute Environments (Sandboxed Environments): Nexastack lets you deploy sandboxed environments with strict access controls, reducing the risk of unauthorised access.
- Encryption (Data in Transit / Data at Rest): Data is encrypted to the AES-256 standard, and encryption is applied within data pipelines through Nexastack's policy engine.
- Guardrails (Rules): Customisable rules prevent sensitive data from appearing in AI outputs, minimising the risk of information leaks.
- Auditability (Logging Controls): Nexastack provides audit logs of changes to deployed infrastructure components and of environment usage. Reporting on these logs helps demonstrate compliance with standards such as FISMA.
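A guardrail on model output can be as simple as pattern-based redaction applied before a response leaves the assistant. The sketch below illustrates that idea only; the rule names and regular expressions are hypothetical and are not Nexastack's rule format, and regexes like these catch only simple, well-formatted cases.

```python
import re

# Hypothetical guardrail rules: label -> pattern. A production policy engine
# would load rules from configuration and use stronger detectors.
RULES = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    # 13 to 16 digits, optionally separated by spaces or hyphens, ending on a digit.
    "CARD": re.compile(r"\b(?:\d[ -]?){12,15}\d\b"),
}


def apply_guardrails(text: str) -> tuple[str, list[str]]:
    """Redact every match of each rule and report which rules fired."""
    fired = []
    for name, pattern in RULES.items():
        text, count = pattern.subn(f"[REDACTED:{name}]", text)
        if count:
            fired.append(name)
    return text, fired


if __name__ == "__main__":
    safe, hits = apply_guardrails("Card 4111 1111 1111 1111 on file")
    print(safe, hits)
```

Returning the list of fired rules alongside the redacted text lets the same check feed the audit logs described above.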

Privacy Framework:

- Deploy AI models in a private cloud so that data does not leave your organisational boundaries.
- Nexastack allows data residency and data access policies to be enforced as code to ensure data compliance.
- Regularly audit user interactions (for example, through logging) with observability tools to detect anomalous usage as part of a QA framework.
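"Policies as code" means the rules live in versioned data and are evaluated by a small, testable function. The sketch below shows a deny-by-default check combining data residency and role-based access; the region names, roles, dataset names, and the `evaluate` function are hypothetical illustrations, not Nexastack's policy language.

```python
from dataclasses import dataclass

# Hypothetical policy, kept as data so it can be reviewed and versioned in Git.
POLICY = {
    "allowed_regions": {"eu-west-1", "on-prem-dc1"},
    "role_access": {
        "clinician": {"patient_records"},
        "analyst": {"aggregated_metrics"},
    },
}


@dataclass(frozen=True)
class AccessRequest:
    role: str
    dataset: str
    region: str  # where the data would be processed


def evaluate(request: AccessRequest, policy: dict = POLICY) -> tuple[bool, str]:
    """Return (allowed, reason). Anything not explicitly permitted is denied."""
    if request.region not in policy["allowed_regions"]:
        return False, f"region {request.region} violates residency policy"
    permitted = policy["role_access"].get(request.role, set())
    if request.dataset not in permitted:
        return False, f"role {request.role} may not access {request.dataset}"
    return True, "allowed"


if __name__ == "__main__":
    print(evaluate(AccessRequest("analyst", "patient_records", "eu-west-1")))
```

Denying by default means a new role or region has no access until someone adds it to the policy, which keeps every permission change visible in review.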

For example, a healthcare provider can deploy an AI assistant to evaluate patient data quickly and help clinicians plan follow-up actions. Encrypted pipelines and auditable logs support HIPAA compliance by recording who interacts with the data and how it is used.
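Audit logs are most useful when they are tamper-evident. The sketch below uses hash chaining, a generic technique and not necessarily how Nexastack stores its logs: each entry records the SHA-256 hash of the previous entry, so editing any earlier record breaks verification. The `AuditLog` class and its field names are hypothetical.

```python
import hashlib
import json
import time


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only log in which each entry commits to the previous entry's hash."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, actor: str, action: str, resource: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {
            "ts": time.time(),
            "actor": actor,
            "action": action,
            "resource": resource,
            "prev": prev_hash,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; any edited, reordered, or removed entry fails."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True
```

Hash chaining only detects tampering if the latest hash is also stored somewhere the log writer cannot modify (for example, a separate write-once store); otherwise an attacker could rewrite the whole chain.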

Model Integration

After acquiring the AI models, the next step is to integrate them into the infrastructure. Nexastack supports large language models (LLMs) and other generative AI models, which simplifies this step.

Integration Process:

- Model Selection: Based on the use case (for example, a chatbot or predictive analytics), select a model such as Llama, Gemma, or a custom-trained LLM.
- Optimization: Nexastack provides inference pipelines that make it straightforward to load-balance models and run LLMs efficiently, particularly in resource-limited environments.
- Deployment: Use Nexastack's orchestration to deploy models across Kubernetes clusters for high availability.
- Testing: Use Nexastack's AI evaluation agent to measure model performance, including output accuracy and latency.
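The testing step boils down to running a fixed set of prompts and recording quality and speed. Here is a minimal, model-agnostic sketch of such a harness; it is not Nexastack's evaluation agent, and exact-match scoring is a simplifying assumption (real evaluations often use semantic or rubric-based scoring).

```python
import statistics
import time
from typing import Callable


def evaluate_model(generate: Callable[[str], str], cases: list[tuple[str, str]]) -> dict:
    """Score a model on (prompt, expected) pairs: exact-match accuracy plus latency in ms."""
    if not cases:
        raise ValueError("need at least one test case")
    latencies_ms: list[float] = []
    correct = 0
    for prompt, expected in cases:
        start = time.perf_counter()
        output = generate(prompt)
        latencies_ms.append((time.perf_counter() - start) * 1000)
        # Case- and whitespace-insensitive exact match.
        if output.strip().lower() == expected.strip().lower():
            correct += 1
    if len(latencies_ms) >= 2:
        # 19 cut points at 5%, 10%, ..., 95%; the last one is the 95th percentile.
        p95 = statistics.quantiles(latencies_ms, n=20, method="inclusive")[-1]
    else:
        p95 = latencies_ms[0]
    return {
        "accuracy": correct / len(cases),
        "p50_ms": statistics.median(latencies_ms),
        "p95_ms": p95,
    }


if __name__ == "__main__":
    # A stub "model" standing in for a real inference endpoint.
    answers = {"2+2?": "4", "Capital of France?": "Paris"}
    print(evaluate_model(lambda p: answers.get(p, ""), [("2+2?", "4"), ("Capital of France?", "paris")]))
```

Because the harness only needs a `prompt -> text` callable, the same cases can be replayed against each candidate model before promotion.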

Because Nexastack supports modular architectures, users can swap models without changing the underlying infrastructure, which improves flexibility.
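Swapping models without touching the surrounding system usually relies on a narrow interface plus a registry. The sketch below shows that generic adapter pattern; `TextModel`, `EchoModel`, `UpperModel`, and `REGISTRY` are hypothetical stand-ins for real model clients, not Nexastack classes.

```python
from typing import Protocol


class TextModel(Protocol):
    """The only contract calling code relies on."""

    def generate(self, prompt: str) -> str: ...


class EchoModel:
    """Hypothetical stand-in for one backend (e.g. a self-hosted Llama server)."""

    def generate(self, prompt: str) -> str:
        return f"echo: {prompt}"


class UpperModel:
    """Hypothetical stand-in for another backend (e.g. a fine-tuned Gemma)."""

    def generate(self, prompt: str) -> str:
        return prompt.upper()


# Backends are selected by name, typically from configuration.
REGISTRY: dict[str, TextModel] = {"echo": EchoModel(), "upper": UpperModel()}


class Assistant:
    def __init__(self, model_name: str) -> None:
        self.model = REGISTRY[model_name]

    def ask(self, question: str) -> str:
        return self.model.generate(question)


if __name__ == "__main__":
    print(Assistant("echo").ask("hi"), Assistant("upper").ask("hi"))
```

Changing the configured model name is then the only change needed to move traffic to a different backend.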

Enterprise Systems Connection

Connecting the AI assistant to enterprise systems ensures that it can draw on existing data and workflows. Nexastack's integration capabilities reduce friction in this process.

Connection Strategies:

- APIs: Use Nexastack to create RESTful APIs that connect to existing CRM, ERP, and data warehouse systems.
- Microservices: Deploy the AI as a microservice integrated with Nexastack iPaaS (integration platform as a service) for seamless data movement across enterprise applications.
- Data sources: Connect to databases, data lakes, or real-time data streams using Nexastack connectors.
- Legacy systems: Use Nexastack's cloud-native tools to modernize legacy systems and reduce technical debt.
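From the enterprise side, a REST integration is typically an authenticated JSON request. The sketch below builds such a request with Python's standard library without sending it; the endpoint URL, payload fields, and bearer-token scheme are hypothetical, since the actual API shape depends on how the assistant's endpoint is exposed.

```python
import json
import urllib.request

# Hypothetical internal endpoint; replace with your own gateway address.
ASSISTANT_URL = "https://assistant.example.com/v1/query"


def build_query_request(question: str, source_system: str, token: str) -> urllib.request.Request:
    """Build (but do not send) an authenticated JSON POST to the assistant API."""
    payload = json.dumps({"question": question, "source": source_system}).encode("utf-8")
    return urllib.request.Request(
        ASSISTANT_URL,
        data=payload,
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {token}"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_query_request("Stock level for SKU-42?", "erp", "example-token")
    print(req.get_method(), req.full_url)
    # To send it: with urllib.request.urlopen(req, timeout=10) as resp: print(json.load(resp))
```

Keeping request construction separate from sending makes the integration easy to unit-test without network access.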

For instance, a retail company can connect the AI assistant to an ERP system, giving it real-time insight into inventory levels, with Nexastack's API management securing the connection.

Performance Optimization

Performance optimisation ensures that the AI assistant returns accurate responses with low latency. Nexastack provides adaptive scaling and observability tools that are critical to this task.

Strategies for optimization:

- Adaptive Scaling: Nexastack can dynamically adjust the compute allocated to the AI assistant according to the complexity of incoming queries.
- Inference Pipelines: Take advantage of Nexastack's specialised pipelines, designed for AI workloads that require high operational efficiency, to reduce latency.
- Monitoring: Use Nexastack's observability layer to monitor system health and live performance metrics.
- Load Distribution: Nexastack's orchestration distributes workloads evenly across clusters, avoiding bottlenecks.
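One common way to spread inference load evenly is least-connections routing: send each request to the replica with the fewest requests in flight. The sketch below illustrates that technique in isolation; it is not Nexastack's scheduler, and the `Replica` and `LeastLoadedBalancer` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Replica:
    name: str
    in_flight: int = 0  # requests currently being served


class LeastLoadedBalancer:
    """Route each request to the replica with the fewest in-flight requests."""

    def __init__(self, replicas: list[Replica]) -> None:
        if not replicas:
            raise ValueError("need at least one replica")
        self.replicas = replicas

    def acquire(self) -> Replica:
        # min() returns the first replica among ties, giving a stable order.
        replica = min(self.replicas, key=lambda r: r.in_flight)
        replica.in_flight += 1
        return replica

    def release(self, replica: Replica) -> None:
        # Call when the request finishes so the replica's load count drops.
        replica.in_flight -= 1
```

Compared with plain round-robin, this adapts when some requests (for example, long LLM generations) occupy a replica much longer than others.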

For example, a financial institution could optimise its AI assistant for real-time fraud detection, where sub-second responses are required, by using Nexastack's GPU time slicing.

Governance & Compliance

Governance ensures responsible AI use and accountability for regulatory compliance. Nexastack's Governance as Code (GaaC) framework automates compliance processes.

Governance Framework:

- Policy Management - Define and enforce ethical use policies through Nexastack's policy engine.
- Compliance Checks - Nexastack's continuous compliance tools automate pre-deployment audits against regulations and frameworks such as GDPR, HIPAA, and the NIST AI RMF.
- Risk Assessment - Nexastack's AI evaluation agent can check your data and help mitigate bias and operational risk.
- Audit Trails - Log every AI action and every related infrastructure change for regulatory reporting.
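A pre-deployment compliance check can be expressed as a list of named rules run against a deployment description, failing the pipeline if any rule is violated. The sketch below is a generic illustration of that idea; the rule IDs, deployment fields, and approved model identifiers are hypothetical and do not reflect Nexastack's GaaC syntax.

```python
from typing import Callable

# Each check: (rule id, predicate over the deployment spec, failure message).
Check = tuple[str, Callable[[dict], bool], str]

# Hypothetical rules; real ones would map to specific regulatory controls.
CHECKS: list[Check] = [
    ("encryption-at-rest", lambda d: d.get("storage", {}).get("encrypted") is True,
     "storage must be encrypted at rest"),
    ("audit-logging", lambda d: d.get("audit_logging") is True,
     "audit logging must be enabled"),
    ("approved-model", lambda d: d.get("model") in {"llama-3-8b", "gemma-7b"},
     "model is not on the approved list"),
]


def compliance_gate(deployment: dict) -> list[str]:
    """Return violation messages; an empty list means the deployment may proceed."""
    return [f"{rule_id}: {msg}" for rule_id, check, msg in CHECKS if not check(deployment)]


if __name__ == "__main__":
    violations = compliance_gate({"storage": {"encrypted": True}, "model": "unknown"})
    for v in violations:
        print("BLOCKED", v)
```

In a CI pipeline, a non-empty result would fail the job, and the messages double as an audit record of why a deployment was blocked.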

Nexastack's GaaC reduces manual governance overhead, so organisations can stay agile while meeting compliance requirements.

Support & Scaling

Ongoing support and scaling keep the AI assistant available and able to adapt to changing demands. Nexastack's automation reduces the effort involved in both.

Support Solutions:

- Updates - Updates and patches can be rolled out without downtime using Nexastack's rolling update capability.
- Monitoring - Nexastack's sentinel agent sends alerts when risks are detected and can trigger automated actions to manage them.
- Support team - A support team responds to incidents, assisted by Nexastack's insight event management facility.
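Zero-downtime rolling updates rest on two budgets that Kubernetes Deployments expose as `maxSurge` (extra pods allowed above the desired count) and `maxUnavailable` (pods allowed below it). The simulation below is a simplified sketch of how those budgets shape an update, assuming every pod becomes ready instantly; it is not Kubernetes' or Nexastack's controller code, and `rolling_update_plan` is a hypothetical helper.

```python
def rolling_update_plan(replicas: int, max_surge: int, max_unavailable: int) -> list[tuple[int, int]]:
    """Return (old_pods, new_pods) after each step of a simulated rolling update.

    Invariants: total pods never exceed replicas + max_surge, and (with instant
    readiness) available pods never drop below replicas - max_unavailable.
    """
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Scale up new pods as far as the surge budget allows.
        new += min(replicas - new, replicas + max_surge - (old + new))
        # Scale down old pods as far as the availability budget allows.
        old -= min(old, max(0, (old + new) - (replicas - max_unavailable)))
        steps.append((old, new))
    return steps


if __name__ == "__main__":
    for old, new in rolling_update_plan(3, max_surge=1, max_unavailable=0):
        print(f"old={old} new={new}")
```

With `max_unavailable=0`, capacity never drops below the desired replica count, which is what allows patches to roll out without downtime at the cost of temporarily running one extra pod.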

Scaling Solutions:

- Horizontal Scaling - As demand grows, nodes can be added to Kubernetes clusters managed by Nexastack's orchestration.
- Vertical Scaling - For workloads that need more computational power, resource allocations can be increased using Nexastack's scaling tools.
- Multi-Cloud - Nexastack's multi-cloud support lets workloads be distributed across AWS, Azure, and GCP for redundancy.
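Horizontal scaling decisions in Kubernetes follow the Horizontal Pod Autoscaler's documented rule: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipped when the ratio is within a tolerance (0.1 by default) and clamped to the configured minimum and maximum. The function below is a minimal sketch of that rule; using it with an inference metric such as GPU utilisation assumes a custom metrics pipeline, and Nexastack's own scaler may apply additional logic.

```python
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Sketch of the Kubernetes HPA replica calculation."""
    ratio = current_metric / target_metric
    # Within tolerance of the target: leave the replica count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current
    desired = math.ceil(current * ratio)
    # Never go outside the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 replicas at 90% utilisation against a 60% target -> scale out.
    print(desired_replicas(4, 90, 60))
```

The upper bound matters for private deployments, where GPU capacity is fixed and an unbounded scale-out would simply leave pods pending.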

For example, during peak seasons a logistics company can scale its AI assistant to handle increased demand, with Nexastack automatically provisioning additional resources.

Conclusion: Deploying a Private AI Assistant

Deploying a private AI assistant with Nexastack lets enterprises adopt AI while addressing security, compliance, and performance requirements. By automating infrastructure management, governance, and integration, Nexastack shortens the path from concept to production. Each step in the process (business case, setup, security, integration, connectivity, optimisation, governance, and maintenance) can be planned in advance for overall success. With Nexastack's cloud-native capabilities, organisations can deploy trusted, scalable AI assistants tailored to their specific needs.

Next Steps with Private AI Assistant

Talk to our experts about implementing compound AI systems, and learn how industries and departments use agentic workflows and decision intelligence to become decision-centric, applying AI to automate and optimise IT support and operations for greater efficiency and responsiveness.