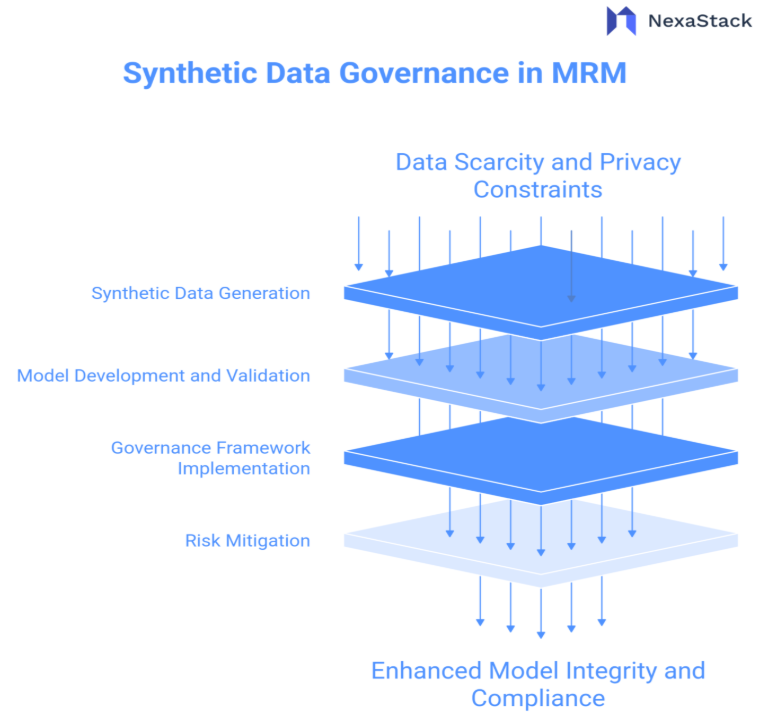

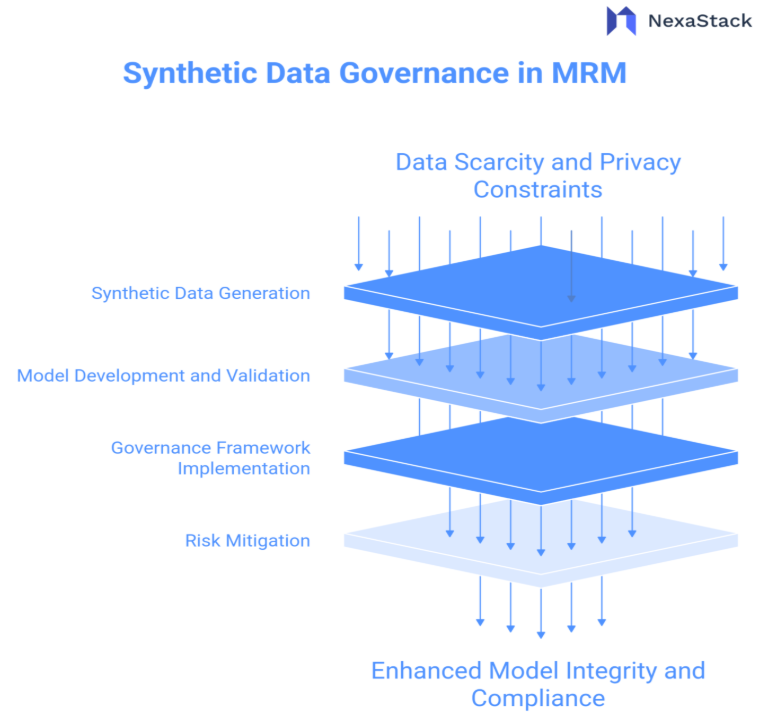

Synthetic data has emerged as a transformative solution to these challenges, opening new pathways for model development and validation. By leveraging advanced generation techniques, including statistical methods, generative AI algorithms such as Generative Adversarial Networks (GANs), and computational simulations, financial institutions can create sophisticated artificial datasets that mimic real-world patterns without exposing client information. This approach enables several critical MRM enhancements: comprehensive stress testing under extreme economic scenarios that have no historical precedent; mitigation of dataset biases through carefully calibrated data generation; and faster model development cycles, because privacy-related constraints on data access are reduced.
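As a minimal sketch of the statistical route, the Python below fits a mean vector and covariance matrix to a small table of real records, then samples new rows from a multivariate normal distribution via a Cholesky factorisation. The synthetic rows reproduce the source means and correlations without copying any individual record. The column meanings (income, debt) and figures are hypothetical, and the joint-normal assumption is a simplification; production generators typically use copulas, GANs, or other models that capture non-Gaussian structure.

```python
import math
import random
import statistics


def fit_gaussian(rows):
    """Estimate column means and the sample covariance matrix from real rows."""
    cols = list(zip(*rows))
    means = [statistics.fmean(c) for c in cols]
    n = len(rows)
    cov = [
        [
            sum((a - means[i]) * (b - means[j]) for a, b in zip(cols[i], cols[j])) / (n - 1)
            for j in range(len(cols))
        ]
        for i in range(len(cols))
    ]
    return means, cov


def cholesky(cov):
    """Return lower-triangular L with L times its transpose equal to cov (cov must be positive definite)."""
    n = len(cov)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(cov[i][i] - s)
            else:
                L[i][j] = (cov[i][j] - s) / L[j][j]
    return L


def sample_synthetic(means, cov, n_rows, seed=0):
    """Draw n_rows synthetic records from a multivariate normal N(means, cov)."""
    rng = random.Random(seed)
    L = cholesky(cov)
    rows = []
    for _ in range(n_rows):
        z = [rng.gauss(0.0, 1.0) for _ in means]  # independent standard normals
        rows.append([m + sum(L[i][k] * z[k] for k in range(i + 1)) for i, m in enumerate(means)])
    return rows


# Hypothetical real records: (annual income in thousands, outstanding debt in thousands)
real = [[50.0, 10.0], [60.0, 14.0], [55.0, 11.0], [70.0, 18.0], [65.0, 15.0]]
means, cov = fit_gaussian(real)
synthetic = sample_synthetic(means, cov, n_rows=1000, seed=42)
```

Correlating independent normals through the Cholesky factor is what carries the income/debt relationship into the synthetic rows; sampling each column separately would lose it.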

Nevertheless, implementing synthetic data introduces complex governance challenges of its own. Without proper oversight and validation frameworks, synthetic data may produce misleading results, potentially amplifying model risk rather than mitigating it. The flexibility that makes synthetic data valuable also creates vulnerabilities: generated data may fail to capture critical real-world dynamics or may introduce new forms of bias. Financial institutions must therefore develop robust governance frameworks that ensure synthetic data applications enhance rather than compromise MRM objectives, balancing innovation with appropriate safeguards to maintain model integrity and regulatory compliance.

Fig: Synthetic Data Governance in MRM

Synthetic Data in Model Simulation

Synthetic data plays a crucial role in enhancing model simulation by addressing key limitations of traditional approaches. Below, we explore its applications in model validation, stress testing, and overcoming data scarcity in greater depth.

Enhancing Model Validation

Limitations of Traditional Validation

Traditional model validation relies heavily on historical datasets, which often fail to capture:

How Synthetic Data Improves Validation

Scenario Expansion

Adversarial Testing

Bias and Fairness Testing

Stress Testing and Sensitivity Analysis

Regulatory Requirements

Financial institutions must comply with stress testing mandates (e.g., Basel III, CCAR, ECB stress tests). However, historical data often contains few or no observations of the extreme scenarios these exercises require.

How Synthetic Data Enhances Stress Testing

Tail-Risk Scenario Generation


Synthetic data can model low-probability, high-impact events (e.g., hyperinflation, sovereign defaults, cyber warfare).
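A minimal sketch of tail-risk generation, assuming a Student's t distribution (a common fat-tailed choice) is an acceptable stand-in for the shock process. Draws are rescaled to unit variance so they are directly comparable with a normal model, and only scenarios beyond a chosen number of standard deviations are retained. The degrees of freedom, the 4-sigma threshold, and the function names are illustrative assumptions, not calibrated values.

```python
import math
import random


def fat_tailed_shock(rng, df):
    """Student-t draw rescaled to unit variance (requires df > 2)."""
    z = rng.gauss(0.0, 1.0)
    chi2 = rng.gammavariate(df / 2.0, 2.0)  # chi-square with df degrees of freedom
    t = z / math.sqrt(chi2 / df)
    return t * math.sqrt((df - 2) / df)


def tail_scenarios(n, k_sigma, df=None, seed=0):
    """Simulate n standardised shocks and keep the adverse ones at or below -k_sigma.

    df=None uses a normal distribution; otherwise a unit-variance Student-t.
    """
    rng = random.Random(seed)
    kept = []
    for _ in range(n):
        shock = rng.gauss(0.0, 1.0) if df is None else fat_tailed_shock(rng, df)
        if shock <= -k_sigma:
            kept.append(shock)
    return kept


normal_tail = tail_scenarios(100_000, k_sigma=4.0, seed=7)
fat_tail = tail_scenarios(100_000, k_sigma=4.0, df=3, seed=7)
```

With the same variance, the fat-tailed generator typically yields far more 4-sigma scenarios than the normal one, which rarely produces any; each retained shock could then be mapped to macroeconomic variables for the stress test.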

Sensitivity Analysis
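One-at-a-time sensitivity analysis is the simplest form: shock each input in isolation and record how far the model output moves. The sketch applies it to a toy logistic probability-of-default (PD) model whose coefficients, input names, and shock sizes are all hypothetical; in practice the shocked inputs would be drawn from synthetic scenario sets.

```python
import math

# Hypothetical toy PD model: coefficients are illustrative, not calibrated.
COEFS = {"dti": 3.0, "unemployment": 0.15, "ltv": 1.5}
INTERCEPT = -4.0


def pd_model(inputs):
    """Logistic probability of default from a linear score."""
    score = INTERCEPT + sum(COEFS[name] * value for name, value in inputs.items())
    return 1.0 / (1.0 + math.exp(-score))


def one_at_a_time(model, base, shocks):
    """Shock each input separately; return the change in model output per input."""
    base_out = model(base)
    changes = {}
    for name, delta in shocks.items():
        shocked = dict(base)
        shocked[name] += delta
        changes[name] = model(shocked) - base_out
    return changes


base = {"dti": 0.35, "unemployment": 5.0, "ltv": 0.8}
shocks = {"dti": 0.10, "unemployment": 3.0, "ltv": 0.20}
sensitivities = one_at_a_time(pd_model, base, shocks)
```

One-at-a-time shocks ignore interactions between inputs; joint synthetic scenarios are needed to capture those.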

Key Challenge: Realism vs. Plausibility

Overcoming Data Scarcity in AI/ML Models

The Data Hunger Problem

AI/ML models (e.g., fraud detection, credit scoring) require large, representative datasets, but real-world data is often:

- Limited (e.g., few observed fraud cases)

- Imbalanced (e.g., 99% non-fraud vs. 1% fraud transactions)

- Restricted (e.g., GDPR limits on personal data usage)

How Synthetic Data Helps

Data Augmentation

Class Balancing
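A simplified, pure-Python sketch in the style of SMOTE (Synthetic Minority Over-sampling Technique): each synthetic minority record is placed at a random point on the line segment between a real minority record and one of its k nearest minority neighbours. The feature values are hypothetical and assumed to be numeric and on comparable scales. This is not the imbalanced-learn implementation, which offers options this sketch omits.

```python
import math
import random


def smote_like(minority, n_new, k=3, seed=0):
    """Create n_new synthetic minority rows by interpolating between near neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # k nearest minority neighbours of `base`, excluding the row itself
        neighbours = sorted(
            (row for row in minority if row is not base),
            key=lambda row: math.dist(row, base),
        )[:k]
        other = rng.choice(neighbours)
        gap = rng.random()  # position along the segment, in [0, 1)
        synthetic.append([b + gap * (o - b) for b, o in zip(base, other)])
    return synthetic


# Hypothetical fraud cases: (transaction amount z-score, merchant risk score)
fraud_rows = [[2.1, 0.8], [2.5, 0.9], [1.9, 0.7], [3.0, 0.95], [2.2, 0.85]]
new_fraud = smote_like(fraud_rows, n_new=20, k=2, seed=3)
```

Because new points lie between existing minority cases, this balances classes without inventing behaviour outside the observed minority region, which is also its limitation for novel fraud patterns.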

Privacy-Preserving Training

Key Risk: Overfitting to Synthetic Artefacts
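A common way to detect this risk is to compare train-on-synthetic, test-on-real (TSTR) performance against a train-on-real, test-on-real (TRTR) baseline: a large gap suggests the model has learned generator artefacts rather than real patterns. The sketch uses a deliberately simple nearest-centroid classifier so that it runs with the standard library alone; the classifier choice, the toy data, and any tolerance for the gap are assumptions for a validation team to set.

```python
import math
import statistics


def fit_centroids(rows, labels):
    """Nearest-centroid classifier: one mean feature vector per class label."""
    by_class = {}
    for row, label in zip(rows, labels):
        by_class.setdefault(label, []).append(row)
    return {label: [statistics.fmean(col) for col in zip(*members)]
            for label, members in by_class.items()}


def predict(centroids, row):
    """Assign the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(row, centroids[label]))


def accuracy(centroids, rows, labels):
    hits = sum(predict(centroids, row) == label for row, label in zip(rows, labels))
    return hits / len(rows)


def tstr_gap(real_train, synth_train, real_test):
    """TRTR accuracy minus TSTR accuracy; each argument is a (rows, labels) pair.

    A large positive gap suggests the model learned generator artefacts.
    """
    trtr = accuracy(fit_centroids(*real_train), *real_test)
    tstr = accuracy(fit_centroids(*synth_train), *real_test)
    return trtr - tstr


real_train = ([[0.0, 0.0], [0.0, 1.0], [5.0, 5.0], [5.0, 6.0]], [0, 0, 1, 1])
real_test = ([[0.0, 0.5], [5.0, 5.5]], [0, 1])
# Hypothetical flawed generator output: features look right, labels are swapped
bad_synth = (real_train[0], [1, 1, 0, 0])
gap = tstr_gap(real_train, bad_synth, real_test)
```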

Fig: Synthetic Data Cycle in Model Simulation

Building a Synthetic Data Blueprint for MRM

A structured approach helps ensure that synthetic data is reliable and compliant.

Data Generation Techniques

| Method | Use Case | Pros | Cons |
|---|---|---|---|
| Statistical Sampling | Credit risk modelling | Simple, interpretable | Limited complexity |
| Generative AI (GANs, VAEs) | Fraud detection | High realism | Computationally intensive |
| Agent-Based Modelling | Market simulations | Captures interactions | Requires domain expertise |
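To make the agent-based row concrete, the sketch below simulates a toy market in which fundamentalist agents buy when the price is below a reference value, chartist agents extrapolate the last price move, and a noise term stands in for other traders. Prices emerge from these interactions rather than from a fitted distribution. Every parameter (agent counts, price impact, noise level, the reference value of 100) is a hypothetical illustration, not a calibrated market model.

```python
import random


def simulate_market(n_steps, start_price=80.0, fundamental=100.0,
                    n_fund=50, n_chart=50, impact=0.001, seed=0):
    """Toy agent-based market; returns the simulated price path."""
    rng = random.Random(seed)
    prices = [start_price, start_price]
    for _ in range(n_steps):
        p, prev = prices[-1], prices[-2]
        demand = 0.0
        for _ in range(n_fund):   # fundamentalists: buy below value, sell above
            demand += (fundamental - p) * rng.uniform(0.5, 1.5)
        for _ in range(n_chart):  # chartists: follow the most recent price change
            demand += (p - prev) * rng.uniform(0.5, 1.5)
        demand += rng.gauss(0.0, 50.0)  # aggregate noise-trader demand
        prices.append(p + impact * demand)  # price moves with net excess demand
    return prices


path = simulate_market(n_steps=500, seed=11)
```

Raising the chartist share or the price impact makes trend-following feedback stronger, which is the kind of interaction effect the table credits this method with capturing; it is also why such models need domain expertise to calibrate.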

Validation Framework
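One widely used fidelity check compares each variable's distribution in the real and synthetic data with the two-sample Kolmogorov-Smirnov (KS) statistic, the largest vertical gap between the two empirical CDFs. The sketch computes the statistic and the standard large-sample critical value c(α)·sqrt((n+m)/(nm)), with c(α) = sqrt(-ln(α/2)/2). It checks marginals only, so joint structure and tail behaviour need separate tests, and the function names are hypothetical.

```python
import math


def ks_statistic(a, b):
    """Two-sample KS statistic: max |F_a(x) - F_b(x)| over all observed x."""
    a, b = sorted(a), sorted(b)
    i = j = 0
    d = 0.0
    while i < len(a) and j < len(b):
        x = min(a[i], b[j])
        # advance past every value <= x so i/len(a) and j/len(b) are the ECDFs at x
        while i < len(a) and a[i] <= x:
            i += 1
        while j < len(b) and b[j] <= x:
            j += 1
        d = max(d, abs(i / len(a) - j / len(b)))
    return d


def ks_critical(n, m, alpha=0.05):
    """Asymptotic critical value; reject 'same distribution' if D exceeds it."""
    c = math.sqrt(-math.log(alpha / 2) / 2)
    return c * math.sqrt((n + m) / (n * m))


def marginal_fidelity(real_col, synth_col, alpha=0.05):
    """Return (D, critical value, passes) for one variable."""
    d = ks_statistic(real_col, synth_col)
    crit = ks_critical(len(real_col), len(synth_col), alpha)
    return d, crit, d <= crit
```

The asymptotic critical value is unreliable for very small samples, where an exact test is preferable.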

Synthetic data must undergo:

Governance and Documentation
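As a minimal sketch of the documentation side, the record below captures what an auditor would typically need to reproduce or challenge a synthetic dataset: the generator and its parameters, the random seed, a fingerprint of the source extract, and validation outcomes. The `SyntheticDatasetRecord` type and its field names are hypothetical, not a regulatory template. The SHA-256 fingerprint lets reviewers confirm which extract was used without storing client data in the log.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass
class SyntheticDatasetRecord:
    """Hypothetical audit-trail entry for one synthetic dataset release."""
    dataset_id: str
    generator: str                 # free-text label for the generation method
    generator_params: dict
    random_seed: int
    source_fingerprint: str        # hash of the real extract, not the data itself
    validation: dict = field(default_factory=dict)
    approved_by: str = ""


def fingerprint(rows):
    """SHA-256 over a canonical JSON encoding of the source rows."""
    payload = json.dumps(rows, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def to_audit_json(record):
    """Serialise the record as JSON for an audit log."""
    return json.dumps(asdict(record), sort_keys=True)


source_rows = [[50.0, 10.0], [60.0, 14.0]]  # hypothetical extract
record = SyntheticDatasetRecord(
    dataset_id="synth-credit-001",
    generator="multivariate_normal",
    generator_params={"n_rows": 1000},
    random_seed=42,
    source_fingerprint=fingerprint(source_rows),
    validation={"ks_passed": True},
)
```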

Compliance Frameworks and Regulatory Challenges

Alignment with Existing Regulations

| Regulation | Synthetic Data Consideration |
|---|---|
| GDPR | Anonymisation must be irreversible |
| CCPA | Synthetic data is not considered personal if non-inferential |
| Basel III | Synthetic scenarios must be justified |
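A common screening heuristic for the privacy considerations in this table is distance to closest record (DCR): for each synthetic row, measure the distance to the nearest real row and flag near-copies. The sketch assumes numeric features already scaled to comparable units, and the threshold is a hypothetical parameter. A clean DCR result is supporting evidence only; it does not by itself show that anonymisation is irreversible under GDPR.

```python
import math


def distance_to_closest_record(synthetic, real):
    """For each synthetic row, Euclidean distance to its nearest real row (brute force)."""
    return [min(math.dist(s, r) for r in real) for s in synthetic]


def flag_near_copies(synthetic, real, threshold):
    """Indices of synthetic rows that sit suspiciously close to a real record."""
    dcr = distance_to_closest_record(synthetic, real)
    return [i for i, d in enumerate(dcr) if d < threshold]


real_rows = [[0.0, 0.0], [1.0, 1.0], [2.0, 0.5]]        # hypothetical, pre-scaled
synthetic_rows = [[0.0, 0.01], [0.5, 0.6], [2.0, 0.5]]
suspects = flag_near_copies(synthetic_rows, real_rows, threshold=0.05)
```

The brute-force search is quadratic in dataset size; large datasets would need a spatial index.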

Model Risk Governance

Ethical and Bias Risks

- Synthetic data can amplify biases if the source data is skewed.
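One simple way to detect amplification is to compare a group-level outcome gap (here, the demographic-parity gap in approval rates) between the source and synthetic data; a positive difference means the generator has widened the disparity. The group labels, the approval outcome, and the choice of metric are assumptions for illustration; other fairness metrics may suit a given model better.

```python
from collections import defaultdict


def approval_rates(records):
    """records: iterable of (group, approved) pairs -> approval rate per group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [approvals, total]
    for group, approved in records:
        counts[group][0] += int(approved)
        counts[group][1] += 1
    return {g: a / n for g, (a, n) in counts.items()}


def parity_gap(records):
    """Largest difference in approval rate between any two groups."""
    rates = approval_rates(records)
    return max(rates.values()) - min(rates.values())


def bias_amplification(source, synthetic):
    """Positive result: the synthetic data widens the gap seen in the source."""
    return parity_gap(synthetic) - parity_gap(source)


# Hypothetical source data: group A 6/10 approved, group B 5/10
source = [("A", i < 6) for i in range(10)] + [("B", i < 5) for i in range(10)]
# Hypothetical synthetic output: group A 8/10, group B 4/10
synthetic = [("A", i < 8) for i in range(10)] + [("B", i < 4) for i in range(10)]
amplification = bias_amplification(source, synthetic)
```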

Mitigation:

Case Studies: Problem, Solution, and Impact

Case Study 1: Credit Risk Modelling for Rare Economic Shocks

Problem:

A multinational bank struggled to validate its credit risk models for extreme recession scenarios due to insufficient historical data. Traditional backtesting failed to capture potential black swan events, leaving the bank vulnerable to unexpected losses.

Solution:

The bank implemented a synthetic data generation framework using:

Impact:

Case Study 2: Fraud Detection System for a Digital Bank

Problem:

A neobank's AI fraud detection system had a high false-negative rate because:

Solution:

The bank deployed:

Impact:

Future Trends and Recommendations

As synthetic data adoption grows in Model Risk Management (MRM), financial institutions must stay ahead of emerging trends while addressing implementation challenges. Below, we explore key developments and actionable recommendations.

Regulatory Sandboxes for Synthetic Data Testing

Current Landscape:


Regulators (e.g., FCA, MAS, ECB) are establishing "sandbox" environments where firms can test synthetic data applications under supervision.

Recommendations:

- Proactive Engagement – Participate in regulator-led sandboxes to shape future policies.

- Documentation Standards – Maintain detailed records of synthetic data methodologies for audit trails.

- Risk Mitigation Plans – Prepare fallback procedures if synthetic data fails validation.

Impact: Accelerates approval timelines while ensuring compliance.

Fig: Synthetic Data Cycle in Model Simulation