Portable AI lets large language models (LLMs) run across heterogeneous environments, including public clouds, private data centres, edge devices, and offline settings, without extensive rewrites or significant performance loss. At its core, portability rests on cloud-neutral design: an architectural approach in which AI systems remain agnostic to any single provider. This reduces vendor lock-in, helps control costs, and keeps systems adaptable as regulatory, technical, or geopolitical conditions shift.

Key enablers of portability include standardised interfaces, model-agnostic pipelines, and portable execution environments such as WebAssembly (WASM). WASM offers near-native performance inside a security sandbox across browsers, servers, and edge platforms, moving GenAI workloads closer to “build once, run anywhere”. Similarly, model-neutral abstractions, such as interfaces that let one model implementation be swapped for another and standardised data formats, make it easier to keep pace with evolving AI frameworks.
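A model-neutral abstraction can be as small as one interface that application code depends on, plus a registry that picks an implementation from configuration. The sketch below assumes a hypothetical `TextGenerator` protocol and two stub backends (`EchoBackend`, `UppercaseBackend`) standing in for real model runtimes; none of these names come from a specific library.

```python
from typing import Callable, Dict, Protocol


class TextGenerator(Protocol):
    """Provider-agnostic interface: application code depends only on this."""

    def generate(self, prompt: str, max_tokens: int = 64) -> str: ...


class EchoBackend:
    """Hypothetical stand-in for, e.g., a local model runtime."""

    def generate(self, prompt: str, max_tokens: int = 64) -> str:
        return prompt[:max_tokens]


class UppercaseBackend:
    """Hypothetical stand-in for, e.g., a hosted inference endpoint."""

    def generate(self, prompt: str, max_tokens: int = 64) -> str:
        return prompt.upper()[:max_tokens]


# Registry mapping a configuration value to a backend factory.
BACKENDS: Dict[str, Callable[[], TextGenerator]] = {
    "echo": EchoBackend,
    "upper": UppercaseBackend,
}


def build_generator(name: str) -> TextGenerator:
    """Select a backend by name (e.g. read from a config file or env var)."""
    try:
        return BACKENDS[name]()
    except KeyError:
        raise ValueError(f"unknown backend: {name!r}") from None


def summarise(generator: TextGenerator, text: str) -> str:
    """Application logic written once against the interface."""
    return generator.generate(f"Summary: {text}", max_tokens=20)


if __name__ == "__main__":
    for backend_name in ("echo", "upper"):
        print(backend_name, "->", summarise(build_generator(backend_name), "portable AI"))
```

Swapping models then becomes a configuration change rather than a code change, because `summarise` never names a concrete backend.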

By designing for portability from the outset, organisations can deploy LLMs wherever they are needed: to meet regulatory demands, to deliver low-latency inference at the edge, or to optimise costs across clouds. A cloud-neutral architecture helps future-proof AI applications and strengthens resilience, efficiency, and flexibility, changing how and where models run, from public clouds and data centres to on-premises servers and mobile devices.

Key Insights

Making AI portable with cloud-neutral design improves flexibility, scalability, and resilience when running large models across environments.

Seamless Deployment

Run large models on any cloud or on-premises without vendor lock-in.

Scalability Anywhere

Dynamically scale workloads across hybrid and multi-cloud infrastructures.

Resilience and Continuity

Maintain AI service continuity by reducing dependence on a single provider.

Cost Optimisation

Balance workloads across environments for efficient resource utilisation and savings.

What Is Cloud-Neutral AI Design?

Cloud-neutral AI design is an approach to building and deploying AI systems independently of any cloud provider’s platform or proprietary technology. It abstracts away the differences between cloud environments so that workloads, including LLMs, can run across diverse infrastructures with minimal changes.

This design philosophy uses open standards, containerization, orchestration, and abstraction layers to decouple AI workloads from cloud-specific constraints. This allows organisations to avoid vendor lock-in and leverage the best infrastructure for their needs, whether driven by cost, performance, security, or regulatory compliance.

Challenges of Running LLMs Across Environments

Running LLMs portably comes with multiple challenges:

- Resource Intensity and Complexity: LLMs require powerful GPUs or TPUs, specialised drivers, and compatible software stacks that vary by hardware vendor, which makes environment parity hard to achieve.

- Dependency Conflicts: Different models may depend on conflicting library versions, making it difficult to standardise environments.

- Network and Latency: Deploying LLMs near users (at the edge or on-premises) to reduce latency can be complex outside cloud-native ecosystems.

- Security and Compliance: Data privacy laws and security mandates may restrict cloud usage or require data residency, limiting deployment options.

- Operational Overhead: Managing updates, scaling, and monitoring across heterogeneous platforms adds complexity.

Overcoming these requires unified architectures and tooling that operate across diverse infrastructures while simplifying AI practitioners’ workflows.
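One small, concrete piece of such tooling is a parity check for dependency drift: compare a pinned lock of package versions against what a target environment actually has. The sketch below uses the standard library's `importlib.metadata` to inventory the current environment; the lock format (a plain name-to-version dictionary) and the function names are assumptions for illustration.

```python
from importlib import metadata
from typing import Dict, Iterable, List


def installed_versions(packages: Iterable[str]) -> Dict[str, str]:
    """Inventory the current environment using the standard library."""
    found: Dict[str, str] = {}
    for name in packages:
        try:
            found[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            pass  # absent packages are simply left out of the inventory
    return found


def parity_report(required: Dict[str, str], installed: Dict[str, str]) -> List[str]:
    """List differences between a pinned lock and an environment's inventory.

    `required` maps package -> exact pinned version; `installed` is what the
    target environment reports. Returns human-readable problems, sorted by name.
    """
    problems: List[str] = []
    for package, version in sorted(required.items()):
        found = installed.get(package)
        if found is None:
            problems.append(f"missing {package}=={version}")
        elif found != version:
            problems.append(f"{package}: expected {version}, found {found}")
    return problems


if __name__ == "__main__":
    lock = {"pip": "0.0.0"}  # hypothetical pin, chosen only to show a mismatch
    print(parity_report(lock, installed_versions(lock)))
```

Running the same report in each target environment (for example, as a CI step) surfaces conflicts before a model is promoted there.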

Benefits of Cloud-Neutral LLM Deployment

Implementing cloud-neutral design for LLMs offers significant advantages:

- Flexibility and Avoiding Vendor Lock-in: Organisations can choose between cloud providers or private infrastructure without re-architecting models or pipelines.

- Cost Optimisation: Workloads can move to the most cost-effective platform, or use spot and discounted instances on different clouds, without rewrites.

- Improved Latency and Availability: Deploying LLMs closer to users on edge, on-premises, or regional clouds improves responsiveness and resilience.

- Simplified Management: Containerization and orchestration tools standardise deployment, scaling, and monitoring regardless of the underlying infrastructure.

- Enhanced Security & Compliance: Organisations can control where data and models reside to meet regulatory requirements, using private or hybrid clouds as needed.

- Experimentation and Innovation: Developers can test multiple versions or configurations of LLMs in parallel across environments, shortening iteration cycles.

These benefits make cloud-neutral architectures critical for future-proofing AI investments.
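The cost and latency benefits depend on being able to choose a placement at all. As a minimal sketch, assuming each environment advertises a price and a measured latency (the `Target` fields and all numbers below are hypothetical), placement can be a filter on the latency budget followed by a cheapest-first choice:

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Target:
    name: str                  # hypothetical environment label
    cost_per_1k_tokens: float  # illustrative price, same currency for all targets
    p95_latency_ms: float      # measured or estimated latency to users
    available: bool = True


def choose_target(targets: List[Target], latency_budget_ms: float) -> Optional[Target]:
    """Return the cheapest available target within the latency budget, or None."""
    eligible = [
        t for t in targets
        if t.available and t.p95_latency_ms <= latency_budget_ms
    ]
    if not eligible:
        return None
    # Tie-break on latency so equally priced targets favour the faster one.
    return min(eligible, key=lambda t: (t.cost_per_1k_tokens, t.p95_latency_ms))


if __name__ == "__main__":
    fleet = [
        Target("cloud-a", 0.50, 120),
        Target("cloud-b", 0.30, 250),
        Target("on-prem", 0.40, 80),
    ]
    print(choose_target(fleet, latency_budget_ms=150))
```

Because every target runs the same portable artefact, the decision reduces to data; without portability, each option would carry its own migration cost.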

Core Principles of Portable AI Architecture

Designing portable AI systems revolves around several core principles:

- Containerization: Encapsulate AI models and their dependencies in containers (e.g., Docker) to provide consistent runtime environments.

- Orchestration with Kubernetes: Use Kubernetes to automate container deployment, scaling, and management on any cloud or on-premises cluster.

- Abstraction Layers: Implement storage and network abstractions that decouple AI workloads from infrastructure-specific APIs.

- Microservices Design: Decompose AI pipelines into loosely coupled services that can be developed and deployed independently.

- Infrastructure as Code: Automate infrastructure provisioning with declarative tools so environments can be reproduced reliably.

- Open Standards & APIs: Prefer open-source frameworks and open APIs that ensure compatibility and avoid proprietary lock-in.

Together, these principles create a robust foundation for LLM portability.
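The abstraction-layer principle can be illustrated with storage. In the sketch below, pipeline code depends only on a hypothetical `ObjectStore` interface; `LocalFileStore` covers local or mounted filesystems and `InMemoryStore` suits tests. A cloud object-store adapter would implement the same two methods using that provider's SDK, which is not shown here.

```python
import tempfile
from abc import ABC, abstractmethod
from pathlib import Path
from typing import Dict


class ObjectStore(ABC):
    """Minimal storage interface; pipelines depend on this, not on a cloud SDK."""

    @abstractmethod
    def put(self, key: str, data: bytes) -> None: ...

    @abstractmethod
    def get(self, key: str) -> bytes: ...


class LocalFileStore(ObjectStore):
    """Backs the interface with a local or mounted filesystem (e.g. on-prem NFS)."""

    def __init__(self, root: Path) -> None:
        self.root = Path(root)

    def put(self, key: str, data: bytes) -> None:
        path = self.root / key
        path.parent.mkdir(parents=True, exist_ok=True)  # create "folders" in the key
        path.write_bytes(data)

    def get(self, key: str) -> bytes:
        return (self.root / key).read_bytes()


class InMemoryStore(ObjectStore):
    """Test double; a cloud object-store adapter would implement the same methods."""

    def __init__(self) -> None:
        self._objects: Dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]


def save_and_reload(store: ObjectStore, key: str, payload: bytes) -> bytes:
    """A pipeline step written once and reusable with any ObjectStore."""
    store.put(key, payload)
    return store.get(key)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        for store in (InMemoryStore(), LocalFileStore(Path(tmp))):
            result = save_and_reload(store, "models/v1/config.json", b'{"layers": 2}')
            print(type(store).__name__, result)
```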

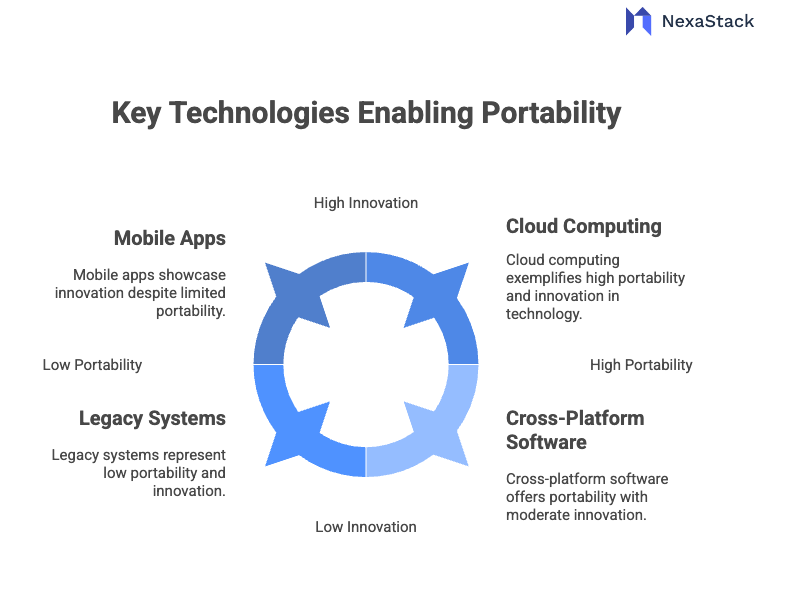

Key Technologies Enabling Portability

Fig: Technologies Enabling Cloud-Neutral AI

Several technologies enable cloud-neutral AI:

- Containers & Docker: Package LLMs together with all required software components.

- Kubernetes: Provides a cloud-agnostic platform for container orchestration, enabling workload portability.

- Model Gateways/Serving Layers: Serving frameworks such as KFServing (now KServe) or BentoML expose standard APIs for model serving across environments.

- Storage Abstraction: Tools that unify access to data storage (object, block, or file systems) regardless of location.

- CI/CD Pipelines: Automate model testing, deployment, and rollback to maintain consistency across clouds.

- Edge Computing Platforms: Enable deployment of LLMs on edge devices or local servers to reduce latency.

- Cloud-Native AI Toolkits: Frameworks such as Kubeflow help manage ML workflows on Kubernetes clusters in any cloud or on-premises.

These technologies collectively lower barriers to running LLMs anywhere.
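The core idea behind a model gateway is that clients speak one request format and only the gateway knows each backend's format. The sketch below is not the API of KServe, BentoML, or any provider; `InferenceRequest`, the two adapters, and their payload shapes are hypothetical stand-ins.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass(frozen=True)
class InferenceRequest:
    """Provider-neutral request shape exposed by the gateway."""
    model: str
    prompt: str
    max_tokens: int = 128
    temperature: float = 0.0


# Each adapter translates the neutral request into one backend's (hypothetical) payload.
def to_backend_a(req: InferenceRequest) -> Dict[str, Any]:
    return {
        "model_id": req.model,
        "input": req.prompt,
        "params": {"max_new_tokens": req.max_tokens, "temperature": req.temperature},
    }


def to_backend_b(req: InferenceRequest) -> Dict[str, Any]:
    return {
        "model": req.model,
        "messages": [{"role": "user", "content": req.prompt}],
        "max_tokens": req.max_tokens,
        "temperature": req.temperature,
    }


ADAPTERS: Dict[str, Callable[[InferenceRequest], Dict[str, Any]]] = {
    "backend-a": to_backend_a,
    "backend-b": to_backend_b,
}


def route(req: InferenceRequest, backend: str) -> Dict[str, Any]:
    """Build the backend-specific payload; clients never see these formats."""
    if backend not in ADAPTERS:
        raise ValueError(f"no adapter for {backend!r}")
    return ADAPTERS[backend](req)


if __name__ == "__main__":
    request = InferenceRequest(model="my-llm", prompt="Hello", max_tokens=16)
    for name in ADAPTERS:
        print(name, route(request, name))
```

Adding a new environment then means writing one adapter, while every client stays unchanged.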

Multi-Cloud vs. Cloud-Neutral: What’s the Difference?

It’s essential to distinguish multi-cloud from cloud-neutral:

- Multi-Cloud refers to using multiple cloud providers simultaneously. It can provide flexibility and disaster recovery, but applications may still be tied to cloud-specific APIs and need custom integration for each environment.

- Cloud-Neutral abstracts away cloud-specific dependencies, enabling applications to run unchanged on any cloud or private infrastructure.

Cloud-neutrality is the broader, more future-proof strategy: it aims for true portability rather than simply holding accounts on multiple clouds.
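“Running unchanged” usually means that nothing environment-specific is hard-coded: endpoints, storage locations, and regions arrive as configuration. As a minimal sketch, assuming hypothetical variable names `MODEL_ENDPOINT`, `STORAGE_URI`, and `REGION` (on Kubernetes these could be populated from a ConfigMap), the same code can start on any cloud, on-premises, or at the edge:

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class RuntimeConfig:
    model_endpoint: str
    storage_uri: str
    region: str


def load_config(env: Optional[Mapping[str, str]] = None) -> RuntimeConfig:
    """Read deployment-specific values from the environment.

    The variable names are hypothetical conventions; each deployment target
    sets them, so the application code itself never names a provider.
    """
    env = os.environ if env is None else env
    missing = [k for k in ("MODEL_ENDPOINT", "STORAGE_URI") if k not in env]
    if missing:
        raise KeyError(f"missing configuration: {', '.join(missing)}")
    return RuntimeConfig(
        model_endpoint=env["MODEL_ENDPOINT"],
        storage_uri=env["STORAGE_URI"],
        region=env.get("REGION", "unspecified"),
    )


if __name__ == "__main__":
    print(load_config({"MODEL_ENDPOINT": "http://llm.internal:8080", "STORAGE_URI": "file:///data"}))
```

Failing fast on missing values keeps a misconfigured deployment from silently falling back to one provider's defaults.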

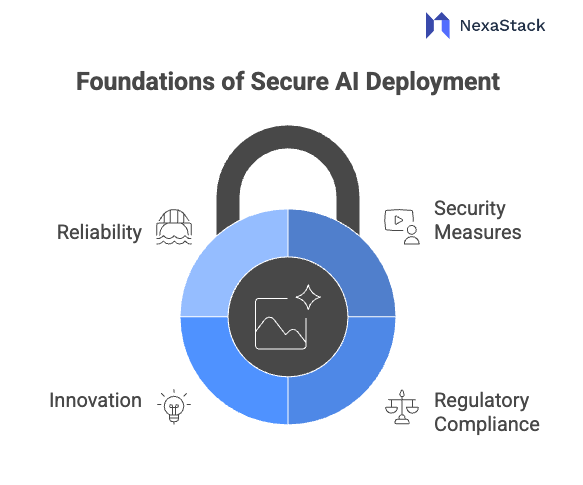

Security & Compliance in Portable AI

Security and regulatory compliance are paramount for LLM deployments:

- Data Residency: Cloud-neutral architectures allow organisations to select infrastructure that meets data sovereignty requirements.

- Isolation: Containers and Kubernetes namespaces separate workloads; namespaces are a logical boundary, so stronger isolation also relies on network policies and role-based access control.

- Encryption: Encryption in transit and at rest should be standard across platforms.

- Access Controls: Unified identity and access management can span clouds and on-premises environments.

- Audit Logging: Consistent telemetry and logging help meet compliance audits.

- Vulnerability Management: Regular patching and image scanning help maintain the compliance posture.

Ensuring cloud-neutral AI meets security mandates requires embedding these practices throughout the stack.
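Data residency and audit logging can be combined in a single placement check. The sketch below assumes a hypothetical policy table mapping data classifications to permitted regions; unknown classifications are denied by default, and every decision is written as a structured log line for later audits.

```python
import json
import logging
from datetime import datetime, timezone
from typing import Dict, FrozenSet

audit_log = logging.getLogger("audit")

# Hypothetical policy: data classification -> regions where it may be processed.
RESIDENCY_POLICY: Dict[str, FrozenSet[str]] = {
    "public": frozenset({"eu-west", "us-east", "on-prem"}),
    "eu-personal": frozenset({"eu-west", "on-prem"}),
    "patient-records": frozenset({"on-prem"}),
}


def authorise_placement(data_class: str, target_region: str, actor: str) -> bool:
    """Allow a deployment only if the region is permitted; log every decision."""
    # Unknown classifications map to an empty set, i.e. deny by default.
    allowed = target_region in RESIDENCY_POLICY.get(data_class, frozenset())
    audit_log.info(json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "actor": actor,
        "data_class": data_class,
        "target_region": target_region,
        "decision": "allow" if allowed else "deny",
    }))
    return allowed


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    authorise_placement("patient-records", "us-east", actor="ci-pipeline")
```

Running the same check in every environment's deployment pipeline keeps the policy consistent regardless of which cloud or cluster is the target.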

Fig: Foundation of Secure AI Deployment

Use Cases: LLMs in Edge, On-Prem & Multi-Cloud

Practical scenarios show the power of portable LLMs:

- Edge Deployments: Running LLMs on edge devices reduces latency for applications such as real-time customer support, manufacturing analytics, or smart retail.

- On-Premises for Sensitive Data: Industries such as finance and healthcare deploy LLMs on-premises to comply with strict privacy laws.

- Multi-Cloud for Availability & Cost: Distribute inference workloads dynamically to optimise costs, absorb capacity spikes, or maintain availability.

- Hybrid AI Pipelines: Train models in a public cloud but serve them at the edge or on-premises for latency-sensitive applications.

- Disaster Recovery & Resilience: Shift workloads between clouds quickly in case of outages or geopolitical restrictions.

These examples highlight why AI portability is becoming essential.
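The disaster-recovery case reduces to trying environments in priority order. In the sketch below, each target is a hypothetical `(name, call)` pair where `call` stands in for a client bound to one environment; only connection and timeout failures (both `OSError` subclasses in Python) trigger failover, so genuine bugs still surface.

```python
from typing import Callable, List, Sequence, Tuple


class AllTargetsFailed(RuntimeError):
    """Raised when no environment could serve the request."""


def generate_with_failover(
    prompt: str,
    targets: Sequence[Tuple[str, Callable[[str], str]]],
) -> Tuple[str, str]:
    """Try each (name, call) in priority order; return (target name, output)."""
    errors: List[str] = []
    for name, call in targets:
        try:
            return name, call(prompt)
        except OSError as exc:  # covers ConnectionError and TimeoutError
            errors.append(f"{name}: {exc}")
    raise AllTargetsFailed("; ".join(errors))


if __name__ == "__main__":
    def primary_cloud(prompt: str) -> str:
        raise ConnectionError("region outage")  # simulated outage

    def on_prem(prompt: str) -> str:
        return f"[on-prem] {prompt}"  # placeholder for a real model call

    print(generate_with_failover("status?", [("cloud-a", primary_cloud), ("on-prem", on_prem)]))
```

This only works if every target serves the same portable model behind the same interface, which is the point of the cloud-neutral approach.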

Implementation Best Practices

To implement cloud-neutral AI effectively:

- Start by Containerising Models: Use Docker to encapsulate models and their dependencies.

- Adopt Kubernetes Early: Standardise deployment on Kubernetes regardless of infrastructure.

- Use Open-Source, Standardised Toolkits: Kubeflow, KServe (formerly KFServing), MLflow, and similar tools improve portability and workflow management.

- Automate Infrastructure and Deployment: Use Infrastructure as Code and CI/CD pipelines to reduce errors and configuration drift.

- Prioritise Observability: Implement monitoring and logging to track model performance and operational health across environments.

- Enforce Security from Development Onwards: Build security and compliance checks into CI/CD and runtime.

- Engage Cross-Functional Teams: Bring together AI/ML engineers, cloud engineers, security, and compliance from the outset.

Iterative prototyping and testing on multiple platforms build confidence before scaling.
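Comparing platforms during prototyping requires measurements tagged by environment. The sketch below assumes a hypothetical `observed` decorator and an in-process `LATENCIES_MS` store; a real deployment would export these values to its monitoring system rather than keep them in memory.

```python
import time
from collections import defaultdict
from functools import wraps
from typing import Callable, DefaultDict, List, TypeVar

T = TypeVar("T")

# In-process metric store keyed by environment label (illustrative only).
LATENCIES_MS: DefaultDict[str, List[float]] = defaultdict(list)


def observed(environment: str) -> Callable[[Callable[..., T]], Callable[..., T]]:
    """Decorator recording the wall-clock latency of each call under `environment`."""
    def decorator(func: Callable[..., T]) -> Callable[..., T]:
        @wraps(func)
        def wrapper(*args, **kwargs) -> T:
            start = time.perf_counter()
            try:
                return func(*args, **kwargs)
            finally:
                # Recorded in `finally`, so failed calls are measured too.
                LATENCIES_MS[environment].append((time.perf_counter() - start) * 1000)
        return wrapper
    return decorator


@observed("edge-site-1")  # hypothetical environment label
def infer(prompt: str) -> str:
    return prompt[::-1]  # placeholder for a real model call
```

Using the same labels and metric names everywhere is what makes results from different clouds and edge sites comparable.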

Conclusion: Future-Proofing LLM Deployments

As LLMs become integral to business and innovation, cloud-neutral AI design offers a strategic advantage. It frees organisations from vendor lock-in, optimises costs, strengthens security and compliance, and improves user experience by enabling deployment anywhere: across clouds, at the edge, or in private data centres.

By embracing containerization, Kubernetes, open standards, and unified tooling, organisations can build portable LLM architectures that withstand changing technology landscapes and business needs. This flexibility ensures AI investments continue delivering value well into the future.
