Strategic Value: Assessing the Business Impact of Generative AI

Before implementing any AI solution, businesses must assess its value. Llama 2 and Stable Diffusion XL can both contribute to higher productivity, lower costs, and better customer experiences. For example, Llama 2 can support content creation, customer service, and data processing and analysis, reducing operational expenses and freeing staff to focus on higher-level work. Stable Diffusion XL, meanwhile, can produce high-quality images for marketing, product design, and business simulations, opening up new revenue-generating activities.

The value lies in their adaptability. Businesses can fine-tune Llama 2 for specific domains, such as legal document analysis or financial forecasting, while Stable Diffusion XL can create tailored visual content for branding or training simulations. Industry reports suggest that companies integrating LLMs and generative models see efficiency gains of up to 30% and higher customer satisfaction. However, the realized value also depends on alignment with business goals, data availability, and innovation potential, which makes a thorough assessment critical.

Infrastructure Needs: Technical Requirements for Scalable Deployment

Implementing Llama 2 with NexaStack and Stable Diffusion XL requires a robust technical foundation. Key requirements include:

- Computational Resources: Llama 2, released in 7B, 13B, and 70B parameter variants, demands significant computing power. Organizations need high-performance GPUs such as the NVIDIA H100 or A100, whether on premises or through cloud platforms like AWS, Azure, or Google Cloud. NexaStack can simplify this with GPU clusters for scaling, private clouds for AI workloads, and AI-optimized compute instances.

- Data: High-quality datasets for training, fine-tuning, and inference are essential. Companies must maintain data governance, protection, and availability across sources that may include internal databases, open datasets, data feeds, and IoT sensors. DataOps principles, such as those described in XenonStack's guides, can help make data pipelines reliable, secure, and compliant with regulatory requirements.

- Software Stack: Implementation relies on libraries such as Hugging Face Transformers, PyTorch, and TensorFlow, along with Diffusers for Stable Diffusion XL. NexaStack also provides pre-built connectors for these technologies, saving time that would otherwise be spent on integration.

- Networking and Storage: Model weights, training data, and inference requests all involve moving and processing large volumes of data, so low-latency networks and elastic storage play a significant role. To balance cost, performance, and data locality, enterprises should consider hybrid cloud, edge computing, and object storage.

- Domain Expertise: The ideal team combines specialists in ML/NLP, computer vision, and DevOps. NexaStack's consulting services can also provide certified consultants who speak the language of both AI models and the enterprise, bridging the two and offering continuous support.

These requirements ensure that the infrastructure can support dynamic model adaptation, allowing Llama 2 and Stable Diffusion XL to evolve with changing business demands and maintain high performance under varying workloads.
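To make the compute requirement concrete, a common rule of thumb is that model weights alone occupy roughly parameter count × bytes per parameter. The sketch below applies that arithmetic to the nominal Llama 2 sizes (7B, 13B, 70B) at a few precisions; the helper name is hypothetical, and the estimate deliberately ignores activations, the KV cache, optimizer state, and framework overhead, so real deployments need extra headroom.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Ignores activations, KV cache, optimizer state and framework overhead.

BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,  # bf16 has the same size
    "int8": 1.0,
    "int4": 0.5,
}

# Nominal Llama 2 sizes; exact parameter counts differ slightly.
LLAMA2_SIZES = {"7B": 7e9, "13B": 13e9, "70B": 70e9}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, params in LLAMA2_SIZES.items():
        row = ", ".join(
            f"{p}: {weight_memory_gb(params, p):.1f} GB" for p in BYTES_PER_PARAM
        )
        print(f"Llama 2 {name} -> {row}")
```

At fp16 the 70B model needs roughly 140 GB for its weights alone, more than a single 80 GB A100 or H100 holds, which is why the largest variant is usually sharded across several GPUs or quantized.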

Implementation Strategy

A structured implementation strategy is key to maximizing the potential of Llama 2 and Stable Diffusion XL. The process can be broken down into several phases:

- Pilot Projects: Begin with small-scale pilots to test specific use cases, such as generating marketing copy with Llama 2 or creating product visuals with Stable Diffusion XL. This approach minimizes risk, validates assumptions, and provides valuable insights into model performance and user acceptance.

- Data Preparation and Integration: Clean, annotate, and integrate data into NexaStack's ecosystem. Use tools like LlamaIndex for knowledge management and retrieval-augmented generation (RAG) to enhance Llama 2's accuracy and relevance. Ensure data quality through validation, deduplication, and enrichment processes.

- Model Fine-Tuning: To make Llama 2 and Stable Diffusion XL work for your specific needs, you'll want to adapt them to industry-specific tasks, such as legal document analysis or visuals for retail. Parameter-efficient fine-tuning (PEFT) methods are your go-to techniques here, in particular low-rank adaptation (LoRA) and its quantized variant, QLoRA. They train only a small set of added weights while the original model stays frozen, which cuts the heavy lifting your hardware has to do while typically retaining most of the quality of full fine-tuning. That makes them a great fit if you're working with limited resources or simply want to be more efficient.

- Deployment: Once your models are ready, it's time to put them to work. You can roll them out on cloud platforms, keep them on your own servers, or even run them on edge devices, depending on what matters most to you: response speed, security, or budget. NexaStack's microservice architecture is a real win here. It lets you scale up or down, handles hiccups gracefully, and helps your operations grow smoothly without breaking a sweat.

- Monitoring and Feedback: The job doesn't stop once you deploy. You'll want to track the models' accuracy, check them for bias, measure their efficiency, and find out whether users are happy with the results. Gather feedback from everyone involved, including your team and your customers, and use it to fine-tune the models. This helps ensure they hit your business goals and can pivot as your needs change. It's all about staying on top of things.

This strategy leverages NexaStack's emphasis on agility, innovation, and user-centric design, enabling businesses to iterate quickly and adapt to new challenges while minimizing downtime and maximizing ROI.
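The LoRA technique named in the fine-tuning step above can be shown in a few lines. Rather than updating a frozen weight matrix W of shape d × k, LoRA trains two small matrices, B (d × r) and A (r × k), and uses W + (α/r)·BA as the effective weight. The pure-Python sketch below uses toy values chosen only for illustration; the helper names are hypothetical, and real projects would typically rely on a library such as Hugging Face PEFT operating on GPU tensors.

```python
# Minimal LoRA sketch in pure Python (toy sizes; real models use GPU tensors).
# W stays frozen; only A (r x k) and B (d x r) would be trained.


def matmul(x: list[list[float]], y: list[list[float]]) -> list[list[float]]:
    """Plain matrix product of x (n x m) and y (m x p)."""
    return [
        [sum(x[i][t] * y[t][j] for t in range(len(y))) for j in range(len(y[0]))]
        for i in range(len(x))
    ]


def lora_merge(W, A, B, alpha: float):
    """Return W + (alpha / r) * (B @ A), the effective weight after adaptation."""
    r = len(A)  # LoRA rank = number of rows in A
    scale = alpha / r
    delta = matmul(B, A)
    return [
        [W[i][j] + scale * delta[i][j] for j in range(len(W[0]))]
        for i in range(len(W))
    ]


def trainable_params(d: int, k: int, r: int) -> tuple[int, int]:
    """Parameters updated by full fine-tuning vs. by LoRA for one d x k matrix."""
    return d * k, r * (d + k)


if __name__ == "__main__":
    W = [[1.0, 0.0, 0.0],
         [0.0, 1.0, 0.0]]   # frozen 2 x 3 weight
    A = [[0.5, 0.5, 0.5]]   # rank r = 1, shape 1 x 3
    # LoRA initializes B to zeros; nonzero values here just show an update.
    B = [[2.0], [0.0]]      # shape 2 x 1
    print(lora_merge(W, A, B, alpha=1.0))
    # A 4096 x 4096 projection (Llama 2 7B hidden size) at rank 8:
    print(trainable_params(4096, 4096, 8))
```

For a single 4096 × 4096 projection at rank 8, LoRA trains 65,536 parameters instead of 16,777,216, a 256× reduction for that matrix. Because B starts at zero, the adapted model initially behaves exactly like the base model.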

Efficiency Gains: Optimizing Performance for Real-World Demands

Performance optimization helps Llama 2 and Stable Diffusion XL deliver fast, consistent results. Key strategies include:

- Model Compression: Use techniques like pruning and quantization to reduce model size with minimal loss of accuracy. QLoRA, for instance, loads Llama 2's frozen base weights in 4-bit precision during fine-tuning, so even large variants can be adapted on far less GPU memory.

- Efficient Training: Use parallelism to spread training across multiple GPUs: data parallelism speeds up training on large datasets, while tensor and pipeline parallelism split models that are too large for a single GPU. NexaStack's infrastructure supports these optimizations, reducing time-to-market.

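- Inference Acceleration: Keep memory usage low and response times short. Llama 2's 70B model uses grouped-query attention (GQA), in which several query heads share each key-value head, shrinking the memory needed to cache past tokens. Paged optimizers, introduced with QLoRA, address a related problem on the training side by absorbing memory spikes during fine-tuning. For Stable Diffusion XL, dynamic batching groups concurrent requests to raise image-generation throughput.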

- Continuous Evaluation: Regularly benchmark models against established industry benchmarks, such as those used in Llama 3 evaluations, to identify areas for improvement. This includes testing for coherence, relevance, and speed.

By focusing on these areas, businesses can achieve high performance even as model complexity increases.
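The memory benefit of grouped-query attention can be checked with simple arithmetic: the key-value (KV) cache stores one key vector and one value vector per layer, per KV head, per token. The sketch plugs in Llama 2 70B's published configuration (80 layers, 64 query heads sharing 8 KV heads, head dimension 128, 4,096-token context) and compares it with a hypothetical multi-head layout that keeps all 64 KV heads; it assumes an fp16 cache and counts nothing but the cache.

```python
# KV-cache size estimate:
#   2 (keys and values) x layers x kv_heads x head_dim x tokens x batch x bytes.
# Model weights and activations are not included.


def kv_cache_bytes(layers: int, kv_heads: int, head_dim: int,
                   seq_len: int, batch: int = 1, bytes_per_elem: int = 2) -> int:
    return 2 * layers * kv_heads * head_dim * seq_len * batch * bytes_per_elem


GIB = 1024 ** 3

if __name__ == "__main__":
    # Llama 2 70B with GQA: 8 KV heads shared by 64 query heads, fp16 cache.
    gqa = kv_cache_bytes(layers=80, kv_heads=8, head_dim=128, seq_len=4096)
    # Hypothetical full multi-head attention: one KV head per query head.
    mha = kv_cache_bytes(layers=80, kv_heads=64, head_dim=128, seq_len=4096)
    print(f"GQA: {gqa / GIB:.2f} GiB, MHA: {mha / GIB:.2f} GiB, ratio {mha // gqa}x")
```

For a single 4,096-token sequence this gives 1.25 GiB with GQA versus 10 GiB without it, an 8× saving that scales linearly with batch size, which is what lets a server keep more concurrent requests in memory.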

Responsible AI: Establishing a Robust Governance Framework

AI implementation without proper governance can lead to ethical, legal, and operational risks. A robust governance framework for Llama 2 and Stable Diffusion XL should include the following:

- Ethical Guidelines: Establish principles to prevent bias, ensure fairness, and protect privacy. For example, the data used to fine-tune Llama 2 should be audited for diversity and potential biases, while Stable Diffusion XL's outputs should be reviewed for appropriateness.

- Compliance: Adhere to regulations such as the EU's Artificial Intelligence Act and relevant industry-specific standards. This includes careful attention to data protection laws and intellectual property rights, particularly when generating content.

- Accountability: Assign roles and responsibilities for model development, deployment, and monitoring. NexaStack's governance tools can automate compliance checks and provide audit trails.

- Safety Mechanisms: Implement safety filters, such as Meta's Llama Guard model, which classifies prompts and responses against a safety policy, to detect and mitigate harmful outputs. Regular red-team testing can identify vulnerabilities in both text and image generation.

This framework ensures that AI deployments are trustworthy, transparent, and aligned with organizational values.
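The safety-filter and audit-trail ideas above amount to a gate around generation: classify the prompt, generate, classify the response, and record each decision. The sketch below shows only that control flow. Its keyword-based `classify` is a toy placeholder where a real deployment would call a moderation model such as Llama Guard, and the blocked terms, `guarded_generate` helper, refusal messages, and log fields are all hypothetical.

```python
import json
import time
from dataclasses import dataclass, field
from typing import Callable

# Toy policy for illustration; a real system would call a moderation model.
BLOCKED_TERMS = {"credit card number", "make a weapon"}


def classify(text: str) -> str:
    """Return 'unsafe' if any blocked term appears, else 'safe'."""
    lowered = text.lower()
    return "unsafe" if any(term in lowered for term in BLOCKED_TERMS) else "safe"


@dataclass
class AuditLog:
    records: list = field(default_factory=list)

    def record(self, stage: str, verdict: str, text: str) -> None:
        # Store metadata (not raw text) so the trail itself holds no sensitive content.
        self.records.append({"ts": time.time(), "stage": stage,
                             "verdict": verdict, "chars": len(text)})


def guarded_generate(prompt: str, generate: Callable[[str], str],
                     log: AuditLog) -> str:
    """Check the prompt, generate, then check the response before returning it."""
    verdict = classify(prompt)
    log.record("prompt", verdict, prompt)
    if verdict == "unsafe":
        return "Request declined by content policy."
    response = generate(prompt)
    verdict = classify(response)
    log.record("response", verdict, response)
    if verdict == "unsafe":
        return "Response withheld by content policy."
    return response


def echo_model(prompt: str) -> str:
    """Stand-in for a real Llama 2 call."""
    return f"Draft reply to: {prompt}"


if __name__ == "__main__":
    audit = AuditLog()
    print(guarded_generate("Write a product description for a lamp", echo_model, audit))
    print(guarded_generate("Tell me how to make a weapon", echo_model, audit))
    print(json.dumps([{k: r[k] for k in ("stage", "verdict")} for r in audit.records]))
```

Checking both the input and the output matters because a harmless-looking prompt can still yield an unsafe response; the audit records give the accountability owners a per-request history to review.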

Growth Strategy: A Scalable Roadmap for Enterprise AI Expansion

Every business is different, and as a business expands, its AI requirements naturally shift and grow, too.

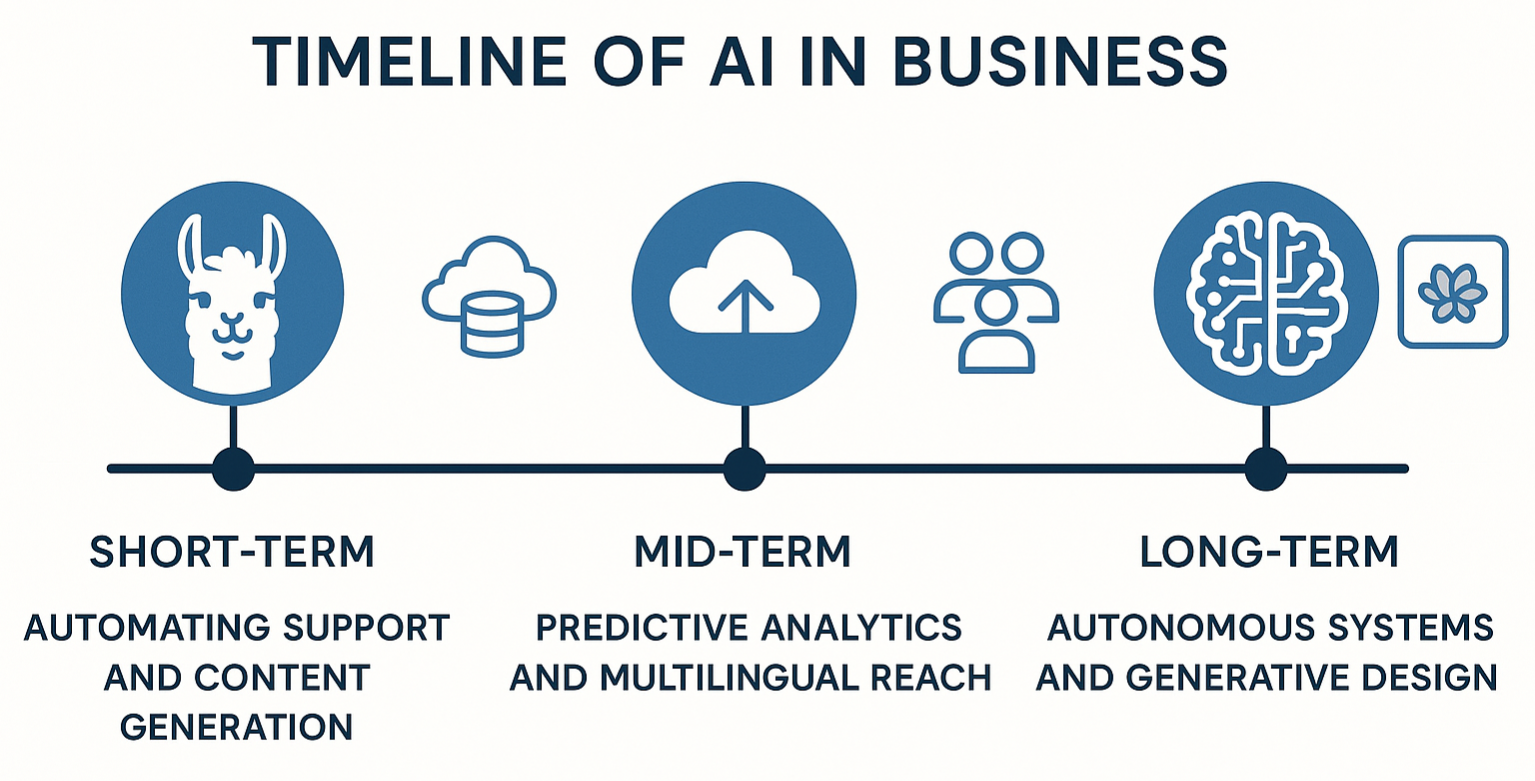

Fig 2. Roadmap for scaling AI with Llama 2 and NexaStack.

Here’s a practical roadmap for scaling Llama 2 and Stable Diffusion XL using NexaStack, broken down into manageable stages:

- Short-Term (0-12 Months): Start with the basics. Focus on the use cases that can make a real difference right away, like automating customer support, whipping up marketing content quickly, or creating product visuals that grab users' attention. You'll want to tune your infrastructure for your current workloads, set up solid governance rules to keep things in check, and make sure your team is trained on the best ways to use these tools. It's about building a strong foundation.

- Mid-Term (1-3 Years): As you get more comfortable, it's time to think bigger. You might start exploring new areas like simulating product designs, reaching customers in different languages, or using predictive analytics to stay ahead of the game. This is also when you'll need to upgrade your tech: more powerful computing resources, better data handling, and maybe even blending AI with human input to boost productivity. It's all about expanding your horizons while keeping things running smoothly.

- Long-Term (3-5 Years): Looking further ahead, you can push your boundaries with cutting-edge capabilities like real-time interactions that combine text and visuals, autonomous decision-making systems, or AI-generated designs. You'll also want to tap into emerging technology, such as next-gen GPUs, quantum computing, or improved AI architectures, to keep your competitive edge. It's about future-proofing your business.

This roadmap isn’t just a plan; it’s a way to grow your AI capabilities sustainably, innovating without sacrificing stability.

Real-World Impact: Case Studies Across Retail and Healthcare

Seeing is believing, right? Real-world examples show just how powerful Llama 2 and NexaStack can be. Take a big retail chain, for instance. They used Llama 2 to automate product descriptions and handle customer questions, and guess what? They slashed response times by 40% and saw a jump in sales. Pretty impressive! Then there's a healthcare provider that brought in Stable Diffusion XL to create training visuals for medical staff, which helped staff diagnose more accurately while cutting training costs. These stories aren't just success tales; they're proof that customizing these tools can lead to real, measurable wins, and they can inspire other industries to do the same.

Risk Management

Let’s be real—no AI project is risk-free. There are always bumps in the road, like models that start to drift, data breaches that could happen, or outcomes you didn’t see coming. But don’t worry—there are ways to handle these. Keep your models updated regularly, put strong cybersecurity in place, and have backup plans ready if something goes wrong. NexaStack’s risk assessment tools are a game-changer here—they help you spot potential issues early so you can nip them in the bud and build a more resilient system. It’s all about being prepared.
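One common way to catch the model drift mentioned above is to compare the distribution of a monitored quantity, such as a model confidence score or input length, in recent traffic against a reference window. The sketch computes the population stability index (PSI) over fixed bins. The bin edges, sample data, and 0.2 alert threshold are illustrative assumptions (0.2 is a widely used rule of thumb, not a universal standard), and the function names are hypothetical.

```python
import math
from bisect import bisect_right


def bin_proportions(values: list[float], edges: list[float],
                    eps: float = 1e-6) -> list[float]:
    """Share of values per bin; edges are interior cut points (len(edges)+1 bins)."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    total = len(values)
    # eps keeps empty bins from producing log(0) or division by zero.
    return [max(c / total, eps) for c in counts]


def psi(reference: list[float], current: list[float], edges: list[float]) -> float:
    """Population stability index: sum((cur - ref) * ln(cur / ref)) over bins."""
    ref = bin_proportions(reference, edges)
    cur = bin_proportions(current, edges)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


if __name__ == "__main__":
    edges = [0.25, 0.5, 0.75]  # four confidence-score bins
    reference = [0.1, 0.3, 0.6, 0.8, 0.9, 0.4, 0.7, 0.2]
    stable = [0.15, 0.35, 0.55, 0.85, 0.95, 0.45, 0.65, 0.2]
    shifted = [0.05, 0.1, 0.12, 0.2, 0.22, 0.3, 0.1, 0.15]
    for name, batch in (("stable", stable), ("shifted", shifted)):
        score = psi(reference, batch, edges)
        print(name, round(score, 3), "ALERT" if score > 0.2 else "ok")
```

A PSI near zero means recent traffic looks like the reference window; a large value, as with the shifted batch here, is a signal to investigate and possibly retrain or refresh the model.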

Future Trends

The AI world is buzzing with potential, and some exciting trends are on the horizon: multimodal AI that mixes text, images, and more; federated learning, which trains models without centralizing sensitive data; and a bigger push toward ethical AI that prioritizes fairness and transparency. Llama 2 and Stable Diffusion XL are well positioned to ride these waves, opening doors for businesses to innovate in areas like personalized medicine, self-driving tech, or immersive virtual worlds. The key? Stay in the loop and stay flexible, so you can seize opportunities as they come.

Pairing Llama 2 with NexaStack and Stable Diffusion XL isn’t just a tech upgrade—it’s a chance for businesses to really harness AI in ways that matter. By taking the time to evaluate what’s valuable, setting up the proper tech foundation, rolling out strategies that work, fine-tuning performance, keeping governance tight, and planning for growth, companies can tap into the full power of these tools. Whether you’re looking to streamline daily tasks, wow your customers, or spark new ideas, this approach sets you up to thrive in an AI-powered world. With Llama 2 and NexaStack in your corner, you’re not just meeting today’s challenges—you’re also shaping what’s next, positioning yourself for long-term success no matter how competitive things get.