As artificial intelligence (AI) continues to revolutionize industries, enterprises increasingly seek flexible and scalable solutions to deploy AI inference workloads efficiently. A cloud-agnostic AI inference platform enables organisations to operate seamlessly across hyperscaler environments, such as AWS, Azure, and Google Cloud, as well as private cloud infrastructure. This hybrid approach mitigates vendor lock-in and helps optimize performance, cost-efficiency, and compliance with data sovereignty requirements.

Hyperscalers offer unparalleled scalability and access to advanced AI tools, making them ideal for dynamic workloads and rapid deployment. However, they may not always provide the most cost-effective or secure option for every type of workload. Private cloud and colocation models offer more predictable pricing structures and enhanced control, making them attractive for enterprises with consistent computing demands and strict regulatory requirements.

By integrating the expansive capabilities of public cloud providers with the control and customization of private clouds, businesses can achieve a balanced infrastructure that supports diverse AI applications. This strategy enables organizations to leverage the strengths of each environment, ensuring that AI models are deployed where they perform best.

In this blog, we will explore the concept of a cloud-agnostic inference platform and how Nexastack’s architecture supports seamless integration across hyperscalers and private cloud environments. We’ll also examine the benefits of utilising such a platform, including how it can streamline AI deployment and optimise business outcomes.

Why Cloud-Agnostic AI Inference Matters

- Evolving AI Workloads

AI inference workloads vary widely in their resource consumption: CPU, GPU, memory, and storage. Some models must serve requests in real time, while others consume significant computational resources for batch processing. Cloud-agnostic platforms enable companies to leverage both hyperscalers and private clouds, responding dynamically to workload demands. Nexastack's architecture addresses this by providing intelligent scheduling of AI inference workloads across multiple cloud platforms.

With Nexastack's agent-first architecture, organisations can run each workload where it best meets performance needs and financial constraints. Whether an organization must scale up quickly on public cloud infrastructure or wants to keep its data within the boundaries of a private cloud, Nexastack can manage and optimize both environments.

- Cost Efficiency and Optimization

Managing the cost of AI workloads is a high priority for any business. Hyperscalers like AWS or Google Cloud scale readily, but their pricing structures can lead to excessive costs, especially during peak times. Private clouds usually involve large upfront capital costs but provide more predictable operational costs.

Nexastack helps organisations balance the two with intelligent cost optimisation features that maximise resource utilisation. It controls costs by distributing inference workloads between private clouds, for steady and predictable workloads, and hyperscalers, for elastic scaling during spikes in demand. By managing workloads across different environments in this way, Nexastack enables businesses to scale their AI workloads dynamically while keeping costs in check.
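To make the baseline-plus-burst idea concrete, here is a minimal Python sketch. It is not Nexastack's API: the function names, the capacity figure, and the prices are all hypothetical, chosen only to illustrate filling fixed private capacity first and sending the overflow to a hyperscaler.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Split:
    private_rps: float  # requests/sec served by reserved private capacity
    public_rps: float   # requests/sec bursting to a hyperscaler


def split_load(demand_rps: float, private_capacity_rps: float) -> Split:
    """Fill the fixed private capacity first, then burst the remainder."""
    if demand_rps < 0 or private_capacity_rps < 0:
        raise ValueError("rates must be non-negative")
    private = min(demand_rps, private_capacity_rps)
    return Split(private_rps=private, public_rps=demand_rps - private)


def hourly_cost(split: Split,
                private_fixed_per_hour: float,
                public_cost_per_1k_requests: float) -> float:
    """Private cost is flat (hypothetical); public cost scales with burst traffic."""
    public_requests_per_hour = split.public_rps * 3600
    return (private_fixed_per_hour
            + public_requests_per_hour / 1000 * public_cost_per_1k_requests)
```

For example, `split_load(800, private_capacity_rps=500)` keeps 500 requests per second on the private cluster and bursts 300 to the public cloud; below 500 requests per second nothing leaves the private environment, so the hourly cost stays at the flat private rate.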

- Data Security and Compliance

Data security and compliance are particularly critical in industries that deal with sensitive information, including government, finance, and healthcare. Although hyperscalers provide robust security, some organizations may want sensitive data stored within their own private cloud infrastructure for greater control. Nexastack enables organizations to run AI workloads where they are best protected, in either a public or a private cloud, without sacrificing compliance with regulatory mandates.

Nexastack provides Private Cloud Compute, which keeps all data within the organization's own infrastructure while allowing workloads to move to public clouds as required. It also includes Policy and Compliance as Code, enabling companies to declare compliance policies as code so that data security and regulatory compliance can be applied more easily across environments.
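As an illustration of what declaring a compliance policy as code can look like, the sketch below expresses a data-residency rule as plain Python data and checks a proposed placement against it. The classification labels, region names, and the `violations` helper are assumptions made for this example and do not reflect Nexastack's actual policy format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    data_classification: str  # e.g. "public", "internal", "restricted"


@dataclass(frozen=True)
class Environment:
    name: str
    kind: str    # "private" or "public"
    region: str


# Hypothetical policy: allowed environment kinds and regions per data class.
POLICY = {
    "restricted": {"kinds": {"private"}, "regions": {"eu-central"}},
    "internal": {"kinds": {"private", "public"},
                 "regions": {"eu-central", "eu-west"}},
    "public": {"kinds": {"private", "public"}, "regions": None},  # None = any
}


def violations(workload: Workload, env: Environment) -> list:
    """Return human-readable policy violations; an empty list means compliant."""
    rule = POLICY.get(workload.data_classification)
    if rule is None:
        return [f"no policy for classification {workload.data_classification!r}"]
    problems = []
    if env.kind not in rule["kinds"]:
        problems.append(f"{workload.name}: {env.kind} cloud not allowed")
    if rule["regions"] is not None and env.region not in rule["regions"]:
        problems.append(f"{workload.name}: region {env.region} not allowed")
    return problems
```

Because the policy is ordinary data, it can be version-controlled, reviewed, and evaluated automatically before any workload is placed, which is the main appeal of the policy-as-code approach.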

- Performance and Latency Optimization

Latency is a key bottleneck for AI inference, especially in applications with real-time or near-real-time output requirements. Hyperscalers deliver compute at scale, but latency suffers when models need to access data stored in distant geographical locations. Using Nexastack, businesses can strategically place workloads across cloud platforms so that inference runs as near to the data or the end-users as possible.
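The placement idea can be sketched in a few lines of Python. The latency table, region names, and `pick_environment` function below are hypothetical; a real system would use measured latencies rather than a hard-coded dictionary.

```python
from typing import List, Optional

# Hypothetical round-trip latencies in milliseconds:
# (user_region, environment) -> ms
LATENCY_MS = {
    ("frankfurt", "private-dc-frankfurt"): 4,
    ("frankfurt", "public-eu-west"): 18,
    ("frankfurt", "public-us-east"): 95,
    ("singapore", "private-dc-frankfurt"): 160,
    ("singapore", "public-ap-southeast"): 6,
}


def pick_environment(user_region: str,
                     candidates: List[str],
                     budget_ms: float) -> Optional[str]:
    """Return the lowest-latency candidate within the budget, or None."""
    measured = [
        (LATENCY_MS[(user_region, env)], env)
        for env in candidates
        if (user_region, env) in LATENCY_MS  # skip environments with no data
    ]
    within_budget = [pair for pair in measured if pair[0] <= budget_ms]
    if not within_budget:
        return None
    return min(within_budget)[1]
```

Returning `None` when no candidate meets the budget makes the failure explicit, so the caller can decide whether to relax the budget or reject the request instead of silently serving from a distant region.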

With Test Time Scaling and Latent Reasoning, Nexastack boosts model performance at inference time by assigning resources in real time to meet the demands of specific workloads. Together, these capabilities improve inference performance, especially for premium AI applications where response quality is very important.

- Business Continuity and Avoiding Vendor Lock-In

Vendor lock-in is one of the risks of relying on a single cloud provider, especially if that provider raises prices, suffers service outages, or discontinues essential services. A cloud-agnostic platform such as Nexastack avoids leaving a business dependent on a single vendor, a dependency that can lead to operational disruption. Through Nexastack, organizations can switch between hyperscalers and private clouds smoothly, maintaining business continuity and reducing the risk of vendor lock-in.

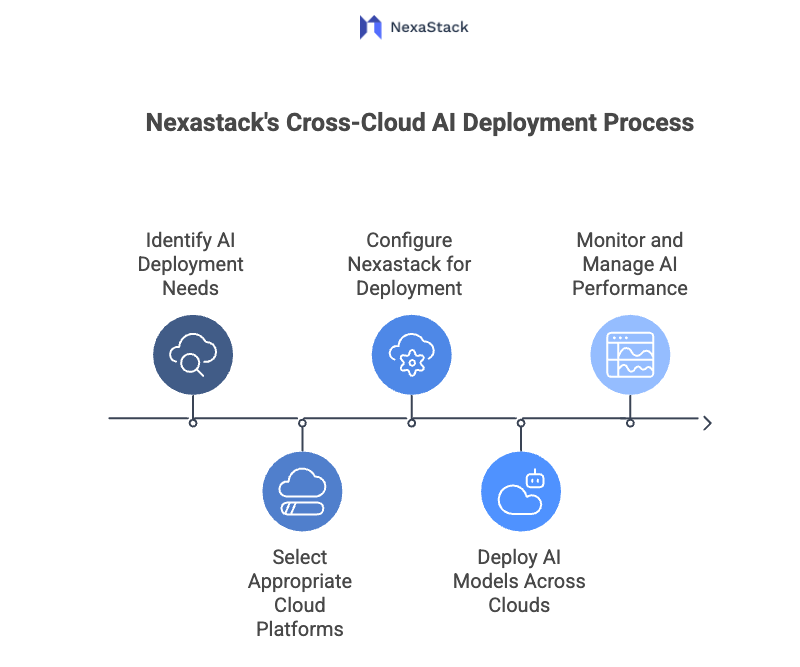

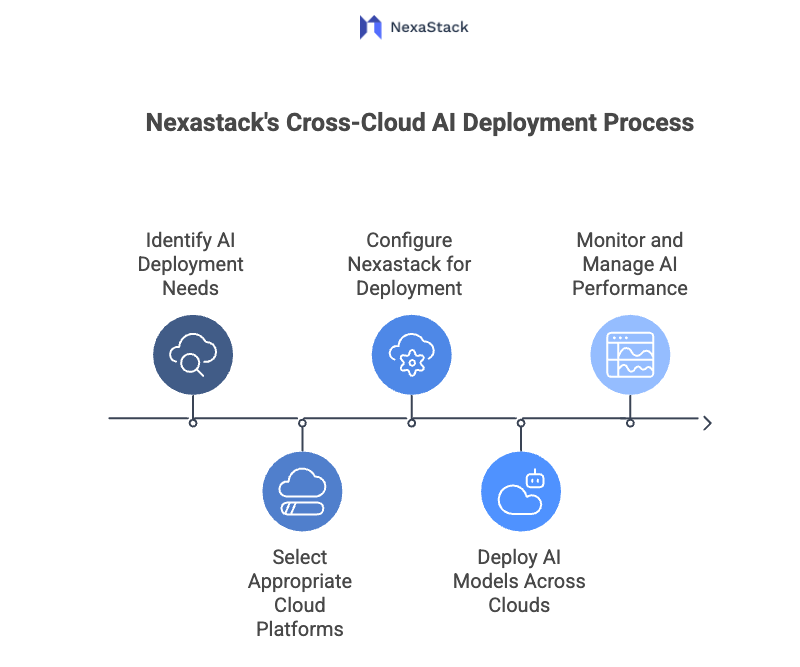

Nexastack's Role in Cross-Cloud AI Deployment

Figure 1: Cross-Cloud AI Deployment Process

Unified Architecture Across Public and Private Clouds

Nexastack is designed to operate on both public clouds (hyperscalers) and private clouds. Its agent-first architecture abstracts away the complexities of the infrastructure so that AI inference workloads can be deployed and managed seamlessly in any cloud. Whether you prefer the scalability of a public cloud or the security and compliance control of a private cloud, Nexastack gives you a single interface to manage both.

With Nexastack's smart scheduling platform, organizations can define the resource needs of their AI models and let the platform automatically select the optimal cloud infrastructure based on these parameters. The outcome is a flexible, cloud-agnostic AI infrastructure that tailors itself to the needs of each workload.
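A simplified view of this kind of requirement-driven selection is sketched below. It assumes a model declares its GPU needs and that each target environment advertises free capacity and a price; the `Requirements` and `Target` types and the cheapest-fit rule are illustrative assumptions, not a description of Nexastack's scheduler.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Requirements:
    gpus: int
    gpu_memory_gb: int          # minimum memory per GPU
    private_only: bool = False  # e.g. set for sensitive workloads


@dataclass(frozen=True)
class Target:
    name: str
    kind: str                   # "private" or "public"
    free_gpus: int
    gpu_memory_gb: int
    cost_per_gpu_hour: float


def fits(req: Requirements, target: Target) -> bool:
    """True if the target has enough free GPUs of sufficient size."""
    if req.private_only and target.kind != "private":
        return False
    return (target.free_gpus >= req.gpus
            and target.gpu_memory_gb >= req.gpu_memory_gb)


def select_target(req: Requirements, targets: List[Target]) -> Optional[Target]:
    """Pick the cheapest target that satisfies the requirements, if any."""
    eligible = [t for t in targets if fits(req, t)]
    return min(eligible, key=lambda t: t.cost_per_gpu_hour, default=None)
```

Cheapest-fit is only one possible objective; the same structure works if the `key` function is replaced with latency, carbon intensity, or a weighted score.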

Leveraging Private Cloud Compute for Security and Privacy

Many organizations, particularly those in regulated industries, have security and privacy requirements that call for private cloud environments. Nexastack Private Cloud Compute gives these organizations complete ownership of their confidential data. Sensitive AI inference workloads can be processed in a secure on-premises environment with full infrastructure control, while other workloads can still be offloaded to public clouds.

One of Nexastack's key differentiators is its ability to combine public and private clouds without compromising security or performance. This makes Nexastack a strong choice for organizations that demand maximum flexibility.

Seamless AI Model Deployment Across Clouds

Once AI models are trained, Nexastack facilitates easy deployment on public and private clouds. Its Model Catalogue enables companies to use various pre-trained models or upload custom ones for fine-tuning. Nexastack's tools support one-click deployment to private cloud infrastructure or hyperscalers, so models can move rapidly into production.

Deployment is not merely about putting models online; it is also about optimising those models for performance. Nexastack supports Test Time Scaling and Latent Reasoning, which allocate resources dynamically at inference time to maximise speed and accuracy.

Comprehensive Compute Management

Nexastack offers strong compute management features for AI model tuning and inference optimization. With its Compute feature, organizations can configure resources for AI workloads so that sufficient capacity is reserved in the cluster. After the compute resources are validated, Nexastack installs and manages infrastructure components like JupyterHub so that teams can experiment and collaborate in a controlled manner.

Regardless of whether inference is being run in a private cloud or scaled within hyperscalers, Nexastack guarantees efficient deployment of AI models with negligible downtime and maximal resource utilization.

AI Model Observability and Monitoring

Observability is key to keeping AI models healthy in production. Nexastack offers strong monitoring and logging features, enabling organizations to track the performance of their AI models in real time. Whether running on hyperscalers or private cloud infrastructure, organizations can keep their models running at their best through continuous monitoring and automatic scaling of resources as workload requirements fluctuate.
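One common way to turn a monitored metric into a scaling decision is the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler: scale the replica count by the ratio of observed to target utilization. The sketch below applies that rule in Python. It is shown as a general illustration, not as Nexastack's autoscaling logic, and the parameter names and bounds are assumptions.

```python
import math


def desired_replicas(current: int,
                     observed_util_pct: float,
                     target_util_pct: float,
                     min_replicas: int = 1,
                     max_replicas: int = 20) -> int:
    """Proportional scaling: ceil(current * observed / target), then clamp."""
    if current < 1:
        raise ValueError("current replica count must be at least 1")
    if target_util_pct <= 0:
        raise ValueError("target utilization must be positive")
    raw = math.ceil(current * observed_util_pct / target_util_pct)
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, raw))
```

With four replicas running at 90% utilization against a 60% target, `desired_replicas(4, 90, 60)` returns 6; at 30% utilization the same call returns 2, releasing capacity when demand falls.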

Advantages of Nexastack's Cloud-Agnostic Inference Platform

- Flexibility and Scalability

By leveraging Nexastack’s cloud-agnostic approach, organizations can scale their AI workloads across any cloud environment. Whether the need arises to expand during periods of high demand or scale down to control costs, Nexastack ensures that resources are allocated appropriately to meet demand.

- Security and Compliance

Nexastack’s Private Cloud Compute capabilities ensure that organizations can comply with strict data security regulations. Whether processing sensitive data in a private cloud or using public cloud services for less-critical workloads, businesses can maintain complete control over their data and adhere to industry compliance standards.

- Cost Optimization

Through its cost optimisation features, Nexastack helps reduce the cost of running AI inference workloads across multiple clouds, allowing organisations to maximise performance while minimising resource consumption.

- Vendor Independence

Avoiding vendor lock-in is crucial in today’s competitive landscape. With Nexastack, businesses can seamlessly migrate workloads across different cloud environments without being locked into one specific vendor, ensuring business continuity and flexibility.

Conclusion: Embracing a Cloud-Agnostic AI Strategy

The future of AI deployment is multi-cloud, and Nexastack is at the forefront with an architecture that allows organizations to deploy inference workloads natively on hyperscalers and private clouds. By combining the resource elasticity of the public cloud with the security and control of private clouds, Nexastack offers a robust solution that enables organisations to optimise performance, manage cost, and comply with data security regulations.