Computer vision has rapidly evolved from a niche research field into one of the most transformative forces in modern enterprises. From automating quality inspection to enhancing customer analytics and improving healthcare diagnostics, Vision AI has become the foundation of next-generation business intelligence. However, while the promise is enormous, the path to production is complex.

Enterprises face the daunting challenge of scaling Vision AI workloads — training deep learning models on massive image and video datasets, orchestrating distributed compute infrastructure, and maintaining consistent performance across diverse deployment environments.

NexaStack is built to bridge this gap. It provides a scalable, composable, cloud-native Vision AI stack that enables organisations to build, deploy, and manage computer vision systems efficiently and reliably. Designed for enterprise-grade use, NexaStack unifies data, model, and infrastructure management under one platform, helping businesses move from experimentation to large-scale production with far less friction.

Why Enterprises Need Scalable Vision AI

Enterprises today operate in a visually rich, sensor-driven environment. Manufacturing lines generate continuous inspection footage; healthcare systems process terabytes of imaging data; retail environments depend on visual analytics for customer behaviour insights; and smart cities rely on surveillance and IoT vision feeds for public safety.

The challenge lies not in generating data but in deriving intelligence from it — at scale. Traditional machine learning setups often struggle to handle the demands of high-resolution imagery, continuous data ingestion, and real-time inference requirements.

Key pain points enterprises face:

- Data fragmentation: Visual data is scattered across edge devices, storage systems, and cloud silos.
- Model scalability: Models trained on limited samples often fail in production when faced with diverse conditions.
- Infrastructure bottlenecks: GPUs and storage resources are underutilised or overburdened.
- Compliance and governance: Vision AI models frequently process sensitive or identifiable data. Ensuring compliance with GDPR, HIPAA, or SOC 2 requires built-in traceability.

A scalable Vision AI stack like NexaStack addresses these challenges by combining automation, scalability, and security in one platform, allowing enterprises to focus on insights rather than infrastructure.

Role of NexaStack in Building Robust AI Pipelines

NexaStack is designed as a Vision AI control plane: a unified orchestration layer that spans data pipelines, model lifecycles, and compute infrastructure. Its architecture embodies the principles of MLOps, DevSecOps, and Zero-Trust AI, so that Vision AI can be deployed securely and efficiently across hybrid environments.

How NexaStack strengthens AI pipelines:

- Unified Pipeline Management: From data ingestion to model deployment, all stages are managed under one dashboard with full traceability.
- Elastic Infrastructure: Dynamically scales GPU/TPU nodes for compute-intensive workloads.
- Model Registry and Governance: Tracks every model version, dataset lineage, and hyperparameter configuration.
- Continuous Delivery for AI: Automates training, validation, and deployment cycles, ensuring Vision AI systems remain accurate and up to date.
- Integration-ready: NexaStack offers APIs and SDKs for integration with ERP, CRM, IoT, and edge systems.
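To make the registry and governance idea concrete, the sketch below shows one way a registry record can tie a model version to its hyperparameters and to a fingerprint of its training data. It is a minimal illustration, not NexaStack's API: `ModelRegistry`, `ModelRecord`, and the manifest format of (file path, content hash) pairs are all hypothetical names chosen for this example.

```python
import hashlib
import json
from dataclasses import dataclass, field


def fingerprint(obj) -> str:
    """Stable SHA-256 of any JSON-serialisable object (keys sorted)."""
    payload = json.dumps(obj, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass
class ModelRecord:
    name: str
    version: int
    dataset_manifest: tuple  # hypothetical: (file path, content hash) pairs used for training
    hyperparameters: dict = field(default_factory=dict)

    @property
    def dataset_lineage_id(self) -> str:
        # Same manifest -> same id, so versions trained on identical data are linkable.
        return fingerprint([list(item) for item in self.dataset_manifest])


class ModelRegistry:
    """Hypothetical in-memory registry; a production system would persist records."""

    def __init__(self):
        self._versions = {}

    def register(self, name, dataset_manifest, hyperparameters):
        history = self._versions.setdefault(name, [])
        record = ModelRecord(
            name=name,
            version=len(history) + 1,  # versions increase monotonically per model name
            dataset_manifest=tuple(tuple(item) for item in dataset_manifest),
            hyperparameters=dict(hyperparameters),  # copy so later edits don't mutate history
        )
        history.append(record)
        return record

    def latest(self, name):
        return self._versions[name][-1]


registry = ModelRegistry()
manifest = [("train/0001.jpg", "a1f3"), ("train/0002.jpg", "9bc0")]
v1 = registry.register("defect-detector", manifest, {"lr": 1e-3, "epochs": 20})
v2 = registry.register("defect-detector", manifest, {"lr": 5e-4, "epochs": 30})
print(v2.version, v1.dataset_lineage_id == v2.dataset_lineage_id)  # 2 True
```

Hashing the manifest rather than the raw images keeps lineage checks cheap while still detecting any change to the training set.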

By abstracting away complexity, NexaStack allows data scientists, engineers, and IT teams to collaborate seamlessly across the entire Vision AI lifecycle.

Understanding the Vision AI Stack

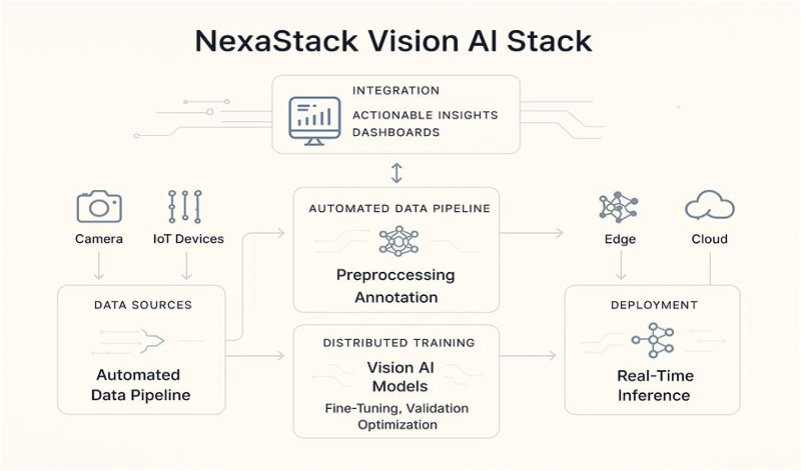

Fig 1: NexaStack Vision AI stack

A Vision AI stack represents the end-to-end ecosystem that enables image- and video-based machine learning. It typically includes three layers working in harmony: data, models, and infrastructure.

- Data Layer

The foundation of every Vision AI system. This includes:

- Model Layer

This is where learning happens. Vision AI models, from CNNs to Vision Transformers, are trained and evaluated here. It includes:

- Infrastructure Layer

The backbone that supports compute-intensive AI tasks. This involves:

NexaStack connects these layers seamlessly through a modular, composable AI fabric, allowing enterprises to evolve their Vision AI systems as workloads grow.

Core Components: Data, Models, and Infrastructure

Data

NexaStack’s data pipeline framework enables enterprises to ingest, preprocess, and label massive datasets with minimal manual effort.

It supports:

- Multi-format inputs (images, videos, LiDAR, thermal, depth maps)
- Automatic schema inference and metadata tagging
- Integration with Amazon S3, Google Cloud Storage, Azure Blob Storage, and on-prem systems
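Metadata tagging and schema inference can start from something as simple as mapping each object to a modality and summarising a batch. The sketch below is a minimal illustration under stated assumptions: the extension-to-modality table, the `tag` and `infer_schema` helpers, and the `s3://plant/...` URIs are hypothetical, and thermal formats are omitted because they vary by vendor.

```python
from collections import Counter
from pathlib import PurePosixPath

# Hypothetical extension-to-modality table. Real pipelines should also inspect
# file headers, since extensions can be wrong or missing.
MODALITY_BY_EXTENSION = {
    ".jpg": "image", ".jpeg": "image", ".png": "image",
    ".mp4": "video", ".avi": "video",
    ".pcd": "lidar", ".las": "lidar",
}


def tag(uri: str, source: str) -> dict:
    """Attach basic metadata to one object URI."""
    suffix = PurePosixPath(uri).suffix.lower()  # case-insensitive: ".JPG" -> ".jpg"
    return {
        "uri": uri,
        "source": source,
        "extension": suffix,
        "modality": MODALITY_BY_EXTENSION.get(suffix, "unknown"),
    }


def infer_schema(records):
    """Summarise a batch as modality -> count, a crude stand-in for schema inference."""
    return dict(Counter(r["modality"] for r in records))


uris = ["s3://plant/cam1/0001.JPG", "s3://plant/cam1/0002.jpg",
        "s3://plant/lidar/scan_07.pcd", "s3://plant/notes.txt"]
records = [tag(u, source="line-3") for u in uris]
print(infer_schema(records))  # {'image': 2, 'lidar': 1, 'unknown': 1}
```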

AI-assisted labelling tools accelerate dataset creation using semi-supervised annotation and active learning loops — drastically reducing human workload while improving accuracy.
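Active learning loops decide which unlabelled samples are worth a human's time. One common strategy, uncertainty sampling, ranks samples by the entropy of the model's predicted class probabilities and sends the most uncertain ones for annotation. The sketch below assumes that strategy; the source does not say which selection rule NexaStack uses, and `select_for_labelling` is a hypothetical helper.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a class-probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labelling(predictions, budget):
    """Return the ids of the `budget` most uncertain samples (highest entropy first)."""
    ranked = sorted(predictions.items(), key=lambda kv: entropy(kv[1]), reverse=True)
    return [sample_id for sample_id, _ in ranked[:budget]]


predictions = {
    "img_001": [0.98, 0.01, 0.01],  # confident: low entropy
    "img_002": [0.40, 0.35, 0.25],
    "img_003": [0.70, 0.20, 0.10],
    "img_004": [0.34, 0.33, 0.33],  # near-uniform: highest entropy
}
print(select_for_labelling(predictions, budget=2))  # ['img_004', 'img_002']
```

Entropy is only one choice; margin sampling (gap between the top two classes) or least-confidence scoring are drop-in alternatives.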

Models

The platform comes pre-integrated with leading frameworks:

NexaStack supports transfer learning, fine-tuning, and model distillation, enabling rapid iteration and optimisation of vision models for diverse applications — from defect detection to emotion recognition.

Infrastructure

Built on Kubernetes-native architecture, NexaStack dynamically provisions compute and storage resources based on workload demand.
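For a sense of how Kubernetes-native demand-based scaling decides capacity, the Kubernetes Horizontal Pod Autoscaler computes `desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue)` and skips scaling when the ratio is within a tolerance (0.1 by default). The sketch below reproduces that rule in plain Python; the metric is assumed to be average GPU utilisation per inference pod, which in a real cluster would need a custom-metrics adapter, and the replica bounds are illustrative.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """HPA-style replica count for a utilisation metric (e.g. average GPU %)."""
    ratio = current_metric / target_metric
    # Close enough to target: keep the current size to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_metric=90, target_metric=60))   # 6: scale out
print(desired_replicas(4, current_metric=63, target_metric=60))   # 4: within tolerance
print(desired_replicas(8, current_metric=180, target_metric=60))  # 10: capped at max
```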

Challenges in Scaling Vision AI Workloads

Scaling Vision AI isn’t just about adding more GPUs or storage. The true complexity lies in orchestrating a continuous flow of data, training, and inference, while maintaining quality and compliance.

Common challenges include:

NexaStack’s AI observability and automation engine mitigates these by enforcing consistency and transparency across the stack — from dataset lineage to deployment monitoring.

Data Pipeline for Vision AI

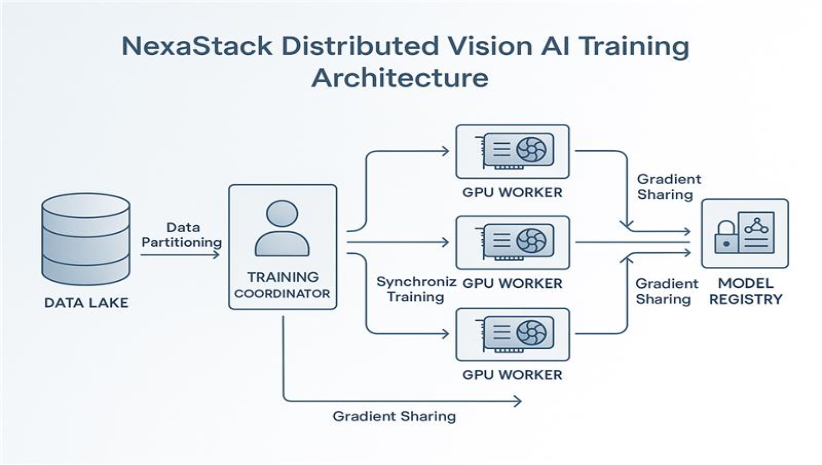

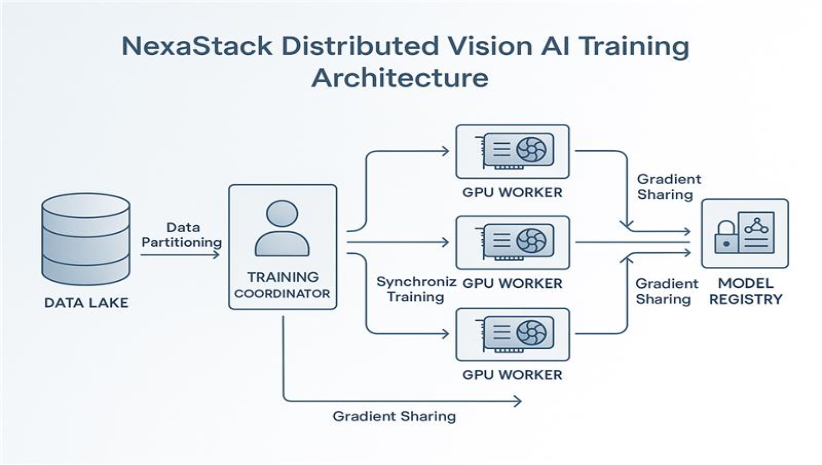

Fig 2: NexaStack Distributed Vision AI Training Architecture

Data Ingestion and Preprocessing at Scale

NexaStack enables organisations to connect data sources — cameras, sensors, drones, or cloud repositories — into a unified data pipeline. Using parallelised data ingestion and preprocessing nodes, it can handle terabytes of image data with minimal latency. The system automatically detects corrupt files, duplicates, and anomalies, ensuring high data fidelity.
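A first-pass screen for corrupt files and duplicates can be done with cheap byte-level checks before any decoding. The sketch below is an assumption about how such a screen might look, not NexaStack's implementation: it treats a PNG as intact if it starts with the PNG signature and ends with the standard `IEND` chunk, a JPEG as intact if it starts with the SOI marker and ends with the EOI marker, and flags byte-identical duplicates via SHA-256. The `screen` helper and sample byte strings are hypothetical.

```python
import hashlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
PNG_IEND = b"IEND\xaeB`\x82"        # IEND chunk type + its fixed CRC
JPEG_SOI, JPEG_EOI = b"\xff\xd8", b"\xff\xd9"


def looks_intact(data: bytes) -> bool:
    """Heuristic: correct start marker and end marker for PNG or JPEG."""
    if data.startswith(PNG_SIGNATURE):
        return data.endswith(PNG_IEND)
    if data.startswith(JPEG_SOI):
        return data.endswith(JPEG_EOI)
    return False  # unknown format: treat as suspect


def screen(files: dict):
    """Split files into clean, exact duplicates (dup, original), and corrupt."""
    seen, clean, duplicates, corrupt = {}, [], [], []
    for name, data in files.items():
        if not looks_intact(data):
            corrupt.append(name)
            continue
        digest = hashlib.sha256(data).hexdigest()
        if digest in seen:
            duplicates.append((name, seen[digest]))
        else:
            seen[digest] = name
            clean.append(name)
    return clean, duplicates, corrupt


jpeg = JPEG_SOI + b"fake-scan-data" + JPEG_EOI
files = {
    "a.jpg": jpeg,
    "b.jpg": jpeg,                          # byte-identical copy of a.jpg
    "c.jpg": JPEG_SOI + b"truncated",       # missing end marker
    "d.png": PNG_SIGNATURE + b"..." + PNG_IEND,
}
print(screen(files))
```

Exact hashing only catches byte-identical copies; near-duplicates (re-encoded or resized frames) would need perceptual hashing or embedding similarity, and some cameras append data after the JPEG end marker, so a production check would decode files rather than trust markers alone.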

Labelling and Annotation Automation

Labelling is traditionally time-consuming and error-prone. NexaStack employs AI-assisted labelling, where pre-trained models perform first-pass annotations, and humans only review uncertain cases. This hybrid loop achieves up to 70% faster labelling with higher precision.
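The hybrid loop described above boils down to a confidence threshold: pre-annotations the model is sure about are accepted, and the rest go to a human review queue. In the sketch below, `PreAnnotation`, `triage`, and the 0.9 threshold are illustrative assumptions; in practice the threshold would be tuned against audit results on a held-out labelled set.

```python
from dataclasses import dataclass


@dataclass
class PreAnnotation:
    image_id: str
    label: str
    confidence: float  # model's confidence in its first-pass label


def triage(annotations, accept_threshold=0.9):
    """Split first-pass annotations into auto-accepted and human-review queues."""
    auto_accepted, needs_review = [], []
    for ann in annotations:
        target = auto_accepted if ann.confidence >= accept_threshold else needs_review
        target.append(ann)
    return auto_accepted, needs_review


batch = [
    PreAnnotation("img_01", "scratch", 0.97),
    PreAnnotation("img_02", "dent", 0.62),
    PreAnnotation("img_03", "ok", 0.91),
]
accepted, review = triage(batch)
print([a.image_id for a in review])  # ['img_02']
```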

Handling Multimodal Data Efficiently

NexaStack’s pipeline is multimodal by design. It can fuse:

This multimodal fusion creates richer contextual understanding — essential for tasks like scene recognition or predictive maintenance.

Model Development and Training

Vision Model Architectures and Frameworks

NexaStack offers a model zoo with ready-to-use architectures:

Developers can deploy pre-trained models or train custom architectures using NexaStack’s high-performance environment optimised for GPUs and TPUs.

Distributed Training with NexaStack

For enterprises dealing with petabyte-scale data, NexaStack supports distributed data parallel (DDP) and model parallel (MP) training using Kubernetes, Ray, and Horovod.

It includes:

Training runs are automatically logged in NexaStack’s Experiment Tracker, ensuring reproducibility and version control.

Continuous Improvement with Feedback Loops

Once deployed, NexaStack continuously collects inference metrics — such as confidence scores, error rates, and latency — feeding them back into the training pipeline. This closed-loop system enables auto-retraining and model promotion, ensuring models evolve with new data trends and maintain high performance.
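A closed feedback loop needs two decisions: when to retrain, and whether the retrained model should replace the one in production. The sketch below assumes a simple policy for each, a rolling mean of inference confidence as the retraining trigger and a minimum error-rate improvement as the promotion gate; `DriftMonitor`, `should_promote`, and all thresholds are hypothetical.

```python
from collections import deque
from statistics import mean


class DriftMonitor:
    """Rolling window over inference confidences; flags retraining when the mean drops."""

    def __init__(self, window=100, min_mean_confidence=0.8):
        self.scores = deque(maxlen=window)
        self.min_mean_confidence = min_mean_confidence

    def observe(self, confidence):
        self.scores.append(confidence)

    def should_retrain(self):
        # Only decide once the window is full, to avoid reacting to a handful of samples.
        full = len(self.scores) == self.scores.maxlen
        return full and mean(self.scores) < self.min_mean_confidence


def should_promote(candidate_error, production_error, min_improvement=0.01):
    """Promote only if the candidate beats production by a clear margin."""
    return production_error - candidate_error >= min_improvement


monitor = DriftMonitor(window=5, min_mean_confidence=0.8)
for c in [0.95, 0.90, 0.70, 0.65, 0.60]:
    monitor.observe(c)
print(monitor.should_retrain())       # True: rolling mean is 0.76
print(should_promote(0.042, 0.055))   # True: 1.3-point improvement
print(should_promote(0.050, 0.055))   # False: improvement below the margin
```

Confidence alone can drift without accuracy changing (and vice versa), so a real loop would typically combine it with labelled spot checks before retraining.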

Inference and Deployment

Real-time vs. Batch Inference Pipelines

NexaStack distinguishes between real-time and batch workloads:

The platform intelligently routes workloads based on SLA requirements.
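SLA-based routing can be expressed as a small planning step: jobs with a tight latency SLA go to the real-time path, and everything else is grouped into batches. The sketch below illustrates that idea under assumptions of its own; the `InferenceJob` type, the 1,000 ms cut-off, and the fixed batch size are hypothetical, not NexaStack's routing policy.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class InferenceJob:
    job_id: str
    latency_sla_ms: Optional[float]  # None means no latency requirement


def plan(jobs, realtime_cutoff_ms=1_000, batch_size=2):
    """Route tight-SLA jobs to real-time serving; group the rest into batches."""
    def is_realtime(job):
        return job.latency_sla_ms is not None and job.latency_sla_ms <= realtime_cutoff_ms

    realtime = [j.job_id for j in jobs if is_realtime(j)]
    deferred = [j.job_id for j in jobs if not is_realtime(j)]
    batches = [deferred[i:i + batch_size] for i in range(0, len(deferred), batch_size)]
    return realtime, batches


jobs = [
    InferenceJob("cam-feed-1", 50),
    InferenceJob("nightly-audit", None),
    InferenceJob("cam-feed-2", 80),
    InferenceJob("weekly-report", 3_600_000),
    InferenceJob("archive-scan", None),
]
realtime, batches = plan(jobs)
print(realtime)  # ['cam-feed-1', 'cam-feed-2']
print(batches)   # [['nightly-audit', 'weekly-report'], ['archive-scan']]
```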

Edge vs. Cloud Deployment Strategies

NexaStack’s hybrid deployment engine supports edge AI, cloud AI, and hybrid configurations:

Edge deployments use lightweight orchestration such as K3s or Docker Swarm, while cloud deployments leverage Kubernetes clusters and GPU pools for large-scale inference.
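Whether a model fits at the edge often comes down to simple arithmetic: weight memory is roughly the parameter count times bytes per parameter (4 for fp32, 2 for fp16, 1 for int8). The sketch below uses that estimate to choose a target; the 50% memory headroom and the 128 MB device are illustrative assumptions, and the estimate ignores activations and runtime overhead.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_footprint_mb(num_params, precision):
    """Approximate weight memory in MB (weights only, no activations)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e6


def choose_target(num_params, precision, edge_memory_mb, headroom=0.5):
    """Deploy at the edge only if weights fit in an assumed fraction of device memory."""
    budget = edge_memory_mb * headroom
    return "edge" if weight_footprint_mb(num_params, precision) <= budget else "cloud"


params = 25_000_000  # a hypothetical 25M-parameter detector
print(weight_footprint_mb(params, "fp32"), choose_target(params, "fp32", 128))  # 100.0 cloud
print(weight_footprint_mb(params, "int8"), choose_target(params, "int8", 128))  # 25.0 edge
```

This is why quantisation and distillation, mentioned earlier as supported techniques, are often prerequisites for edge deployment rather than optional optimisations.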

Integration with Enterprise Systems

Vision AI becomes powerful only when integrated into business workflows. NexaStack provides RESTful APIs, event streams, and webhook triggers that enable seamless integration with enterprise applications — ERP, MES, CRM, or IoT platforms.

This ensures insights generated by Vision AI can trigger automated business actions, such as halting defective production lines, alerting staff, or updating customer profiles in real time.
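Webhook-driven actions are usually signed so the receiving system can trust them before, say, halting a production line. The sketch below shows a common pattern using HMAC-SHA256 over a timestamp plus the JSON body, verified with a constant-time comparison. The event type, payload fields, header names (`X-Signature`, `X-Timestamp`), and shared secret are hypothetical; the source does not specify NexaStack's webhook format.

```python
import hashlib
import hmac
import json
import time


def _sign(secret: bytes, ts: str, body: bytes) -> str:
    # Signing timestamp + body lets receivers reject replayed old events.
    return hmac.new(secret, ts.encode() + b"." + body, hashlib.sha256).hexdigest()


def build_webhook(event_type, payload, secret: bytes, timestamp=None):
    """Serialise an event and attach an HMAC signature header."""
    body = json.dumps({"type": event_type, "data": payload}, sort_keys=True).encode("utf-8")
    ts = str(int(timestamp if timestamp is not None else time.time()))
    headers = {"Content-Type": "application/json",
               "X-Timestamp": ts,
               "X-Signature": _sign(secret, ts, body)}
    return headers, body


def verify(headers, body, secret: bytes) -> bool:
    """Receiver side: recompute the signature and compare in constant time."""
    expected = _sign(secret, headers["X-Timestamp"], body)
    return hmac.compare_digest(expected, headers["X-Signature"])


secret = b"shared-secret"
headers, body = build_webhook(
    "defect.detected",
    {"line": "press-04", "defect": "crack", "confidence": 0.97, "action": "halt_line"},
    secret,
    timestamp=1700000000,
)
print(verify(headers, body, secret))                                # True
print(verify(headers, body.replace(b"crack", b"scuff"), secret))    # False: tampered
```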