The global AI image-generation market is projected to exceed $900 million by 2030 as enterprises seek faster, more cost-effective creative workflows. Managed APIs charge approximately $0.0036 per SDXL image. Self-hosting Stable Diffusion can cut long-term costs, grant full data sovereignty, and unlock deep customisation, but it requires robust infrastructure, governance, and scaling strategies. NexaStack AI’s Unified Inference Platform delivers Infrastructure-as-Code deployments, intelligent GPU scheduling, built-in security and governance controls, and hybrid-cloud flexibility, accelerating time-to-production for Stable Diffusion APIs from months to days.

Generative image models such as Stable Diffusion produce detailed, photorealistic images from text and image prompts. The example below (a forest treehouse scene) shows the kind of output it can generate.

By self-hosting the Stable Diffusion API, teams can keep sensitive prompts and outputs on their own systems, customise the model, and potentially reduce per-image costs compared to third-party API services.

Understanding Stable Diffusion

How Stable Diffusion Works

Stable Diffusion is a latent diffusion model that denoises lower-dimensional latent representations rather than pixels, enabling high-fidelity images with reduced compute demands. Its core comprises a frozen CLIP ViT-L/14 text encoder (123 M parameters) and an 860 M-parameter U-Net, supporting text-to-image, image-to-image, and inpainting pipelines.
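To give a rough sense of why working in latent space reduces compute, the sketch below compares the number of values in an RGB image with the number in its latent. It assumes the commonly cited Stable Diffusion v1 settings (an 8x spatial downsampling factor and 4 latent channels); the function names are illustrative and not part of any library.

```python
# Assumed Stable Diffusion v1 settings: the autoencoder shrinks each
# spatial side by a factor of 8 and the latent has 4 channels.
VAE_DOWNSAMPLE = 8
LATENT_CHANNELS = 4
RGB_CHANNELS = 3


def latent_shape(height: int, width: int) -> tuple:
    """Return (channels, height, width) of the latent for an RGB image."""
    if height % VAE_DOWNSAMPLE or width % VAE_DOWNSAMPLE:
        raise ValueError("height and width must be multiples of 8")
    return (LATENT_CHANNELS, height // VAE_DOWNSAMPLE, width // VAE_DOWNSAMPLE)


def compression_ratio(height: int, width: int) -> float:
    """Ratio of pixel-space values to latent-space values."""
    channels, lat_h, lat_w = latent_shape(height, width)
    return (RGB_CHANNELS * height * width) / (channels * lat_h * lat_w)


print(latent_shape(512, 512))       # (4, 64, 64)
print(compression_ratio(512, 512))  # 48.0
```

Under these assumptions, the U-Net denoises a tensor with 48 times fewer values than the final 512x512 image.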

Key Differences from Other AI Image Models

- Open License: Released under the CreativeML Open RAIL-M license, it permits commercial use, fine-tuning, and redistribution, subject to responsible-use guidelines.

- Customizability: Easily extended with ControlNet, LoRA, or DreamBooth adapters to specialise in domain-specific data, unlike closed-source offerings.

Self-Hosted vs. Hosted Services: Weighing the Trade-Offs

When deciding between self-hosting and using a managed API, there are clear trade-offs:

- Control and Privacy: Self-hosting keeps all data and models in your environment. You decide how images are generated, stored, and secured. This is critical for sensitive or proprietary applications (for example, custom brand imagery or private patient data). Hosted services introduce another party into the loop, which may raise compliance or confidentiality concerns.

- Cost: Managed APIs charge per run or per second of GPU time. By contrast, self-hosting requires up-front investment in hardware or cloud instances (CPUs, GPUs, storage) plus ongoing maintenance. A dedicated NVIDIA GPU might cost the equivalent of a few dollars per hour, which at high utilization can yield a lower cost per image than pay-as-you-go APIs.

- Customization: With self-hosting, you can fine-tune or swap models freely. The open license of Stable Diffusion (CreativeML Open RAIL-M) lets you modify the model for specific domains and host those fine-tuned versions. Hosted platforms may limit which models or custom weights you can deploy (often at extra cost). Self-hosting removes that constraint, letting you run very large models (like SDXL with additional guidance networks).

- Vendor Features: Managed services often include convenience features (auto-scaling, web UI, pre-built SDKs) and built-in moderation filters. For example, some services offer a serverless Inference API and fully managed Inference Endpoints. These let you deploy a model with a few clicks and handle authentication for you. Self-hosting has none of these turnkey features, so you need to build your own API key management, monitoring, and failover.
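The cost trade-off above can be made concrete with a small calculation. The sketch below uses the $0.0036 per-image managed price quoted earlier in this article; the GPU price ($2 per hour) and throughput (1,200 images per hour) are hypothetical placeholders, so substitute your own measurements.

```python
API_PRICE_PER_IMAGE = 0.0036  # USD, managed SDXL price quoted in this article


def self_hosted_cost_per_image(gpu_usd_per_hour, images_per_hour, utilization):
    """Effective cost per image when the GPU is busy only part of the time."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    if images_per_hour <= 0:
        raise ValueError("images_per_hour must be positive")
    return gpu_usd_per_hour / (images_per_hour * utilization)


def break_even_utilization(gpu_usd_per_hour, images_per_hour,
                           api_price=API_PRICE_PER_IMAGE):
    """Utilization above which self-hosting is cheaper than the managed API."""
    return gpu_usd_per_hour / (images_per_hour * api_price)


# Hypothetical inputs: a $2/hour GPU producing 1,200 images/hour when busy.
print(round(self_hosted_cost_per_image(2.0, 1200, 1.0), 4))  # 0.0017
print(round(break_even_utilization(2.0, 1200), 3))           # 0.463
```

With these placeholder numbers, self-hosting only beats the managed price once the GPU is busy more than roughly 46% of the time. The calculation ignores storage, networking, and staff time, which push the real break-even point higher.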

Why Choose NexaStack for Stable Diffusion

NexaStack's Unified Inference Platform uses Infrastructure-as-Code (IaC), in the form of reusable Terraform modules and Helm charts, to provision GPU clusters, networking policies, and storage configurations in a private cloud or hybrid environment. Every change is versioned and auditable before deployment.

Figure 1: Unified Inference Platform Architecture


The platform's unified CLI and Python SDK extend this automation by enabling ML engineers to script environment provisioning and model endpoint lifecycle workflows directly into existing CI/CD pipelines, eliminating manual steps and accelerating time-to-first-inference.

Self-Service Experimentation Playground

Thanks to NexaStack's composable platform for innovation labs, data scientists and ML researchers can launch playground sessions (pre-configured with Stable Diffusion kernels, GPU quotas, and data connectors) without waiting on infrastructure teams.

Each experiment is automatically tracked: prompts, model versions, hyperparameters, resource utilisation, and generated outputs are logged and versioned, enabling side-by-side comparisons and reproducibility across teams. Moreover, the intelligent scheduler provides real-time estimates of GPU hours, memory requirements, and cost implications before jobs start, helping to avoid resource waste and optimise budget allocation.

Built-In Governance & Observability

NexaStack embeds a policy-driven governance engine that enforces performance, bias, and security checks at every stage. Automated workflows ensure that only approved and fine-tuned models are promoted to production.

Role-based access controls, integrated with enterprise IAM, manage permissions for API endpoints, notebook environments, and CLI actions. Immutable audit logs record every user operation, API call, and configuration change for compliance and forensic analysis. Out-of-the-box dashboards, powered by Prometheus and Grafana, visualise real-time metrics such as latency, throughput, GPU/CPU utilisation, and error rates, and support customizable alerts via Slack, email, or webhooks to proactively surface issues in production.
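As a generic illustration of how role-based checks and a tamper-evident audit trail can fit together, the sketch below pairs a role-to-permission lookup with a hash-chained, append-only log. It is not NexaStack's implementation; the roles, actions, and class names are hypothetical.

```python
import hashlib
import json

# Hypothetical role-to-permission mapping, for illustration only.
ROLE_PERMISSIONS = {
    "viewer": {"read_metrics"},
    "engineer": {"read_metrics", "call_endpoint"},
    "admin": {"read_metrics", "call_endpoint", "deploy_model"},
}


def is_allowed(role, action):
    """Return True if the role grants the action; unknown roles get nothing."""
    return action in ROLE_PERMISSIONS.get(role, set())


class AuditLog:
    """Append-only log in which each entry stores the previous entry's hash."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(record):
        # Hash every field except the stored hash itself.
        payload = {k: v for k, v in record.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()

    def append(self, user, action, allowed):
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        record = {"user": user, "action": action, "allowed": allowed, "prev": prev}
        record["hash"] = self._digest(record)
        self.entries.append(record)

    def verify(self):
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev = self.GENESIS
        for record in self.entries:
            if record["prev"] != prev or record["hash"] != self._digest(record):
                return False
            prev = record["hash"]
        return True


log = AuditLog()
log.append("alice", "deploy_model", is_allowed("engineer", "deploy_model"))
log.append("bob", "deploy_model", is_allowed("admin", "deploy_model"))
print(log.verify())  # True
```

Changing any stored field afterwards makes `verify()` return `False`, which is the property an audit trail needs. A production system would also persist entries to write-once storage.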

Implementation Guide

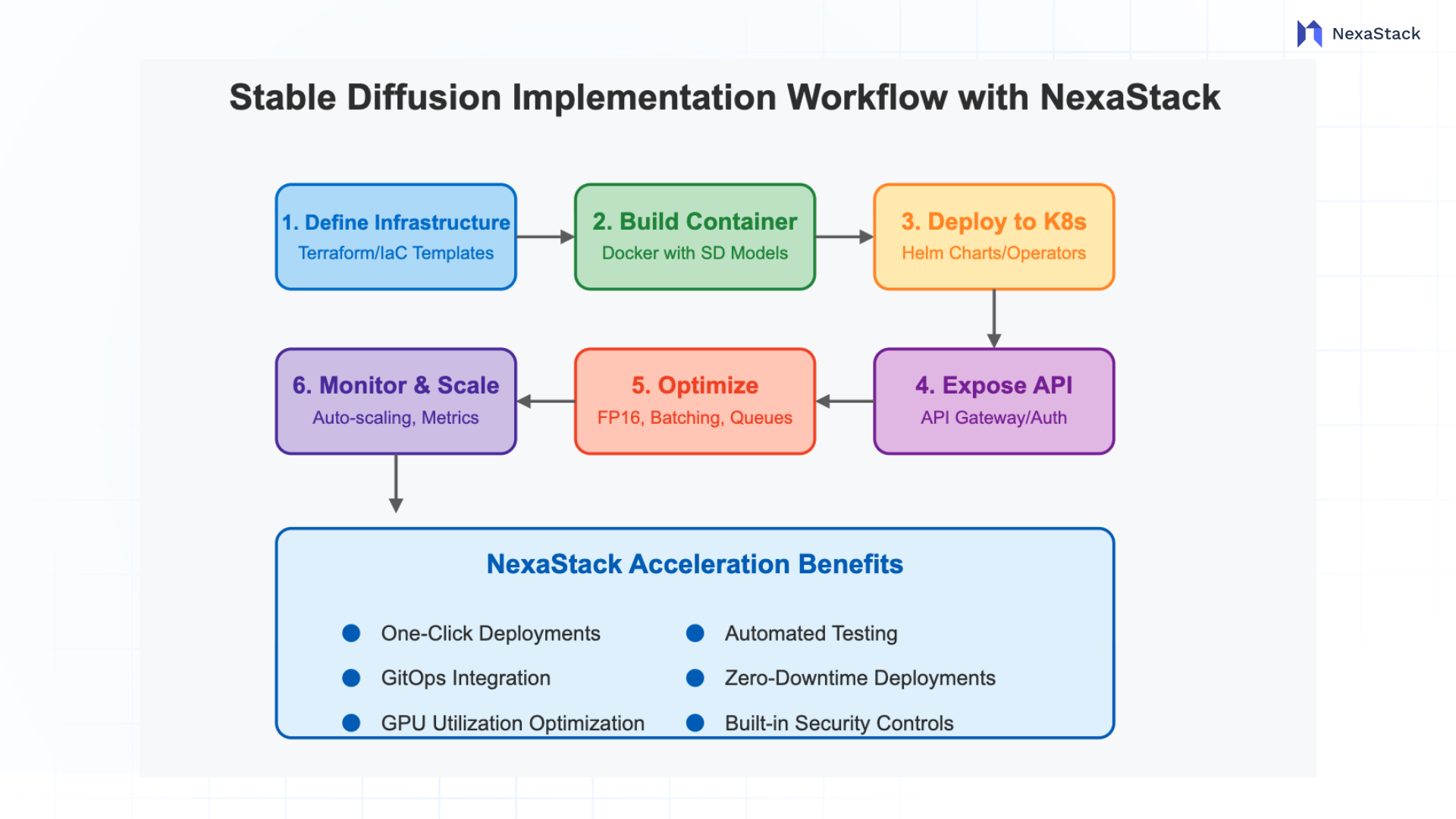

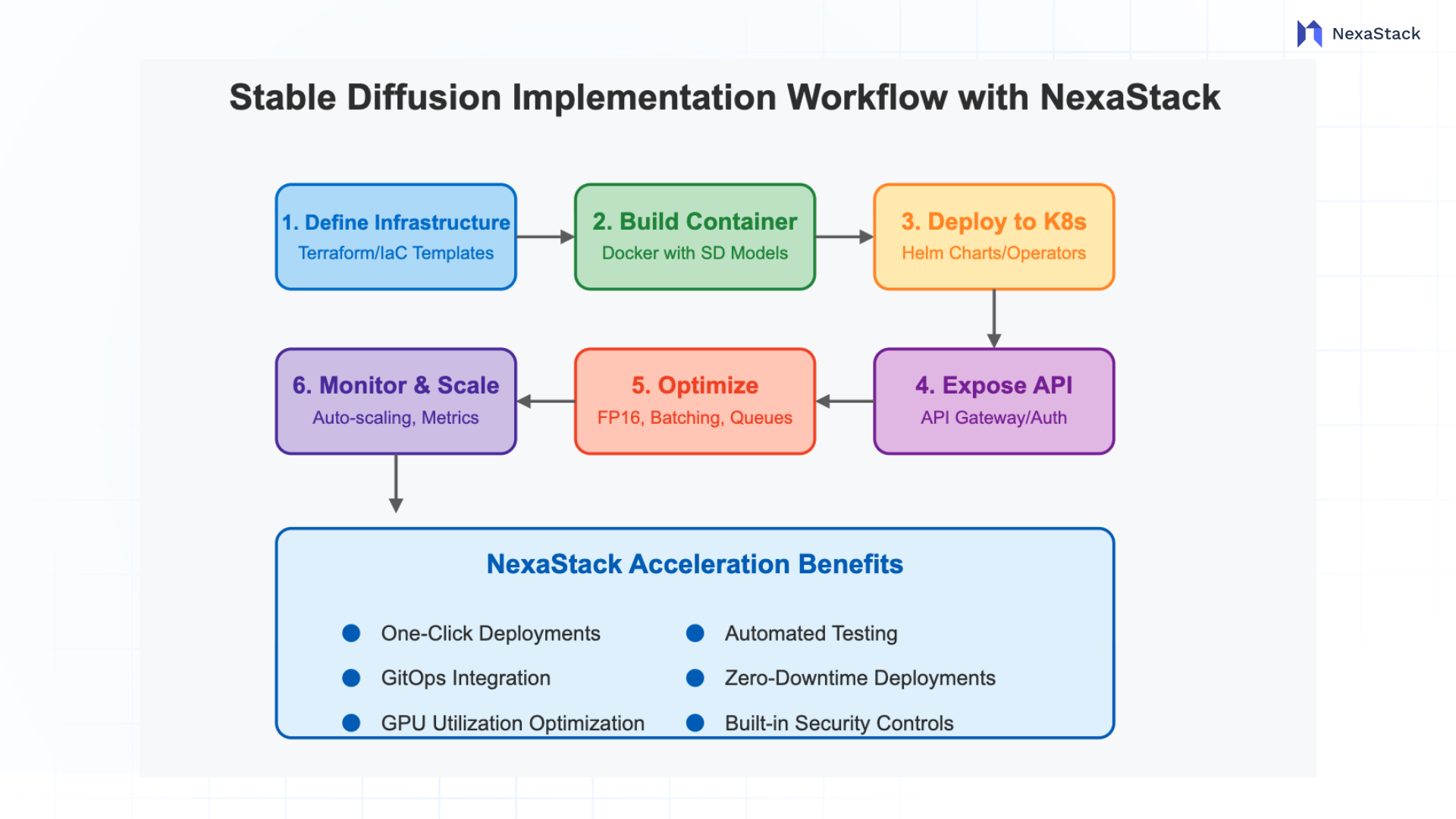

Traditional Stable Diffusion deployment requires complex technical steps, but with NexaStack, this complexity is managed for you:

- Define Infrastructure as Code: While you'd typically have to create Terraform or CloudFormation templates manually, NexaStack provides pre-built, production-ready templates that automatically deploy optimised GPU node types, cluster networking, and storage volumes with a single command.

- Build and Publish Container: Instead of spending weeks packaging inference code, Diffusers, CUDA libraries, and custom modules yourself, NexaStack's container registry includes pre-configured images with the latest optimisations, which you can customize through a simple configuration interface.

- Deploy to Kubernetes: Rather than learning Helm charts or writing custom operators, NexaStack's platform automates Kubernetes deployments with GPU device plugins and auto-mounting model volumes, turning weeks of DevOps work into minutes of configuration.

- Securely Expose the API: Rather than configuring API gateways and authentication by hand, you let NexaStack handle this automatically. It provides secure API endpoints with built-in API key management, IAM integration for RBAC, and configurable rate limits out of the box.
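For readers who want to see what the last step involves when done by hand, the sketch below combines an API key check with a per-key token-bucket rate limiter. It is a minimal, framework-independent illustration rather than NexaStack's gateway; `ApiGate`, `TokenBucket`, and the example key are hypothetical names.

```python
import hmac
import time


class TokenBucket:
    """Allows bursts up to `capacity`, refilled at `rate_per_sec` tokens/second."""

    def __init__(self, rate_per_sec, capacity, clock=time.monotonic):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.updated = clock()

    def allow(self):
        now = self.clock()
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class ApiGate:
    """Returns an HTTP-style status for a request: 401, 429, or 200."""

    def __init__(self, valid_keys, rate_per_sec=1.0, burst=5, clock=time.monotonic):
        self.valid_keys = [key.encode() for key in valid_keys]
        self.rate_per_sec = rate_per_sec
        self.burst = burst
        self.clock = clock
        self.buckets = {}

    def check(self, api_key):
        candidate = api_key.encode()
        # compare_digest avoids leaking key contents through timing differences.
        if not any(hmac.compare_digest(candidate, key) for key in self.valid_keys):
            return 401
        if api_key not in self.buckets:
            self.buckets[api_key] = TokenBucket(self.rate_per_sec, self.burst, self.clock)
        return 200 if self.buckets[api_key].allow() else 429


gate = ApiGate({"demo-key"}, rate_per_sec=1.0, burst=2)
print(gate.check("wrong-key"))  # 401
print(gate.check("demo-key"))   # 200
```

In practice the gate would sit in front of the inference endpoint as middleware, and keys would be stored hashed in a secrets manager rather than in memory.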

Figure 2: SD Implementation

Configuration Options for Optimization

Scaling Considerations

NexaStack's Deployment Methodology

NexaStack's Unified Inference Platform streamlines the implementation process with:

Customization Opportunities

NexaStack provides consulting on data pipeline design, continuous retraining and building custom CI/CD for model promotion. Our customisation services include:
