Technical Requirements: Building the Foundation for Real-Time Inference

Moving to real-time ML inference requires clear technical requirements for low latency and high throughput.

Data Ingestion and Processing:

- Streaming Data Pipelines: Technologies such as Apache Kafka (for transporting event streams) and Apache Flink (for processing them) handle continuous data streams.

- Feature Engineering: Feature transformation and extraction turn incoming data into useful, up-to-date model inputs.
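Streaming feature engineering often means aggregating over a time window. Stream processors such as Flink provide windowed aggregations natively; the plain-Python sketch below only illustrates the idea of a sliding-window feature (rolling count and mean). It assumes events arrive in timestamp order, and the class name is hypothetical.

```python
from collections import deque


class SlidingWindowFeature:
    """Rolling count and mean of event values over the last `window_s` seconds."""

    def __init__(self, window_s: float):
        self.window_s = window_s
        self.events = deque()  # (timestamp, value) pairs, oldest first
        self.total = 0.0

    def _evict(self, now: float) -> None:
        # Drop events that have fallen out of the window.
        while self.events and self.events[0][0] <= now - self.window_s:
            _, value = self.events.popleft()
            self.total -= value

    def update(self, ts: float, value: float) -> dict:
        # Assumes timestamps are non-decreasing (in-order events).
        self.events.append((ts, value))
        self.total += value
        self._evict(ts)
        count = len(self.events)
        return {"count": count, "mean": self.total / count}


# Example: transaction amounts for one user with a 60-second window.
feature = SlidingWindowFeature(window_s=60)
feature.update(0, 10.0)
feature.update(30, 20.0)
print(feature.update(75, 30.0))  # the event at t=0 has expired by t=75
```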

Model Deployment and Serving:

- Model Serving: Models are served with optimised serving tools such as TensorFlow Serving or NVIDIA Triton Inference Server, which are built to handle high volumes of concurrent requests efficiently.

- Latency Reduction: Techniques such as dynamic request batching and asynchronous inference increase throughput while keeping per-request latency within bounds.
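To make the batching idea concrete, here is a minimal asyncio sketch of dynamic micro-batching: concurrent callers enqueue requests, and a single worker waits at most `max_wait_s` before sending up to `max_batch_size` inputs to the model in one call. Triton offers a built-in dynamic batcher; this is not its implementation, and `MicroBatcher` and the stand-in model are hypothetical.

```python
import asyncio


class MicroBatcher:
    """Groups concurrent requests into small batches for a single model call."""

    def __init__(self, predict_batch, max_batch_size=8, max_wait_s=0.005):
        self.predict_batch = predict_batch  # callable: list of inputs -> list of outputs
        self.max_batch_size = max_batch_size
        self.max_wait_s = max_wait_s  # extra latency each batch may add
        self.queue = asyncio.Queue()

    async def predict(self, x):
        # Each caller awaits a future that is resolved when its batch completes.
        fut = asyncio.get_running_loop().create_future()
        await self.queue.put((x, fut))
        return await fut

    async def run(self):
        while True:
            batch = [await self.queue.get()]  # block until the first request arrives
            await asyncio.sleep(self.max_wait_s)  # let concurrent requests queue up
            while len(batch) < self.max_batch_size and not self.queue.empty():
                batch.append(self.queue.get_nowait())
            outputs = self.predict_batch([x for x, _ in batch])
            for (_, fut), y in zip(batch, outputs):
                if not fut.done():  # skip callers that have given up
                    fut.set_result(y)


async def main():
    batcher = MicroBatcher(lambda xs: [x * 2 for x in xs])  # stand-in for a real model
    worker = asyncio.create_task(batcher.run())
    results = await asyncio.gather(*(batcher.predict(i) for i in range(20)))
    worker.cancel()
    return results


print(asyncio.run(main()))
```

The design trades a few milliseconds of queueing for fewer, larger model calls, which usually raises accelerator utilisation; `max_wait_s` caps how much latency that trade can cost.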

Scalability and Fault Tolerance:

- Horizontal Scaling: Distributed systems must be designed to scale horizontally to handle growing data volumes and processing requirements.

- Fault Recovery: A fault recovery system with data replication and automatic failover is key to maintaining reliability and availability.
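Automatic failover can be illustrated at the client level: retry transient errors on one replica with exponential backoff, then move on to the next replica. This is a sketch under simple assumptions (replicas are plain callables listed in priority order, and `ReplicaUnavailable` is a hypothetical error type), not a substitute for infrastructure-level failover.

```python
import time


class ReplicaUnavailable(Exception):
    """Raised by a replica that cannot serve the request (e.g. node or region outage)."""


def predict_with_failover(replicas, request, retries_per_replica=2, backoff_s=0.01):
    """Try replicas in priority order, retrying transient errors before failing over."""
    errors = []
    for replica in replicas:
        for attempt in range(retries_per_replica):
            try:
                return replica(request)
            except ReplicaUnavailable as exc:
                errors.append(f"{replica.__name__} attempt {attempt + 1}: {exc}")
                if attempt + 1 < retries_per_replica:
                    time.sleep(backoff_s * 2 ** attempt)  # exponential backoff
    raise RuntimeError("all replicas failed: " + "; ".join(errors))


def primary(request):
    raise ReplicaUnavailable("region down")  # simulated outage


def secondary(request):
    return {"user_id": request["user_id"], "score": 0.9, "served_by": "secondary"}


print(predict_with_failover([primary, secondary], {"user_id": 42}))
```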

Monitoring and Maintenance:

- Continuously monitor real-time performance metrics, including latency, throughput, and accuracy, to detect and resolve problems quickly.

- To enable fast responses, the system must log all activity and trigger alerts on key performance indicators and system errors.
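A common alerting rule watches tail latency rather than the average. The sketch below keeps the most recent latencies and logs a warning when the 95th percentile exceeds a latency SLO. The class name, window size, and 20-sample minimum are illustrative assumptions; production systems typically delegate this to a metrics stack.

```python
import logging
import statistics
from collections import deque

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("inference-monitor")


class LatencyMonitor:
    """Keeps the last `window` latencies and alerts when p95 exceeds the SLO."""

    def __init__(self, slo_ms: float, window: int = 1000):
        self.slo_ms = slo_ms
        self.samples = deque(maxlen=window)  # oldest samples drop off automatically

    def record(self, latency_ms: float) -> bool:
        """Record one request latency; return True if an alert fired."""
        self.samples.append(latency_ms)
        if len(self.samples) < 20:
            return False  # too few samples for a stable percentile
        p95 = statistics.quantiles(self.samples, n=20)[-1]  # 19th of 19 cut points
        if p95 > self.slo_ms:
            log.warning("p95 latency %.1f ms exceeds SLO %.1f ms", p95, self.slo_ms)
            return True
        return False


monitor = LatencyMonitor(slo_ms=50)
for latency in [12.0] * 90 + [180.0] * 10:
    monitor.record(latency)
```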

Implementation Strategy: Transitioning to Real-Time ML Inference

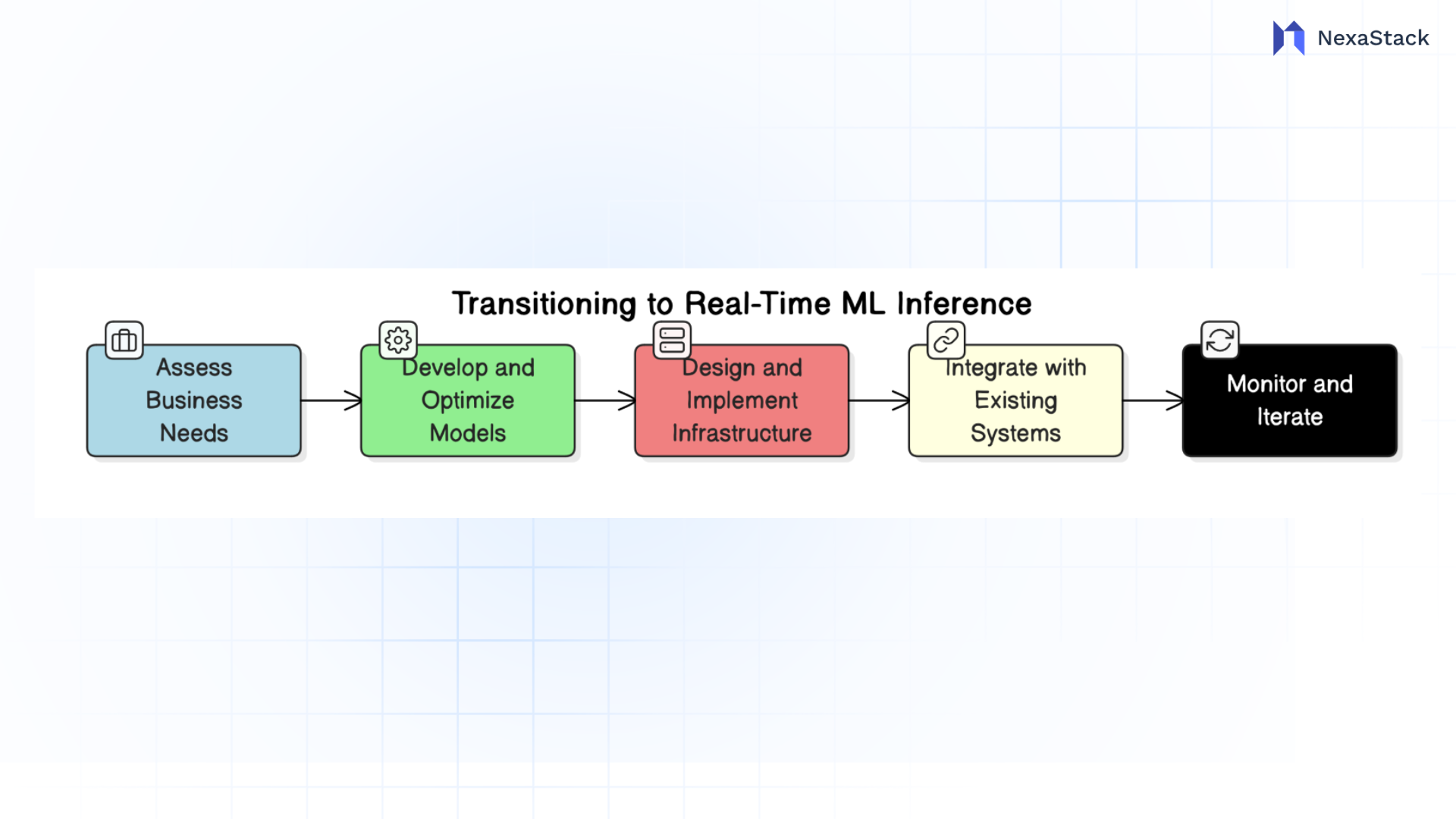

Fig 1: Real-Time ML Inference

A well-organised method is the foundation for enabling real-time inference on ML platforms.

Step 1: Assess Business Needs and Objectives

- Identify business processes that would benefit from real-time inference by evaluating time-sensitive operational requirements and the potential return on investment.

- Set specific targets, such as reducing response times, improving prediction quality, and improving the user experience.

Step 2: Develop and Optimise Models for Real-Time Use

- Select Appropriate Algorithms: Choose models whose complexity allows inference to complete within the required latency budget.

- Compress Large Models: Techniques such as quantisation and pruning shrink model size and reduce inference time, usually with only a marginal loss of accuracy.
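As an illustration of the quantisation arithmetic only, the sketch below applies symmetric, per-tensor int8 post-training quantisation in plain Python: each weight is stored as an integer `q` in [-127, 127] with one shared scale, so `w ≈ scale * q`. Toolchains such as TensorFlow Lite or PyTorch add per-channel scales, calibration, and integer kernels; the function names here are hypothetical.

```python
def quantise_int8(weights):
    """Symmetric per-tensor int8 quantisation: w ≈ scale * q, with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantise(q, scale):
    """Map int8 codes back to approximate float weights."""
    return [scale * v for v in q]


weights = [0.42, -1.27, 0.003, 0.9]
q, scale = quantise_int8(weights)
approx = dequantise(q, scale)
max_err = max(abs(a - w) for a, w in zip(approx, weights))
print(q, scale, max_err)
```

Storing one byte per weight instead of four cuts the memory footprint by roughly 4x, and the rounding error per weight is bounded by half the scale, which is why the accuracy loss is often marginal.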

Step 3: Design and Implement the Infrastructure

- Data Pipelines: Build pipelines that ingest, process, and deliver data to the real-time serving system.

- Deployment Environment: Use Docker for packaging and Kubernetes for orchestration to manage model deployment and scale operations.

Step 4: Integrate with Existing Systems

- Ensure that the new real-time inference functions integrate with existing data sources, storage systems, and application interfaces.

- Provide synchronisation mechanisms so that real-time processing can run alongside existing batch processing.

Step 5: Monitor, Evaluate, and Iterate

- Track performance continuously in production to identify improvements to model accuracy and system performance.

- Design feedback loops that collect and act on feedback to progressively improve models and system components.

Performance Optimisation: Ensuring Efficiency in Real-Time Inference

Meeting real-time demands requires deliberate performance optimisation at the model, infrastructure, and data-handling levels.

Model-Level Optimisation:

- Model Compression: Apply techniques such as quantisation to reduce model size and increase inference speed.

- Inference-Friendly Architectures: Design models, especially neural networks, with architectures that are efficient at inference time.

Infrastructure Optimisation:

- Hardware Acceleration: Use GPUs, TPUs, or other specialised hardware to accelerate computation and reduce latency.

- Edge Computing: Deploy models closer to the data sources to reduce latency and bandwidth consumption.

Data Handling Optimisation:

- Efficient Serialisation: Use compact data formats to minimise both transmission time and parsing time.

- Parallel Processing: Process concurrent data streams in parallel.
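The payoff of a compact format is easy to see by encoding the same feature vector as JSON text and as a fixed binary layout. The sketch below uses Python's standard `struct` module with an assumed layout (a 4-byte little-endian count followed by float32 values); in practice, schema-based formats such as Protocol Buffers or Apache Avro play this role. The values are chosen to be exactly representable in float32 so the round trip is lossless.

```python
import json
import struct

# A hypothetical feature vector sent to the inference service.
features = [0.125, 3.5, -2.0, 42.0, 0.0078125, 1.0, 7.25, -0.5]

as_json = json.dumps({"features": features}).encode("utf-8")
# Assumed binary layout: uint32 count, then float32 values, all little-endian.
as_binary = struct.pack(f"<I{len(features)}f", len(features), *features)


def decode_binary(payload: bytes) -> list:
    """Parse the layout above back into a list of floats."""
    (count,) = struct.unpack_from("<I", payload, 0)
    return list(struct.unpack_from(f"<{count}f", payload, 4))


print(len(as_json), len(as_binary))
```

Note that float32 halves the size of float64 but loses precision for values that are not exactly representable, which is an accuracy trade-off to validate per model.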

Scaling Framework: Architecting for Growth and Resilience

Real-time inference services are dynamic: their load fluctuates with the time of day and around specific business events. A robust, adaptive architecture is therefore crucial to keep the system resilient and flexible.

Key Principles of a Scalable Real-Time ML Framework

1. Horizontal Scaling

- Design services to scale horizontally (adding instances) rather than vertically (adding resources to a single machine).

- Use a container orchestration system such as Kubernetes to create new inference pods automatically based on CPU or GPU utilisation or incoming request load.
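Kubernetes' Horizontal Pod Autoscaler documents its core scaling rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipping changes while the ratio stays within a tolerance (0.1 by default). The sketch below reproduces only that rule with min/max clamping; it ignores stabilisation windows, pod readiness, and multi-metric handling, and the function name is hypothetical.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=20, tolerance=0.1):
    """Replica count following the HPA scaling ratio, clamped to [min, max]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: do not scale
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 pods at 90% GPU utilisation with a 60% target -> scale out to 6 pods.
print(desired_replicas(4, 90, 60))
```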

2. Stateless Microservices

- Separate inference from state management, so that state handling can evolve independently or be reused by other projects.

- Use stateless, API-based inference services so that instances can be replicated easily and load-balanced flexibly.

3. Dynamic Resource Allocation

- Use serverless or function-as-a-service (FaaS) platforms, such as AWS Lambda or Google Cloud Functions, for suitable real-time models.

- Resource schedulers help prioritise important workloads and, in resource-constrained environments, make it easier to reschedule tasks to improve overall throughput.
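Workload prioritisation can be sketched as a priority queue: when capacity is limited, the most important jobs are dispatched first and the rest wait for the next round. This is an illustrative model only (real schedulers, such as Kubernetes' priority classes, also handle preemption and fairness); the class and job names are hypothetical.

```python
import heapq
import itertools


class PriorityScheduler:
    """Dispatches queued inference jobs by priority (lower number = more important)."""

    def __init__(self, capacity: int):
        self.capacity = capacity  # jobs that fit on the available hardware per round
        self._heap = []
        self._counter = itertools.count()  # FIFO tie-breaker within a priority level

    def submit(self, job_name: str, priority: int) -> None:
        heapq.heappush(self._heap, (priority, next(self._counter), job_name))

    def next_round(self) -> list:
        """Pop up to `capacity` jobs; the rest wait for the next round."""
        batch = []
        while self._heap and len(batch) < self.capacity:
            _, _, job_name = heapq.heappop(self._heap)
            batch.append(job_name)
        return batch


sched = PriorityScheduler(capacity=2)
sched.submit("nightly-report-scoring", priority=5)
sched.submit("fraud-check", priority=0)
sched.submit("recommendations", priority=1)
print(sched.next_round())
print(sched.next_round())
```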

4. Feature Store Synchronisation

- Tools such as Feast or Hopsworks keep the online (real-time) and offline feature stores synchronised.

- Real-time features must be served with low latency and reflect the latest state of the system.
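The freshness requirement can be made concrete with a toy in-memory online store: each row carries an event timestamp, older writes never overwrite newer ones, and reads reject values older than a time-to-live (TTL). This is not the Feast or Hopsworks API; the class, TTL, and feature names are hypothetical, and the clock is injectable so the behaviour is easy to test.

```python
import time


class OnlineFeatureStore:
    """Toy in-memory online store that rejects features older than a TTL."""

    def __init__(self, ttl_s: float, clock=time.time):
        self.ttl_s = ttl_s
        self.clock = clock
        self._rows = {}  # entity_id -> (event_timestamp, features)

    def write(self, entity_id, features, event_ts):
        current = self._rows.get(entity_id)
        # Keep the newest value so a late batch backfill cannot overwrite fresher stream data.
        if current is None or event_ts >= current[0]:
            self._rows[entity_id] = (event_ts, features)

    def read(self, entity_id):
        row = self._rows.get(entity_id)
        if row is None or self.clock() - row[0] > self.ttl_s:
            return None  # missing or stale: the caller should fall back to a default
        return row[1]


now = [1000.0]
store = OnlineFeatureStore(ttl_s=300, clock=lambda: now[0])
store.write("user:42", {"txn_count_1h": 3}, event_ts=990.0)  # from the stream
store.write("user:42", {"txn_count_1h": 1}, event_ts=700.0)  # older batch value, ignored
print(store.read("user:42"))
now[0] = 1400.0
print(store.read("user:42"))  # now older than the TTL
```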

5. Multi-Model & Multi-Tenant Serving

- Use NVIDIA Triton, Seldon Core, or KServe to host multiple models per node and to optimise batching, model routing, and versioning.
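Routing and versioning in a multi-model server boil down to looking up a model by name and version. The sketch below is a hypothetical in-process registry that defaults to the highest registered version when none is requested, loosely mirroring how serving tools route by model name and version; it is not the API of Triton, Seldon Core, or KServe, and the model functions are stand-ins.

```python
class ModelRegistry:
    """Hosts several models and versions in one process and routes requests to them."""

    def __init__(self):
        self._models = {}  # name -> {version: predict_fn}

    def register(self, name, version, predict_fn):
        self._models.setdefault(name, {})[version] = predict_fn

    def predict(self, name, inputs, version=None):
        versions = self._models[name]
        # Default to the highest registered version when none is requested.
        chosen = version if version is not None else max(versions)
        return chosen, versions[chosen](inputs)


registry = ModelRegistry()
registry.register("churn", 1, lambda xs: [0.5 for _ in xs])
registry.register("churn", 2, lambda xs: [min(1.0, x / 10) for x in xs])
registry.register("fraud", 1, lambda xs: [x > 100 for x in xs])
print(registry.predict("churn", [3, 12]))
print(registry.predict("churn", [3], version=1))
```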

6. Edge vs. Cloud Distribution

- Distribute models strategically across the cloud, the edge, and intermediate tiers according to latency and connectivity requirements.

- Process time-critical data in real time on IoT edge nodes or remote devices, and meet the remaining compute demand in the cloud.
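The placement decision can be expressed as a small routing rule per request: use the cloud (where larger models can run) only when it is reachable and fits the latency budget, otherwise run on the edge. The latency figures below are hypothetical placeholders, not measurements, and a real policy would also weigh cost, model accuracy, and data-residency constraints.

```python
# Hypothetical per-tier latencies (network round trip plus inference time).
EDGE_LATENCY_MS = 8
CLOUD_LATENCY_MS = 120


def choose_target(latency_budget_ms: float, connected: bool) -> str:
    """Pick where to run inference for one request."""
    # The cloud can host larger models, so prefer it when it is reachable and fast enough.
    if connected and CLOUD_LATENCY_MS <= latency_budget_ms:
        return "cloud"
    # Otherwise run on the edge node (also the only option when offline).
    return "edge"


print(choose_target(latency_budget_ms=20, connected=True))   # e.g. a machine safety stop
print(choose_target(latency_budget_ms=500, connected=True))  # e.g. a maintenance forecast
print(choose_target(latency_budget_ms=500, connected=False))
```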

7. High Availability and Disaster Recovery

- Use multi-region architectures that can fail over to another region automatically.

- Use load balancers and traffic-routing layers such as the Envoy proxy or the Istio service mesh to roll out updates without downtime and to maintain a highly redundant model-serving architecture.
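Zero-downtime rollouts typically shift a small share of traffic to a new model version and increase it as the version proves healthy. Istio (VirtualService route weights) and Envoy (weighted clusters) express this declaratively; the sketch below only illustrates the underlying weighted-routing logic in Python, with hypothetical backend names and a fixed seed for reproducibility.

```python
import random
from collections import Counter


def make_router(weights, seed=None):
    """Return a function that picks a backend according to relative weights."""
    rng = random.Random(seed)
    backends = list(weights)
    shares = [weights[b] for b in backends]

    def route():
        return rng.choices(backends, weights=shares, k=1)[0]

    return route


# Canary: send about 10% of traffic to the new model version.
route = make_router({"model-v1": 90, "model-v2": 10}, seed=7)
counts = Counter(route() for _ in range(10_000))
print(counts)
```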

ROI Measurement: Quantifying Impact and Justifying Investment

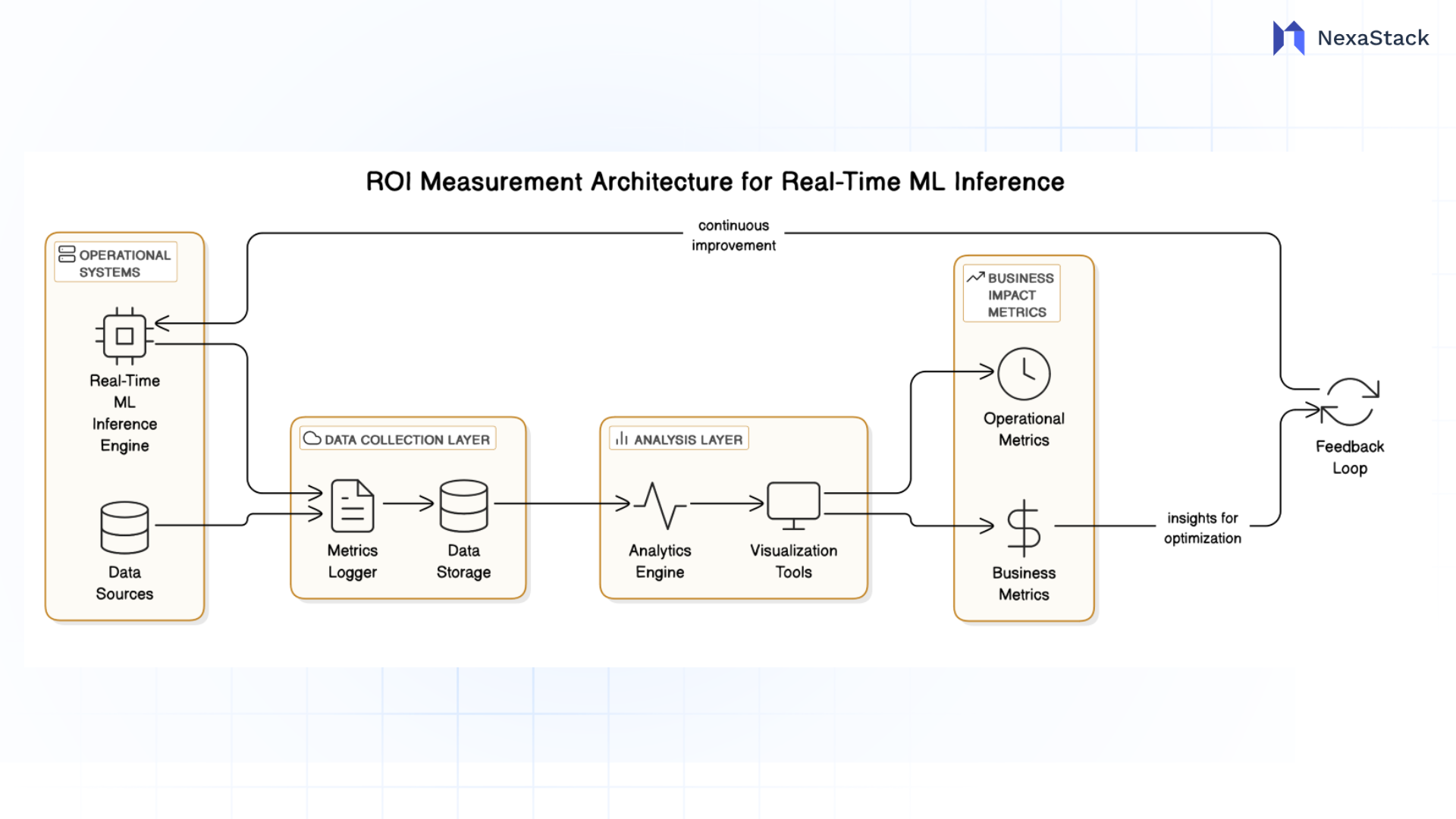

Figure 2: ROI Measurement

However technically innovative real-time inference may be, it delivers its full value only when it produces tangible business results. A systematic ROI model is therefore needed to justify the investment.

Operational Metrics (Efficiency & Reliability)

- Uptime and Availability: The proportion of time during which the service is fully operational.

- Request Throughput (RPS/QPS): The number of inference requests the system serves per second.

- Errors: Tracking of timeouts, model failures, and incorrect predictions.
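These operational metrics are simple ratios over a measurement window. The sketch below computes them from raw counters; the function name and the 30-day figures are hypothetical inputs chosen for illustration, not benchmarks.

```python
def operational_metrics(window_s, total_requests, failed_requests, downtime_s):
    """Summarise availability, throughput, and error rate over one window."""
    return {
        "availability_pct": 100 * (window_s - downtime_s) / window_s,
        "throughput_rps": total_requests / window_s,
        "error_rate_pct": 100 * failed_requests / total_requests,
    }


# Hypothetical 30-day window with 21.6 minutes of downtime.
month_s = 30 * 24 * 3600
print(operational_metrics(month_s, total_requests=1_296_000_000,
                          failed_requests=648_000, downtime_s=1296))
```

With these inputs, availability works out to 99.95%, throughput to 500 requests per second, and the error rate to 0.05%.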

Business Impact Metrics

- Sales Improvements: Real-time recommendations enable up-selling and cross-selling of related products, and segmentation can target high-value offers to the right customers.

- Customer Experience: Lower bounce rates, higher engagement, and an improved Net Promoter Score (NPS).

- Risk Mitigation: Reduction in fraud, system breakdowns, and safety incidents.

- Cost Reduction: Lower operating costs through automating manual tasks, fewer SLA violations, and more efficient resource usage.

Methodologies for ROI Attribution

- A/B Testing: Directly measure the benefit of real-time inference by comparing it against batch inference as the baseline.

- Incremental Lift Modelling: Isolate the contribution of real-time inference through statistical analysis.

- Benchmarking: Compare performance against historical baselines and against other businesses.
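For an A/B test between batch and real-time inference, the core calculation is the relative lift in a conversion rate plus a significance check. The sketch below uses a standard two-proportion z-test with the normal approximation (two-sided p-value via `math.erfc`); the experiment numbers are hypothetical, and real analyses should also plan sample size and guard against repeated peeking.

```python
import math


def ab_lift(conv_a, n_a, conv_b, n_b):
    """Relative lift of B over A and a two-proportion z-test (normal approximation)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return {"lift_pct": 100 * (p_b - p_a) / p_a, "z": z, "p_value": p_value}


# Hypothetical experiment: A = batch-scored recommendations, B = real-time scoring.
print(ab_lift(conv_a=1000, n_a=50_000, conv_b=1150, n_b=50_000))
```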

Total Cost of Ownership (TCO)

- Account for costs in:

  - Model development and optimisation

  - Infrastructure (cloud compute, GPUs, networking)

  - Maintenance and monitoring

- Weigh these against tangible benefits, such as revenue, cost savings, and user acquisition, and against intangible benefits, such as brand and innovation reputation.
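Once the TCO components and the quantifiable benefits are estimated, the basic ROI calculation is `ROI = (benefits - costs) / costs`. The sketch below applies it to hypothetical annual figures; intangible benefits such as brand reputation are deliberately left out because they resist direct quantification.

```python
def simple_roi(annual_benefits, annual_costs):
    """ROI = (total benefits - total costs) / total costs, as a percentage."""
    total_benefit = sum(annual_benefits.values())
    total_cost = sum(annual_costs.values())
    return {
        "net_benefit": total_benefit - total_cost,
        "roi_pct": 100 * (total_benefit - total_cost) / total_cost,
    }


costs = {  # hypothetical annual TCO components
    "model_development": 150_000,
    "infrastructure": 220_000,
    "maintenance_monitoring": 80_000,
}
benefits = {  # hypothetical tangible benefits only
    "incremental_revenue": 500_000,
    "fraud_losses_avoided": 175_000,
}
print(simple_roi(benefits, costs))
```

Here a total cost of 450,000 against 675,000 in benefits gives a net benefit of 225,000, an ROI of 50%.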

Conclusion: Turning Real-Time ML Into Strategic Advantage

Real-time ML inference is no longer a future trend; it has become a core component of today's businesses. It turns static analytics into dynamic intelligence: preventing fraud before it occurs, personalising content while the user is consuming it, and directing automated processes in real time.

By combining business value assessment, sound technical engineering, modular architectures, performance optimisation, design for elastic growth, and rigorous ROI measurement, ML platforms can evolve into real-time AI systems that create business value.