Vendor Lock-in Risks

Serverless architectures generally carry more vendor lock-in than dedicated ones. Functions often rely on provider-specific event triggers, metadata, and managed services, so moving an AWS Lambda function or an Azure Functions app to another cloud is not as straightforward as migrating a container. For example, an application built on AWS Lambda + API Gateway + DynamoDB + SNS is tightly coupled to AWS primitives. Containers and VMs are more portable across clouds: you can move Docker images between AWS, GCP, or on-prem Kubernetes with minimal code change. Companies wary of lock-in may therefore choose dedicated servers or open-source stacks (Kubernetes, OpenFaaS) instead of proprietary functions.

That said, lock-in exists across the cloud generally. Even with VMs, adjacent managed services (RDS, load balancers, storage) tie you to a vendor. Some organizations mitigate the risk with containers or multi-cloud strategies. Serverless lock-in risk is higher, but it is often justified by the productivity gain. The trade-off is innovation speed versus portability, and the decision should weigh how much an exit strategy and standardization matter to the business.
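One common way to limit function-level lock-in is to keep business logic free of provider types and confine the provider-specific event handling to a thin adapter. The sketch below illustrates the idea in Python. The `Order` type, the `price_with_tax` function, and the 20% tax rate are hypothetical names and values chosen for illustration; the adapter assumes an API Gateway proxy-style event whose `body` field is a JSON string.

```python
import json
from dataclasses import dataclass


@dataclass
class Order:
    # Hypothetical domain type; nothing here depends on a cloud SDK.
    order_id: str
    amount_cents: int


def price_with_tax(order: Order, tax_basis_points: int = 2000) -> int:
    """Provider-neutral core logic, using integer math to avoid float rounding."""
    return order.amount_cents * (10_000 + tax_basis_points) // 10_000


def lambda_handler(event: dict, context: object) -> dict:
    """Thin AWS-specific adapter: unpack the event, call the core, wrap the result."""
    body = json.loads(event["body"])
    order = Order(body["order_id"], int(body["amount_cents"]))
    payload = {"order_id": order.order_id, "total_cents": price_with_tax(order)}
    return {"statusCode": 200, "body": json.dumps(payload)}
```

With this split, moving to another provider or to a container would mostly mean rewriting the adapter, while `price_with_tax` stays unchanged. It does not remove coupling to managed services such as DynamoDB or SNS, which would need their own abstraction.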

Talent and Team Structure

- Serverless: Favors full-stack and product teams. With less ops toil, smaller teams can handle infrastructure. Developers write functions and configure services (often via IaC templates). The role of traditional sysadmins shifts to platform engineers or “back-end devs” who specialize in cloud services. Teams may lean on cloud architects who know the provider’s serverless ecosystem. Skills emphasize event-driven design, distributed systems, and cloud vendor knowledge. This model reduces the need for hands-on server maintenance skills but increases demand for expertise in functions-as-a-service, API integrations, and service orchestration.

- Dedicated Infrastructure: Requires more ops/SRE talent. Sysadmins, network engineers, and DevOps professionals manage servers, clusters, CI/CD pipelines, and monitoring. Developers may have to collaborate closely with ops to deploy code onto the infrastructure. Expertise in Linux administration, virtualization, container orchestration, and networking is critical. Enterprises often have separate Dev and Ops teams; serverless encourages a DevOps culture, while dedicated models can fit both DevOps and more siloed structures.

In summary, serverless can simplify teams by offloading infrastructure work, but it also demands specialists who understand cloud service limits and best practices. Dedicated deployments allow the use of conventional IT skill sets but require more coordination.

How Businesses Use Serverless and Dedicated Infrastructure

Major tech companies themselves use both models:

- Netflix (AWS): Netflix runs virtually all workloads on AWS, including both dedicated instances and serverless functions. In fact, Netflix uses hundreds of thousands of EC2 instances across its infrastructure. It first reached large scale on dedicated VMs (for video streaming, Cassandra, etc.), but also adopted serverless for automation. Netflix’s CTO has discussed using AWS Lambda for event-driven tasks (encoding jobs, backups, instance lifecycle events) to automate operations and reduce manual errors. This hybrid approach uses serverless for internal DevOps automation and large compute tasks, while core services (video delivery, recommendation engine) run on dedicated AWS fleets.

- CyberArk (AWS): CyberArk, an identity security company, re-engineered its developer platform to be serverless on AWS. Using Lambda, API Gateway, and DynamoDB, CyberArk cut feature launch time from 18 weeks to 3 hours. It scaled its development processes with standardized serverless blueprints, enabling daily product releases. This shows how enterprises can innovate rapidly by adopting serverless internally.

- Cloud Providers: All major clouds offer and use serverless. AWS Lambda, Azure Functions, and Google Cloud Functions are core products. Google, for example, has pushed “Cloud Run” (serverless containers) and uses its own cloud services in Google.com and YouTube (although details are proprietary). Microsoft uses Azure Functions in the Office 365 and Teams backend. These providers also offer traditional services, such as Azure Virtual Machines and Google Compute Engine. Each cloud provider promotes serverless (fast prototyping, managed scale) while still supporting VMs for legacy workloads.

- Enterprises: Many large enterprises adopt hybrid models. A bank might keep core banking software on dedicated servers (for strict compliance and legacy reasons) while building new customer-facing apps on serverless platforms for agility. Retailers often use serverless for spiky e-commerce events (Black Friday traffic bursts) and dedicated clusters for steady CRM or POS systems. In data processing, teams run some batch jobs on serverless data pipelines (AWS Glue, Azure Synapse Serverless) and others on reserved clusters.

Overall, the trend is hybrid: using the right tool for each part of the business, as discussed next.

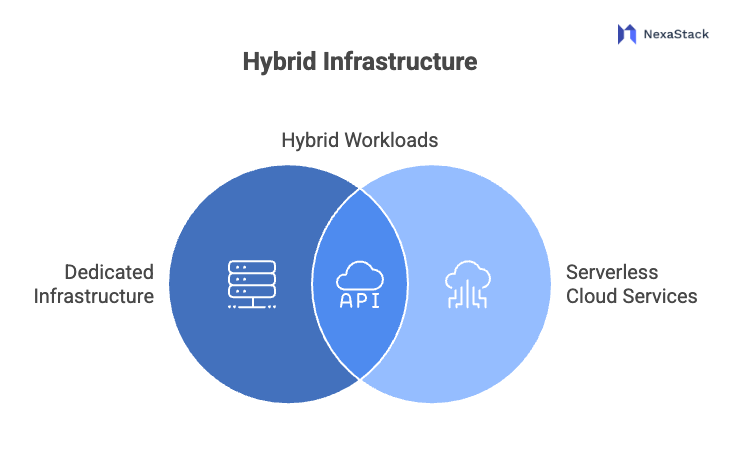

Hybrid Infrastructure: Combining Serverless and Dedicated Models

Figure 2: Hybrid Infrastructure

A hybrid infrastructure model mixes dedicated (on-premises or VM/container-based) and serverless cloud services to leverage the strengths of each. For example, an organization may keep sensitive data on-premises, but expose APIs via a cloud gateway and implement business logic in serverless functions. Or it might run a Kubernetes cluster in one region and burst into serverless containers in the cloud when needed.

A key reason to go hybrid is flexibility. Hybrid solutions allow allocating workloads to the optimal environment. Data that must stay in-country (for regulations) can reside in private infrastructure, while global-facing microservices run serverlessly. This can improve security and compliance, since sensitive assets remain in a controlled network. It also offers cost control: predictable baseline load runs on owned hardware, and variable peak demand offloads to the cloud elastically.
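The cost-control idea above, a fixed baseline on owned hardware with peaks offloaded to the cloud, can be sketched as a simple capacity split. This is a minimal illustration, not a capacity-planning tool: `split_load`, the request-per-hour unit, and the sample demand figures are all hypothetical.

```python
from __future__ import annotations


def split_load(hourly_demand: list[int], baseline_capacity: int) -> tuple[list[int], list[int]]:
    """Split each hour's demand into the part served by fixed capacity
    and the overflow that would burst to pay-per-use cloud services."""
    on_prem = [min(demand, baseline_capacity) for demand in hourly_demand]
    burst = [max(demand - baseline_capacity, 0) for demand in hourly_demand]
    return on_prem, burst


# Hypothetical demand in requests per hour, with a fixed capacity of 100.
demand = [40, 55, 120, 300, 80]
on_prem, burst = split_load(demand, baseline_capacity=100)
print(on_prem)  # [40, 55, 100, 100, 80]
print(burst)    # [0, 0, 20, 200, 0]
```

Only the two peak hours generate cloud spend in this example; the rest of the load stays on capacity that has already been paid for.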

Real-world benefits of hybrid include:

- Resource optimisation: Core workloads can run on-prem in high-utilisation VMs, with overflow handled by cloud functions or containers. The cloud’s pay-per-use nature fits short bursts (e.g. end-of-month reports) without new capital expense.

- Resilience: Hybrid can enable multi-region redundancy, such as an on-prem data centre plus a cloud DR site.

- Innovation and legacy support: Teams can modernise parts of the stack (new serverless APIs) without fully re-architecting old systems (legacy apps keep running on VMs).

Industry surveys highlight that hybrid adopters gain “greater flexibility, scalability, cost-efficiency, security, [and] performance.” They note that hybrid clouds “allow workloads to be deployed in an optimal way.” Companies often start hybrid during cloud migration phases: some services move to the public cloud (or serverless) while others remain on-prem. Solutions like AWS Outposts, Azure Arc, and Google Anthos specifically target hybrid use cases by extending cloud control planes to on-premises hardware.

In practice, a hybrid architecture might look like this: an on-prem Kubernetes cluster (for persistent services) connected via VPN or a dedicated link such as AWS Direct Connect to the public cloud, where a mix of VMs (EC2 instances, Azure VMs) and serverless functions process user requests. Data flows over secure channels, and centralised DevOps tools manage deployment across both environments. Done right, hybrid yields the best of both worlds.

Choosing the Right Model: A Decision Checklist

When deciding between serverless and dedicated infrastructure, consider these key factors:

- Workload Characteristics: Is the application event-driven or does it require always-on computing? Serverless excels at stateless, short-lived tasks (APIs, data transforms, cron jobs). Dedicated VMs/containers suit long-running, stateful, or custom environments (e.g. CPU/GPU-intensive ML jobs, legacy apps).

- Traffic Pattern: Do you have unpredictable, spiky traffic or relatively steady load? If burstiness is high, serverless avoids over-provisioning. For constant heavy traffic, dedicated reserved instances might be more economical.

- Performance & Latency: Are ultra-low, consistent response times critical? If yes, dedicated servers (with tuning and reserved capacity) minimize cold-start jitter. If you can tolerate occasional cold starts (or can pre-warm functions), the serverless trade-offs may be acceptable.

- Development Speed and Time-to-Market: Do you need to launch quickly and iterate? Serverless generally wins here due to minimal ops work and built-in services.

- Operational Overhead: Do you have (or want) a large DevOps team? If you prefer smaller teams and less ops management, serverless reduces the maintenance burden.

- Security/Compliance Requirements: Must data stay on-prem or in particular regions? If strict compliance dictates infrastructure (e.g. healthcare, finance), dedicated or hybrid may be necessary. Otherwise, serverless providers offer many compliance certifications out of the box.

- Cost and Budget Model: Do you have capital to invest upfront, or do you prefer OpEx? If you want pay-as-you-go with little initial cost, serverless is appealing. For organizations optimizing for predictable budgets, dedicated infrastructure (or reserved cloud instances) offers CapEx/OpEx choices.

- Vendor Lock-In Tolerance: Are you aiming for cloud-agnostic portability? Dedicated containers/VMs (especially using open-source orchestration) can be moved across clouds more easily than proprietary serverless functions. Weigh the productivity gains of serverless against this lock-in risk.

- Team Skills and Culture: What is your team experienced with? Do you have cloud developers comfortable with APIs, or administrators skilled in networking and OS management? Align the choice with your available talent and training plans.

- Hybrid/Gradual Migration Needs: Do you already have on-prem systems or contractual use of a private cloud? A hybrid approach might let you migrate incrementally, using serverless for new modules while legacy systems continue on dedicated servers.

This checklist isn’t exhaustive, but covers most strategic points. Often the answer isn’t fully one or the other – many organizations adopt a mixed strategy, using serverless where it adds the most agility and dedicated resources where control or legacy support is needed. The goal is to map each workload to the model that maximizes value: performance and control versus agility and efficiency.
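The Traffic Pattern and Cost items can be made concrete with a rough break-even estimate: the monthly request volume at which pay-per-use spend equals a fixed dedicated bill. The sketch below is a simplification under stated assumptions. All prices and the dedicated cost are placeholder values rather than quotes from any provider, and it ignores free tiers, data transfer, and operations staffing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerlessPricing:
    # All fields are placeholder values supplied by the caller.
    price_per_million_requests: float
    price_per_gb_second: float
    gb_seconds_per_request: float  # memory (GB) x average duration (s)

    def cost_per_request(self) -> float:
        """Invocation fee plus compute fee for a single request."""
        request_fee = self.price_per_million_requests / 1_000_000
        compute_fee = self.gb_seconds_per_request * self.price_per_gb_second
        return request_fee + compute_fee


def serverless_monthly_cost(requests: int, pricing: ServerlessPricing) -> float:
    """Pay-per-use cost grows linearly with request volume."""
    return requests * pricing.cost_per_request()


def break_even_requests(dedicated_monthly_cost: float, pricing: ServerlessPricing) -> float:
    """Monthly volume above which the fixed dedicated bill is cheaper."""
    return dedicated_monthly_cost / pricing.cost_per_request()


# Hypothetical inputs: 128 MB functions running 100 ms, and a 150/month server.
pricing = ServerlessPricing(
    price_per_million_requests=0.20,
    price_per_gb_second=0.0000166667,
    gb_seconds_per_request=0.125 * 0.1,
)
threshold = break_even_requests(150.0, pricing)
print(f"Break-even at roughly {threshold / 1_000_000:.0f} million requests per month")
```

Below the threshold the pay-per-use model costs less, and above it the fixed bill does. Real comparisons should also account for how many dedicated instances the same volume would actually require.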