As AI adoption accelerates across industries, the demand for scalable, efficient, and reliable deployment infrastructure has never been higher. Kubernetes, the leading container orchestration platform, is emerging as the go-to solution for deploying AI workloads with agility and control. By abstracting the complexities of infrastructure management, Kubernetes empowers teams to focus on model development, experimentation, and inference—without being burdened by operational overhead.

AI workloads often involve resource-intensive tasks such as model training, batch inference, and real-time processing. Kubernetes simplifies these processes by enabling dynamic scaling, efficient GPU scheduling, and seamless workload orchestration across distributed environments. Whether deploying a machine learning model in a staging environment or running production-grade inference services, Kubernetes offers consistent environments, fault tolerance, and automated rollout strategies.

Furthermore, Kubernetes supports integration with popular AI/ML toolchains like Kubeflow, MLflow, Ray, and TensorFlow Serving, making it easier to build end-to-end MLOps pipelines. Teams can automate CI/CD for model training and deployment, monitor performance, and manage versioned models within a unified Kubernetes ecosystem.

Kubernetes also enhances cost efficiency and infrastructure utilisation by dynamically allocating compute resources based on workload demand. This means lower operational costs and faster iteration cycles for AI startups, enterprise data teams, and research labs.

In short, Kubernetes simplifies AI deployment by offering flexibility, scalability, and automation. It transforms complex AI infrastructure into manageable, repeatable, and resilient workflows, paving the way for operationalised AI at scale. Whether building a recommendation engine or deploying a computer vision model, Kubernetes is the foundation for modern, intelligent deployment.

Kubernetes: The AI Agent Orchestrator

What Kubernetes Is and Why It Matters for AI

Kubernetes, often abbreviated K8s, is like a symphony conductor: it orchestrates containers, the lightweight packages that hold your AI agent’s code, dependencies, and runtime. For AI, Kubernetes matters because it automates the messy stuff: deploying, scaling, and managing those containers across servers. Imagine running hundreds of AI agents (say, chatbots or predictive models) without worrying about server crashes or resource hogging. Kubernetes handles that, letting your team focus on building smarter agents.

How It Simplifies Deploying Intelligent Agents

Deploying AI agents without Kubernetes is like herding cats. Each agent needs specific resources, configurations, and monitoring. Kubernetes simplifies this by providing a centralised platform to deploy agents with a few commands. It automatically places agents on the right servers, restarts them if they crash, and ensures they’re always available. For example, an AI agent handling customer queries can be deployed in seconds, with Kubernetes ensuring it’s ready 24/7.

- Declarative Setup: In a YAML file, define your agent’s needs (CPU, memory, replica count, etc.), and Kubernetes continuously works to keep the running state matched to that definition.
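To make the declarative idea concrete, here is a minimal sketch that builds a Deployment manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The agent name `support-agent`, the image on `registry.example.com`, and the CPU and memory figures are illustrative assumptions, not values from any real setup.

```python
import json

def agent_deployment(name, image, replicas=2, cpu="500m", memory="512Mi"):
    """Build a Deployment manifest (as a dict) for a hypothetical agent."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        # Requests guide scheduling; limits cap usage.
                        "resources": {
                            "requests": {"cpu": cpu, "memory": memory},
                            "limits": {"cpu": cpu, "memory": memory},
                        },
                    }]
                },
            },
        },
    }

# Hypothetical agent name and image, used only for illustration.
manifest = agent_deployment(
    "support-agent", "registry.example.com/support-agent:1.0.0"
)
print(json.dumps(manifest, indent=2))
```

Saving the printed JSON to a file and applying it would ask the cluster for two replicas of the agent; the file describes the desired state rather than the steps to reach it.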

Quick Look at Kubernetes in Action for Businesses

Picture a retail company using AI agents to predict inventory needs. With Kubernetes, they deploy these agents across a cluster, scaling up during Black Friday sales and down during slow seasons. A media firm might run agents on Azure Kubernetes Service (AKS) to power real-time content recommendation bots, cutting costs by optimising server use. Kubernetes isn’t just tech; it’s a business enabler that drives efficiency and innovation.

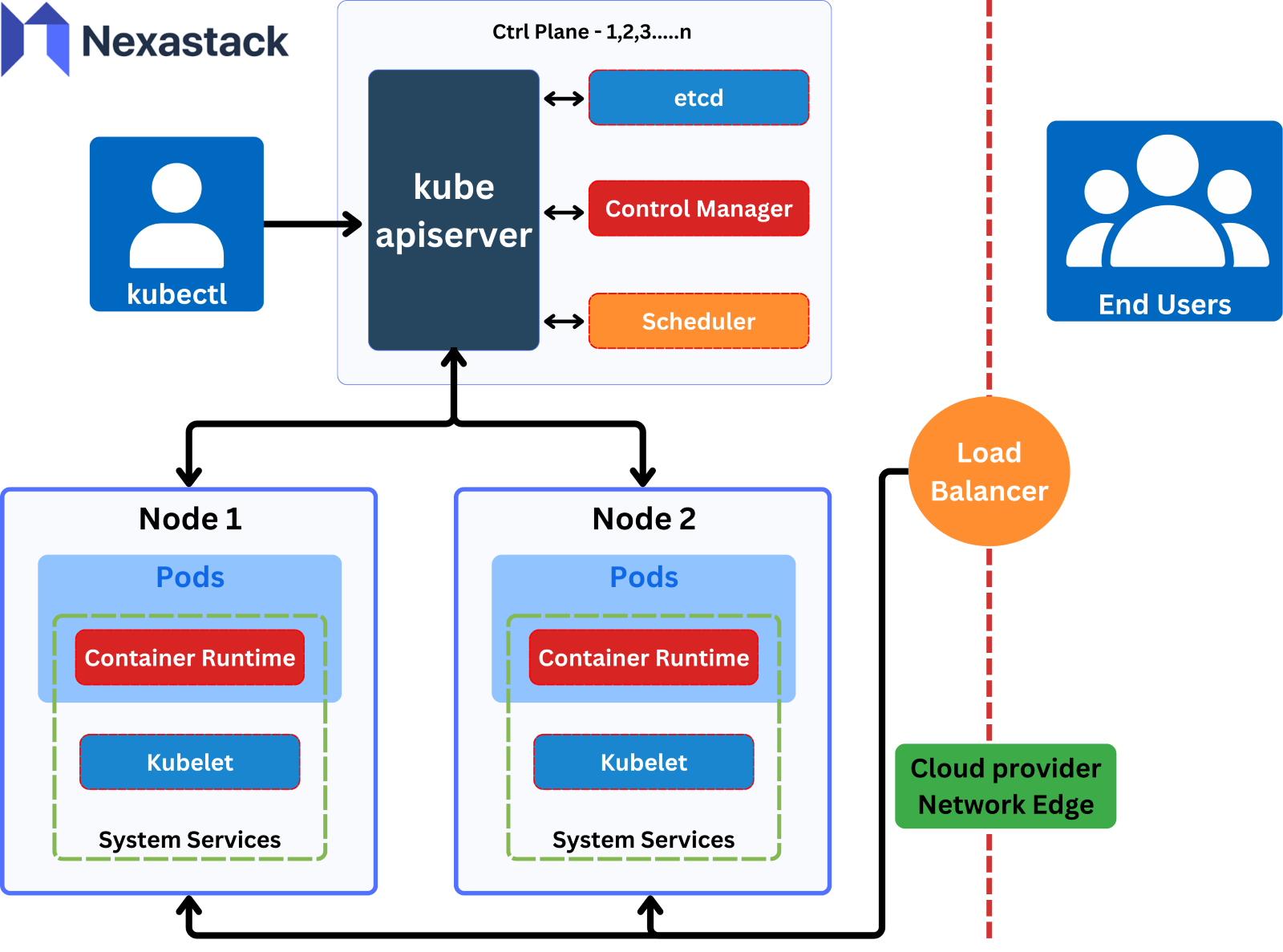

Fig: A Kubernetes cluster orchestrates containerised workloads: etcd stores cluster state, the Controller Manager and Scheduler handle reconciliation and pod placement, and the kube-apiserver enables communication between components. Nodes (1, 2, ..., n) run pods via a container runtime and the kubelet. The cluster is managed with kubectl, integrates with cloud providers, and is reached by end users through a load balancer.

Core Benefits for AI Agent Deployment

Kubernetes reduces the time it takes to move AI agents from development to production. Its automated deployment tools let you push updates—like a new version of a fraud-detection agent—in minutes. Paired with Kubernetes, continuous integration/continuous deployment (CI/CD) pipelines mean your agents stay fresh without manual tinkering.
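As a rough sketch of what pushing an update looks like, the helper below takes an existing Deployment manifest and returns a copy that points at a new image and uses the `RollingUpdate` strategy, so old pods are replaced gradually. The `fraud-agent` name, the image tags, and the `roll_out` helper are hypothetical; in a CI/CD pipeline the resulting manifest would typically be applied by the pipeline itself.

```python
import copy

def roll_out(deployment, new_image, max_surge=1, max_unavailable=0):
    """Return a copy of a Deployment dict that rolls to new_image gradually."""
    updated = copy.deepcopy(deployment)  # leave the original untouched
    updated["spec"]["strategy"] = {
        "type": "RollingUpdate",
        "rollingUpdate": {
            "maxSurge": max_surge,              # extra pods allowed during rollout
            "maxUnavailable": max_unavailable,  # pods allowed to be down
        },
    }
    updated["spec"]["template"]["spec"]["containers"][0]["image"] = new_image
    return updated

# Hypothetical current state of a fraud-detection agent.
current = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "fraud-agent"},
    "spec": {
        "replicas": 3,
        "selector": {"matchLabels": {"app": "fraud-agent"}},
        "template": {
            "metadata": {"labels": {"app": "fraud-agent"}},
            "spec": {"containers": [{
                "name": "fraud-agent",
                "image": "registry.example.com/fraud-agent:1.4.0",
            }]},
        },
    },
}

candidate = roll_out(current, "registry.example.com/fraud-agent:1.5.0")
print(candidate["spec"]["template"]["spec"]["containers"][0]["image"])
```

With `maxUnavailable` set to 0, the rollout only removes an old pod once a new one is ready, which trades a slightly slower rollout for no loss of capacity.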

Ensuring Reliability for Agent Performance

AI agents must be rock-solid, especially for critical tasks like medical diagnostics or financial trading. Kubernetes ensures reliability by monitoring agent health and restarting failed containers instantly. It also distributes agents across multiple servers, so a hardware failure doesn’t bring your system down.
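The health monitoring and spreading described above are configured on the pod spec. The sketch below shows one plausible shape: a liveness probe that triggers restarts, a readiness probe that gates traffic, and a topology spread constraint that prefers placing replicas on different nodes. The `/healthz` and `/ready` paths are assumed endpoints the agent would need to expose, and the agent name and image are hypothetical.

```python
import json

def resilient_pod_spec(name, image, port=8080):
    """Pod spec fragment with health probes and node spreading."""
    labels = {"app": name}
    return {
        "containers": [{
            "name": name,
            "image": image,
            "ports": [{"containerPort": port}],
            # Restart the container after 3 consecutive failed checks.
            "livenessProbe": {
                "httpGet": {"path": "/healthz", "port": port},  # assumed endpoint
                "periodSeconds": 10,
                "failureThreshold": 3,
            },
            # Only send traffic once the agent reports it is ready.
            "readinessProbe": {
                "httpGet": {"path": "/ready", "port": port},  # assumed endpoint
                "periodSeconds": 5,
            },
        }],
        # Prefer spreading replicas evenly across nodes.
        "topologySpreadConstraints": [{
            "maxSkew": 1,
            "topologyKey": "kubernetes.io/hostname",
            "whenUnsatisfiable": "ScheduleAnyway",
            "labelSelector": {"matchLabels": labels},
        }],
    }

spec = resilient_pod_spec(
    "diagnostics-agent", "registry.example.com/diagnostics-agent:2.1.0"
)
print(json.dumps(spec, indent=2))
```

This fragment would sit under `spec.template.spec` in a Deployment whose pod template carries the `app` label that the spread constraint selects on.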

Cost Savings Through Resource Optimization

Running AI agents can get pricey, especially with GPU-heavy workloads. Kubernetes optimises resource use by packing agents efficiently onto servers, reducing waste. For instance, a deployment on AWS EKS can cut cloud costs by scaling resources dynamically, so you pay only for what’s needed. For budget-conscious teams, this translates into a stronger return on AI deployment spend.

Setting Up Kubernetes for AI Success

To deploy AI agents on Kubernetes, you’ll need a cluster (a group of servers), a container runtime such as containerd or CRI-O (both run images built with Docker), and a cloud provider or on-prem setup. Managed services like Google Kubernetes Engine (GKE) or Azure Kubernetes Service (AKS) simplify cluster management. For AI, ensure your cluster supports GPUs for model inference and storage solutions for training data.
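For the GPU requirement, a pod asks for GPUs through an extended resource in its limits. The sketch below assumes NVIDIA GPUs exposed as `nvidia.com/gpu`, which requires the NVIDIA device plugin to be installed on the cluster. The `pool: gpu` node label, the agent name, and the image are hypothetical, and real node labels vary by provider.

```python
import json

def gpu_inference_pod(name, image, gpus=1, node_pool_label=None):
    """Build a Pod manifest that requests whole GPUs for inference."""
    spec = {
        "containers": [{
            "name": name,
            "image": image,
            # GPUs are requested in limits and cannot be fractional.
            "resources": {"limits": {"nvidia.com/gpu": gpus}},
        }],
        "restartPolicy": "Always",
    }
    if node_pool_label:
        # Steer the pod to nodes carrying this (hypothetical) label.
        spec["nodeSelector"] = dict(node_pool_label)
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": spec,
    }

pod = gpu_inference_pod(
    "vision-agent",
    "registry.example.com/vision-agent:0.3.0",
    node_pool_label={"pool": "gpu"},
)
print(json.dumps(pod, indent=2))
```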

Configuring Clusters for AI Workloads

AI workloads are unique—they’re compute-intensive and often need real-time data. Configure your Kubernetes cluster with dedicated node pools for GPU tasks and prioritise low-latency networking. Use namespaces to separate agent types (e.g., recommendation vs. chat agents) and set resource quotas to prevent any single agent from hogging the cluster.
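The namespace and quota setup might look like the following sketch: one namespace per agent type, each with a `ResourceQuota` capping total CPU, memory, GPU, and pod count. The namespace names and all the limits are illustrative assumptions, and the GPU line assumes NVIDIA GPUs exposed as `nvidia.com/gpu`.

```python
import json

def namespace(name):
    """Namespace manifest used to isolate one agent type."""
    return {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": name}}

def resource_quota(ns, cpu, memory, gpus, pods):
    """ResourceQuota capping what all pods in the namespace may request."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{ns}-quota", "namespace": ns},
        "spec": {
            "hard": {
                "requests.cpu": cpu,
                "requests.memory": memory,
                "requests.nvidia.com/gpu": gpus,
                "pods": pods,
            }
        },
    }

# Hypothetical split between recommendation and chat agents.
manifests = []
for ns, gpus in [("recommendation-agents", "2"), ("chat-agents", "0")]:
    manifests.append(namespace(ns))
    manifests.append(resource_quota(ns, cpu="8", memory="32Gi", gpus=gpus, pods="20"))

print(json.dumps(manifests, indent=2))
```

Once a quota covers CPU or memory requests in a namespace, pods there must declare those requests or they are rejected, so it is usually paired with explicit resource settings on every agent.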

Security Must-Haves for AI Agents

AI agents often handle sensitive data, like customer info or proprietary models. Kubernetes offers robust security features, but you need to use them correctly. Enable role-based access control (RBAC) to limit who can manage agents. Use network policies to restrict agent-to-agent communication, and encrypt data in transit and at rest to keep things safe.
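Two of these controls can be sketched as manifests. The first is a `NetworkPolicy` that lets only one named agent call another; the second is a read-only RBAC `Role`. Agent names, the namespace, the port, and the `agent-viewer` role name are hypothetical. Network policies only take effect when the cluster's network plugin enforces them, and a Role grants nothing until a RoleBinding attaches it to a user or service account.

```python
import json

def allow_only(target_app, caller_app, port, namespace):
    """NetworkPolicy: only pods labelled caller_app may reach target_app."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {
            "name": f"allow-{caller_app}-to-{target_app}",
            "namespace": namespace,
        },
        "spec": {
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": {"app": caller_app}}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }

def read_only_role(namespace, name="agent-viewer"):
    """RBAC Role that can inspect Deployments but not change them."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": name, "namespace": namespace},
        "rules": [{
            "apiGroups": ["apps"],
            "resources": ["deployments"],
            "verbs": ["get", "list", "watch"],
        }],
    }

policy = allow_only("personalization-agent", "recommendation-agent", 8080, "agents")
role = read_only_role("agents")
print(json.dumps([policy, role], indent=2))
```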

Scaling AI Agents Effortlessly

AI agents often face unpredictable demand—like a surge in chatbot queries during a product launch. Kubernetes’ Horizontal Pod Autoscaler (HPA) automatically adds or removes agent instances based on CPU or memory usage. This ensures your agents handle spikes without crashing, keeping users happy.
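The autoscaler's core rule is simple enough to sketch: desired replicas equal the current replica count times the ratio of observed to target utilisation, rounded up and clamped to the configured bounds. The function below implements that simplified rule (the real controller also applies a tolerance band and stabilisation windows), and `cpu_hpa` builds a matching `autoscaling/v2` manifest. The `chat-agent` name and all numbers are illustrative.

```python
import math

def desired_replicas(current, current_util, target_util, min_r, max_r):
    """Simplified HPA rule: ceil(current * observed / target), then clamp."""
    raw = math.ceil(current * current_util / target_util)
    return max(min_r, min(max_r, raw))

def cpu_hpa(deployment, min_r, max_r, target_util):
    """HorizontalPodAutoscaler manifest targeting average CPU utilisation."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": f"{deployment}-hpa"},
        "spec": {
            "scaleTargetRef": {
                "apiVersion": "apps/v1",
                "kind": "Deployment",
                "name": deployment,
            },
            "minReplicas": min_r,
            "maxReplicas": max_r,
            "metrics": [{
                "type": "Resource",
                "resource": {
                    "name": "cpu",
                    "target": {
                        "type": "Utilization",
                        "averageUtilization": target_util,
                    },
                },
            }],
        },
    }

hpa = cpu_hpa("chat-agent", min_r=2, max_r=10, target_util=60)

# 4 replicas at 90% CPU against a 60% target: scale out.
print(desired_replicas(4, 90, 60, 2, 10))   # 6
# Quiet period: the floor of 2 replicas applies.
print(desired_replicas(4, 15, 60, 2, 10))   # 2
# Extreme spike: capped at the ceiling of 10.
print(desired_replicas(4, 300, 60, 2, 10))  # 10
```

Utilisation here is measured against each pod's CPU request, so the autoscaler only works as intended when the agent's containers declare requests.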

Load Balancing for Seamless Performance

When multiple AI agents are running, Kubernetes distributes incoming requests evenly across them. This load balancing keeps response times low, even under heavy traffic. For example, a Kubernetes AI agent setup for real-time analytics can process thousands of requests without bottlenecks.
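Inside the cluster, this distribution is usually set up with a Service that selects the agent's pods by label and spreads connections across whichever replicas are ready. A minimal sketch, assuming a hypothetical `analytics-agent` whose pods carry the label `app: analytics-agent` and listen on port 8080:

```python
import json

def agent_service(name, port=80, target_port=8080):
    """ClusterIP Service that fronts every ready pod labelled app=<name>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "ClusterIP",
            # Any ready pod with this label receives a share of traffic.
            "selector": {"app": name},
            "ports": [{
                "protocol": "TCP",
                "port": port,               # port clients connect to
                "targetPort": target_port,  # port the agent listens on
            }],
        },
    }

service = agent_service("analytics-agent")
print(json.dumps(service, indent=2))
```

Other workloads in the same namespace could then reach the agents at `http://analytics-agent`, without knowing how many replicas exist or where they run.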

Handling Multi-Agent Orchestration

Complex AI systems often involve multiple agents working together, like a recommendation agent feeding data to a personalization bot. Kubernetes supports this kind of orchestration by giving each agent a stable Service name for discovery and communication, while tools like Kubeflow can further streamline multi-agent workflows so they operate as a cohesive unit.

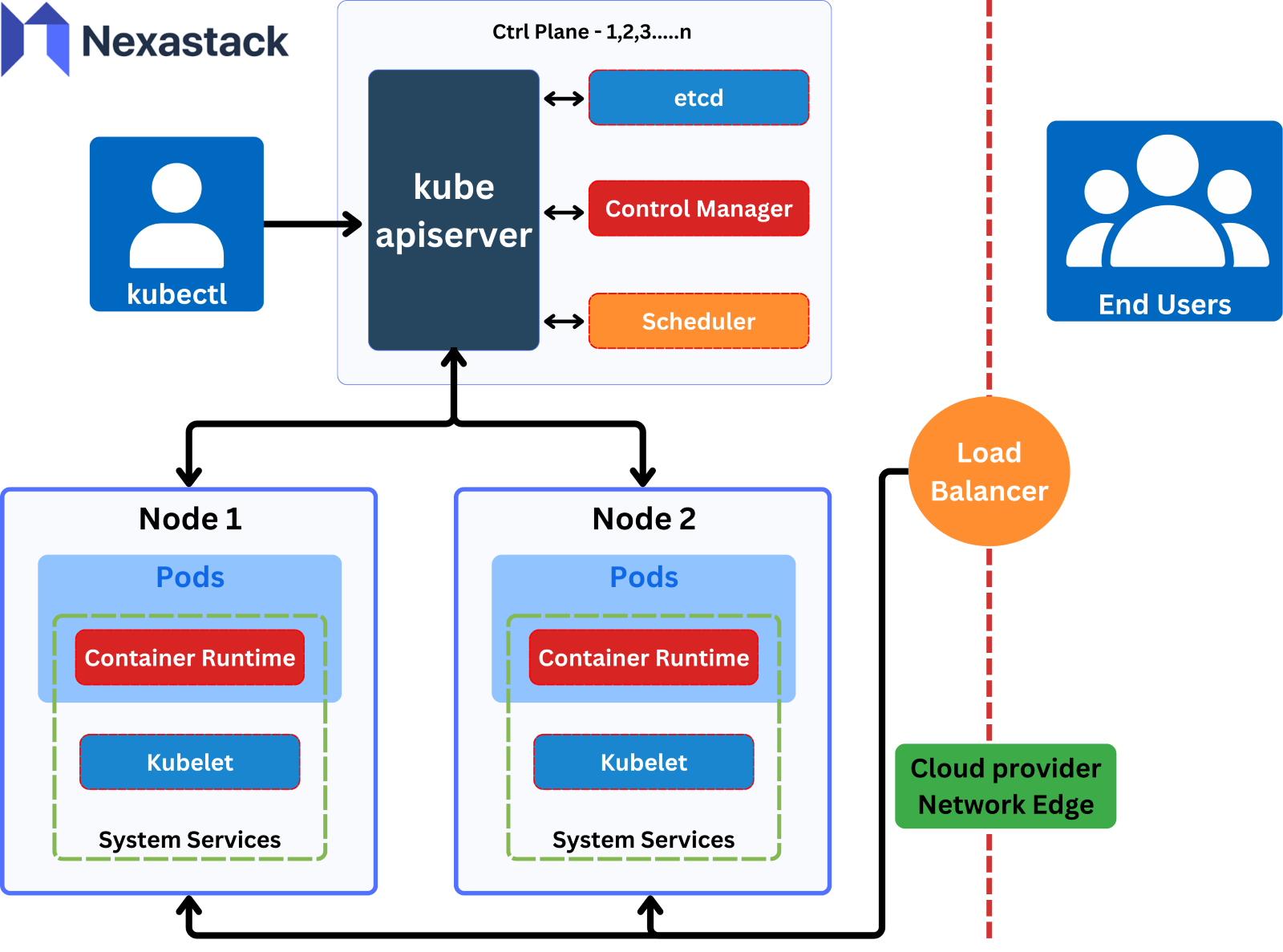

Fig: Various components required for launching your AI agent through Kubernetes.

Governance for AI on Kubernetes

Running AI agents on Kubernetes is powerful, but with great power comes the need to stay legit. Compliance is non-negotiable, especially when agents handle sensitive data like customer info or financial records. Kubernetes helps by offering tools to enforce policies, like role-based access control (RBAC) to limit who can tweak agents. You can also isolate workloads with namespaces, which helps agents running on Azure Kubernetes Service (AKS) meet regulations like GDPR or HIPAA.

Monitoring and Managing Agent Lifecycles

AI agents aren’t “set it and forget it.” They need constant oversight to perform at their best. Kubernetes makes monitoring a breeze with tools like Prometheus, which tracks agent health—think CPU usage or response times. Managing lifecycles means controlling when agents start, update, or retire. For instance, if a chatbot agent misbehaves, Kubernetes can roll it back to a stable version in seconds, keeping users happy.

Best Practices for Version Control

Version control for AI agents is like keeping a recipe book: you must know exactly what’s in each dish. Kubernetes pairs with GitOps tools like Argo CD or Flux to manage agent versions, ensuring you can deploy a new fraud-detection agent without losing the old one. Label every agent clearly and use Helm charts to bundle configurations. This way, your team avoids chaos and can roll back fast if a new version flops.
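One way to label every agent clearly is to use the `app.kubernetes.io/*` label keys that Kubernetes recommends. The sketch below stamps a name, version, and managing tool onto a manifest; the `stamp` helper, the agent name, and the version string are hypothetical.

```python
import copy

def version_labels(name, version, managed_by="Helm"):
    """Recommended Kubernetes label keys identifying an agent release."""
    return {
        "app.kubernetes.io/name": name,
        "app.kubernetes.io/version": version,
        "app.kubernetes.io/managed-by": managed_by,
    }

def stamp(manifest, name, version):
    """Return a copy of the manifest with version labels merged in."""
    labelled = copy.deepcopy(manifest)
    labels = labelled.setdefault("metadata", {}).setdefault("labels", {})
    labels.update(version_labels(name, version))
    return labelled

# Hypothetical manifest for a fraud-detection agent.
base = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "fraud-agent", "labels": {"team": "risk"}},
}
released = stamp(base, "fraud-agent", "1.5.0")
print(released["metadata"]["labels"])
```

With labels like these in place, a selector such as `kubectl get deployments -l app.kubernetes.io/version=1.5.0` shows which agents are running a given release.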

Measuring Impact and ROI

How do you know your AI agents on Kubernetes are crushing it? Track metrics like latency (how fast agents respond), accuracy (are they making the right calls?), and uptime (are they always on?). For example, a recommendation agent on Google Kubernetes Engine (GKE) might be judged by click-through rates. These numbers tell you if your agents are delivering or need a tune-up.
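As a small worked example of these metrics, the sketch below computes median and 95th-percentile latency (using the nearest-rank method), accuracy, and uptime from made-up sample data. All the numbers are invented for illustration; in practice they would come from your monitoring system.

```python
import math

def percentile(values, pct):
    """Nearest-rank percentile: smallest value covering pct% of samples."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]

def ratio(part, whole):
    """Safe fraction, returning 0.0 when there is no data."""
    return part / whole if whole else 0.0

# Invented sample data for one agent.
latencies_ms = [120, 95, 310, 150, 101, 99, 205, 130, 98, 110]
correct_predictions, total_predictions = 940, 1000
minutes_in_month, minutes_down = 30 * 24 * 60, 43

p50 = percentile(latencies_ms, 50)
p95 = percentile(latencies_ms, 95)
accuracy = ratio(correct_predictions, total_predictions)
uptime = ratio(minutes_in_month - minutes_down, minutes_in_month)

print(f"p50 latency: {p50} ms")   # p50 latency: 110 ms
print(f"p95 latency: {p95} ms")   # p95 latency: 310 ms
print(f"accuracy:    {accuracy:.1%}")  # accuracy:    94.0%
print(f"uptime:      {uptime:.2%}")    # uptime:      99.90%
```

The gap between the median and the 95th percentile is worth watching: an agent can look fast on average while a tail of slow responses still frustrates users.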

Calculating Cost-Benefit of Kubernetes Adoption

Kubernetes isn’t free, but its cost-benefit case is often a strong one. Compare cloud bills before and after moving your deployment to AWS EKS; chances are, you’ll see savings from optimised resource use. Then factor in faster rollouts (less dev time) and fewer outages (happier customers). A retailer might find Kubernetes cuts server costs by 30% while boosting sales through smarter agents, making AI deployment ROI crystal clear.
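The cost-benefit comparison reduces to simple arithmetic. The sketch below uses invented figures: a cloud bill that drops 30% (matching the retailer example), some saved developer hours, a monthly platform cost, and a one-off migration cost. Every number is an assumption to be replaced with your own.

```python
def monthly_net_benefit(bill_before, bill_after, platform_cost,
                        dev_hours_saved, hourly_rate):
    """Cloud savings plus saved developer time, minus platform overhead."""
    cloud_savings = bill_before - bill_after
    time_savings = dev_hours_saved * hourly_rate
    return cloud_savings + time_savings - platform_cost

def payback_months(migration_cost, net_benefit):
    """Months until the one-off migration cost is recovered."""
    if net_benefit <= 0:
        return None  # never pays back at this rate
    return migration_cost / net_benefit

# Invented figures for illustration only.
net = monthly_net_benefit(
    bill_before=10_000,   # monthly cloud bill before Kubernetes
    bill_after=7_000,     # 30% lower after adoption
    platform_cost=1_200,  # cluster fees and tooling per month
    dev_hours_saved=20,
    hourly_rate=80,
)
months = payback_months(migration_cost=17_000, net_benefit=net)

print(net)     # 3400
print(months)  # 5.0
```

The calculation deliberately leaves out revenue effects such as higher sales from smarter agents, since those are harder to attribute; adding them would only shorten the payback period.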

Long-Term Value for Business Growth

Kubernetes isn’t just a tech choice; it’s a growth engine. Streamlining AI orchestration lets you launch new agents faster—think adding a predictive maintenance bot to your factory line. Over time, this agility compounds, helping you outpace competitors. Kubernetes’ portability means you’re never locked into one cloud, keeping your options open as your business scales.

Building a Roadmap for Scalable AI Innovation

To stay ahead, plan big but start small. Begin by deploying a single agent on Kubernetes, measure its impact, then expand. Your roadmap might include multi-cluster setups for global reach or experimenting with serverless Kubernetes for cost savings. Set milestones, like deploying five new agents in a year, and watch your AI innovation scale.