Context Engines with RAG & Knowledge Graphs

- Retrieval-Augmented Generation (RAG)

When an agent needs background, it doesn’t just rely on keywords. It crafts a semantic query – “show me past incidents against this IP range” or “what vulnerabilities are associated with this asset tag” – and pulls back only the most relevant snippets from the archive or threat feeds.

- Knowledge Graphs

Relationships matter when it comes to logs. By modelling entities (users, hosts, applications) and their links (logins, data flows, trust relationships), agents can infer that jumping from a low-value workstation to a domain controller is riskier than a local file edit.

- Hybrid Lookups

Vector embeddings help surface semantically related documents (e.g., “RDP brute-force” incidents). At the same time, graph traversals reveal a chain of compromise (e.g., “user downloaded malware –> service ticket created –> lateral move to DB server”).

- Explainable Insights

Instead of “alert: high severity”, the agent returns context-rich notes: “This alert matches three past incidents on the same host, involved a recently published CVE, and follows an email delivery from a flagged IP.” That level of detail lets analysts trust and act confidently.
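To make the hybrid lookup idea concrete, here is a minimal sketch that pairs a cosine-similarity search over embeddings with a breadth-first graph traversal. The incident names, the three-dimensional vectors, and the graph edges are all invented for illustration; a real deployment would use an embedding model and a graph database rather than in-memory dictionaries.

```python
import math
from collections import deque

# Hypothetical toy data: embeddings and edges are made up for this sketch.
INCIDENT_VECTORS = {
    "INC-1 RDP brute-force on host-a": [0.9, 0.1, 0.0],
    "INC-2 phishing email campaign": [0.1, 0.9, 0.1],
    "INC-3 RDP password spraying": [0.8, 0.2, 0.1],
}

GRAPH = {
    "user:alice": ["file:malware.exe"],
    "file:malware.exe": ["ticket:svc-1"],
    "ticket:svc-1": ["host:db-server"],
    "host:db-server": [],
}


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec, k=2):
    """Return the k incident names most similar to the query vector."""
    ranked = sorted(
        INCIDENT_VECTORS.items(),
        key=lambda item: cosine(query_vec, item[1]),
        reverse=True,
    )
    return [name for name, _ in ranked[:k]]


def find_path(start, goal):
    """Breadth-first search for the shortest chain from start to goal."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for nxt in GRAPH.get(path[-1], []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None  # no chain of compromise found
```

With this toy data, `top_k([1.0, 0.0, 0.0])` surfaces the two RDP incidents, and `find_path("user:alice", "host:db-server")` returns the four-step chain from the user to the DB server.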

Autonomous Actions under Human-Defined Guardrails

- Decision Proposals, Not Blind Execution

Each sub-agent publishes its recommended action on the centralised event bus, whether that is isolating a machine, enriching a ticket with IOC data, or escalating to a manager.

- Policy Engine Checkpoints

Before anything changes on the network, recommendations run through the custom ruleset: “Only isolate endpoints tagged as non-production,” “Automatically block IPs if they’ve failed five MFA attempts in 10 minutes,” or “Notify me before shutting down mail flow.”

- Automated Enforcement

Once approved, actions ripple back out to EDR platforms for host quarantine, firewalls for IP blocks, or ticketing systems for work orders, without manual clicks.

- Audit Trails and Rollback

Every decision, approval, and enforcement step logs a snapshot of inputs, policy evaluation, and outcomes. If an action turns out to be overly aggressive, it can be rolled back, or the guardrails can be modified for the next time.

- Outcome Focus

By codifying the risk appetite in policy rules, we let agents move at machine speed for routine playbooks, while preserving analyst oversight for high-impact changes.
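As a rough illustration of a policy checkpoint, the sketch below encodes the three example rules quoted above. The proposal fields (`action`, `tags`, `mfa_threshold_hit`), the verdict strings, and the class name are hypothetical, and the MFA rule is implemented as a simple sliding-window counter that assumes timestamps arrive in non-decreasing order.

```python
from collections import defaultdict, deque

WINDOW_SECONDS = 10 * 60  # "10 minutes" from the example rule
MAX_FAILURES = 5          # "five MFA attempts" from the example rule


class MfaFailureTracker:
    """Counts MFA failures per IP inside a sliding time window."""

    def __init__(self):
        self._failures = defaultdict(deque)  # ip -> recent failure timestamps

    def record_failure(self, ip, ts):
        """Record a failure at time ts (seconds); True once the threshold is hit."""
        window = self._failures[ip]
        window.append(ts)
        # Drop failures that have aged out of the window.
        while window and ts - window[0] > WINDOW_SECONDS:
            window.popleft()
        return len(window) >= MAX_FAILURES


def evaluate(proposal):
    """Return (verdict, reason) for a proposed action (hypothetical schema)."""
    action = proposal["action"]
    if action == "isolate_endpoint":
        if "non-production" in proposal.get("tags", []):
            return "auto_approve", "endpoint is tagged non-production"
        return "needs_human", "endpoint is not tagged non-production"
    if action == "block_ip":
        if proposal.get("mfa_threshold_hit"):
            return "auto_approve", "five MFA failures within 10 minutes"
        return "needs_human", "MFA failure threshold not met"
    if action == "shutdown_mail_flow":
        return "needs_human", "owner must be notified before mail flow stops"
    # Anything without an explicit rule falls back to a human decision.
    return "needs_human", "no matching rule"
```

Defaulting unknown actions to `needs_human` is a deliberate choice in this sketch: the agent only moves at machine speed where a rule explicitly allows it.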

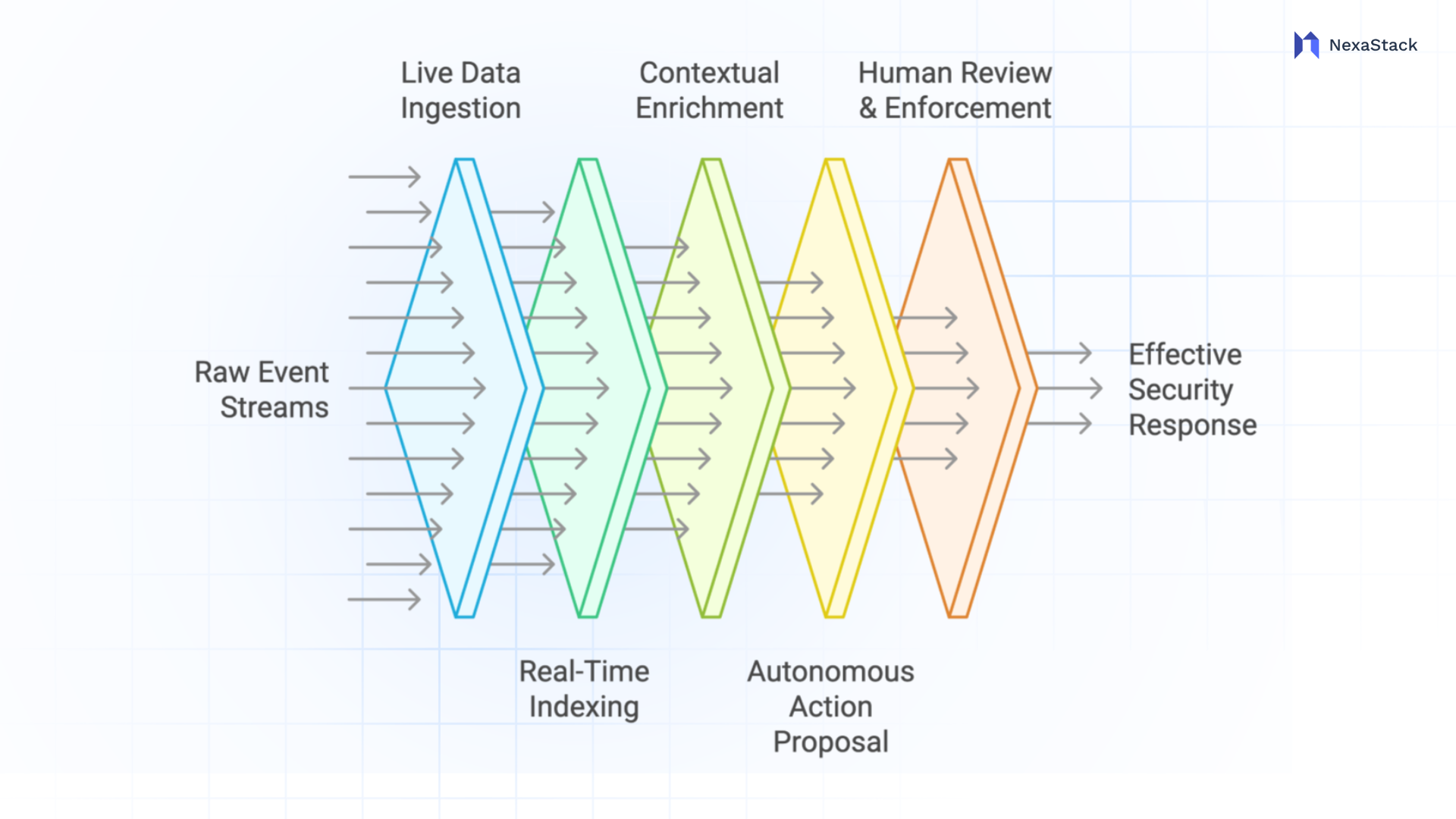

Powering Agents with Context

Building a modern, responsive SOC around autonomous agents hinges on three essential capabilities. Each pillar reinforces the others, transforming raw event streams into targeted actions and insights, without drowning the team in noise.

Figure 2: Powering Agents with Context

Context Engines with Contextual Lookups & Relationship Mapping

- Rich Background on Every Alert

Raw logs alone don’t tell the whole story. Pulling in past incident notes, vulnerability scan results, and third-party threat feeds gives every alert the detail it needs, like who owns this server, which apps run on it, and whether it’s ever been a target.

- Entity Relationships

Rather than evaluating each event in isolation, the system maps connections – user to device, device to network zone, zone to critical business application. Logging in to a test machine might be routine, but that same behaviour on a finance server jumps to the top of the queue.

- On-Demand Historical Lookups

When a suspicious pattern emerges, agents perform quick searches against the archive. “Show me any similar events in the past six months” or “List all alerts involving this external IP” become one-click investigations.

- Trusted Explanations

Instead of cryptic scores or “high/medium/low” labels, the system enriches each notification with a concise rationale: “This alert mirrors three past incidents tied to CVE-xxxx-xxxx on the same subnet, and the source IP appeared in last week’s threat feed.” That clarity builds confidence and speeds up the decision-making process.

Continuous Development & Adaptation – RL in Cyber Ranges, Analyst Feedback Loops, Neurosymbolic Safety

Figure 3: Continuous Development & Adaptation

Keeping a SOC agent sharp over time means more than just “set it and forget it.” We need a structured cycle of practice, review, and guardrails. Here’s how each piece fits together:

Simulated Attack Drills in a Cyber Range

- Realistic Replay

Instead of waiting for a real breach, we can build a mirror of our network – complete with representative logs, traffic, and system configurations – and inject attack scripts (phishing, lateral movement, data exfiltration).

- Policy Tuning via Trial and Error

Agents “play” against these scenarios, trying detection rules and containment steps. A reinforcement learning (RL) framework rewards paths that catch malicious behaviour quickly and penalises noisy or overzealous responses. Over many iterations, the system learns which patterns reliably indicate compromise and which ones trigger false alarms.

- Safe Environment

Because everything happens in a sandbox, missteps don’t affect production systems. We can ramp up attack intensity, explore novel tactics, and discover blind spots without risking uptime or data loss.
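The reward shaping described above can be sketched roughly as follows. This is a deliberately simplified, bandit-style running average and not a full RL framework; the `EpisodeResult` fields, the penalty weights, and the one-hour cap are assumptions chosen only to show the shape of the idea.

```python
from dataclasses import dataclass


@dataclass
class EpisodeResult:
    """Outcome of one simulated attack run (hypothetical fields)."""
    detected: bool
    seconds_to_detect: float
    false_alarms: int
    hosts_isolated_unnecessarily: int


def reward(result, max_seconds=3600.0):
    """Reward fast detection; penalise noise and overzealous containment."""
    if not result.detected:
        score = -1.0
    else:
        # 1.0 for instant detection, falling linearly to 0.0 at max_seconds.
        score = 1.0 - min(result.seconds_to_detect, max_seconds) / max_seconds
    score -= 0.1 * result.false_alarms                  # assumed noise penalty
    score -= 0.5 * result.hosts_isolated_unnecessarily  # assumed overreach penalty
    return score


class PolicyValues:
    """Running average reward per candidate detection policy."""

    def __init__(self):
        self.counts = {}
        self.values = {}

    def update(self, policy, r):
        n = self.counts.get(policy, 0) + 1
        old = self.values.get(policy, 0.0)
        self.counts[policy] = n
        self.values[policy] = old + (r - old) / n  # incremental mean

    def best(self):
        """Policy with the highest average reward so far."""
        return max(self.values, key=self.values.get)
```

After enough simulated episodes, `best()` points at the policy that has balanced speed against noise most effectively under these weights; changing the weights changes what the agents learn to prefer.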

Analyst Feedback and Continuous Refinement

- Every Action Gets Reviewed

After a simulation or a live incident, agents present their play-by-play decisions: why they flagged an event, why they suggested isolating a host, and so on. Analysts rate each decision (“helpful,” “too aggressive,” or “missed context”).

- Closed-Loop Learning

These ratings are then fed back into the agent’s “experience log,” where they adjust confidence thresholds, reorder triage steps, or alter hunt queries. Over time, common misfires are pruned away, and high-value detections get boosted.

- Empowering the Security Team

Rather than treating human input as an afterthought, the platform makes it central – so our analysts see that their expertise directly shapes future behavior. As trust grows, we can safely expand the scope of autonomous actions.
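One way the feedback loop could adjust confidence thresholds is sketched below. The three rating labels come from the text; the step sizes, bounds, default threshold, and rule names are assumptions for illustration only.

```python
# Assumed step sizes: how far one analyst rating moves a rule's threshold.
ADJUSTMENTS = {
    "helpful": -0.02,        # fire this detection slightly more readily
    "too aggressive": 0.05,  # demand more confidence before acting
    "missed context": 0.0,   # threshold unchanged; enrichment needs review
}


def apply_feedback(thresholds, rule, rating, lo=0.5, hi=0.99):
    """Update the confidence threshold for one rule from an analyst rating."""
    if rating not in ADJUSTMENTS:
        raise ValueError(f"unknown rating: {rating!r}")
    current = thresholds.get(rule, 0.8)  # assumed default threshold
    updated = min(hi, max(lo, current + ADJUSTMENTS[rating]))
    thresholds[rule] = round(updated, 4)
    return thresholds[rule]
```

Clamping to `lo` and `hi` keeps a run of similar ratings from silencing a rule entirely or making it fire on everything.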

Neurosymbolic Oversight for Policy Compliance

Mixing Neural Flexibility with Rule-Based Certainty

Pure machine-learning models can adapt quickly, but sometimes drift into unpredictable territory. By pairing them with a symbolic rules engine and hard constraints written by security architects, we ensure that key policies are never breached.

Guardrail Enforcement

Before any automated step executes, a lightweight rules checker verifies it against the risk model (“Never turn off production servers without multi-party approval,” “Only block IP ranges matched by three independent indicators”). If a proposed action fails these checks, it’s flagged for manual review.

Transparent Reasoning

When an action is blocked or allowed, the system logs the neural decision rationale and the symbolic rule evaluation. This dual record makes audits and post-incident forensics straightforward, keeping regulators and executives confident in our controls.
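A lightweight rules checker with a dual record might look like the sketch below. The two constraints are the ones quoted above; the action schema, the reading of "multi-party" as at least two distinct approvers, and the record field names are assumptions.

```python
def check_guardrails(action):
    """Return the list of hard-constraint violations for a proposed action."""
    violations = []
    if action["type"] == "shutdown_server" and action.get("environment") == "production":
        # Assumption: "multi-party" means at least two distinct approvers.
        if len(set(action.get("approvers", []))) < 2:
            violations.append("production shutdown requires multi-party approval")
    if action["type"] == "block_ip_range":
        if len(set(action.get("indicators", []))) < 3:
            violations.append("IP range block needs three independent indicators")
    return violations


def decide(action, model_rationale):
    """Build the dual record: neural rationale plus symbolic rule evaluation."""
    violations = check_guardrails(action)
    return {
        "action": action,
        "neural_rationale": model_rationale,
        "symbolic_violations": violations,
        "outcome": "manual_review" if violations else "allowed",
    }
```

Because the record carries both the model's stated reason and the rule evaluation, an auditor can see why the model wanted the action and why the rules allowed it or sent it to review.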

Multi-Agent Collaboration – orchestrated pipelines, blackboards, peer-to-peer dialogues

When we break a complex security workflow into focused “micro-teams” of software agents, their ability to talk to one another becomes critical. Rather than each agent working in isolation, a collaborative setup lets them divide tasks, share discoveries, and build on each other’s work, just like a well-oiled shift handoff in a human SOC, but at machine speed.

Context Management – enrichment, asset/user metadata, state/memory

Gathering logs and events is only the first step. To turn raw data into meaningful signals, we need to layer on context from multiple sources and manage it so agents (and analysts) can easily consume it. Here’s how a robust ingestion and context framework comes together:

Normalization & Filtering at Ingest

- Unified Schema

As logs stream in from firewalls, servers, endpoints, cloud services, or mail systems, a normalisation engine translates different formats (CEF, JSON, Syslog) into a standard structure. This lets downstream processes treat every event the same way.

- Noise Suppression

Early in the pipeline, we eliminate low-value chatter: routine health checks, heartbeat messages, or verbose debugging logs. By filtering out known benign patterns, we can lighten the load for both agents and analysts.
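The ingest stage could be sketched along these lines. The unified schema (`source`, `event`, `severity`, `raw`), the JSON field names, and the noise markers are assumptions; the CEF parser reads only the pipe-delimited header and, for brevity, ignores escaped pipes and the extension fields.

```python
import json

# Assumed markers for low-value chatter; a real list would be curated.
NOISE_MARKERS = ("heartbeat", "health check", "healthcheck")


def parse_cef(line):
    """Parse the CEF header: CEF:Version|Vendor|Product|DeviceVersion|SignatureID|Name|Severity|Extension."""
    parts = line.split("|", 7)
    if len(parts) < 7:
        raise ValueError("not a complete CEF header")
    return {
        "source": f"{parts[1]} {parts[2]}",
        "event": parts[5],
        "severity": parts[6],
        "raw": line,
    }


def parse_json(line):
    """Map a JSON log line onto the unified schema (field names are assumed)."""
    data = json.loads(line)
    return {
        "source": data.get("source", "unknown"),
        "event": data.get("message", ""),
        "severity": str(data.get("severity", "unknown")),
        "raw": line,
    }


def normalise(line):
    """Detect the format of one log line and return a unified event dict."""
    line = line.strip()
    if line.startswith("CEF:"):
        return parse_cef(line)
    if line.startswith("{"):
        return parse_json(line)
    # Fallback: treat anything else as a plain syslog message.
    return {"source": "syslog", "event": line, "severity": "unknown", "raw": line}


def is_noise(event):
    """True for events that match a known benign pattern."""
    text = event["event"].lower()
    return any(marker in text for marker in NOISE_MARKERS)


def ingest(lines):
    """Normalise every line, then drop known benign chatter."""
    return [event for event in map(normalise, lines) if not is_noise(event)]
```

Filtering after normalisation means the noise rules only have to understand one schema, whatever format the log arrived in.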

Enrichment Layers

Before an event ever hits our analytics engines or agents, enrichment services append extra fields that bring it to life:

- Asset Metadata

Each IP address or hostname is tagged with details like owner team, business function, criticality level, and geographic region. A login on a production database in London carries more weight than one on a test VM in Singapore.

- User Identity & Role

Events inherit user attributes pulled from our directory: department, seniority, privileged access rights, recent password changes, and known anomalies (e.g., “this account was flagged for unusual downloads last week”).

- Vulnerability Scores

By integrating with our vulnerability scanner, we know which hosts harbour unpatched CVEs and how severe each one is. Any alert involving a high-severity vulnerability immediately jumps up the priority list.

- Geolocation & Network Context

IPs map to countries, service providers, or internal network segments. A login from an overseas datacenter might be routine, but one from a public coffee shop’s Wi-Fi warrants a closer look.

- Threat Intelligence Feeds

Real-time feeds inject reputation data – known phishing domains, malicious IPs, compromised URLs – so events referencing these indicators carry automatic risk tags.
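The enrichment layers above could be composed roughly as in this sketch. The lookup tables stand in for an asset inventory, a network map, and a threat feed; every address, team name, and tag string here is invented for illustration.

```python
import ipaddress

# Hypothetical lookup tables standing in for real inventory and feed services.
ASSETS = {
    "10.0.5.20": {"owner": "payments-team", "criticality": "high", "region": "London"},
}
INTERNAL_SEGMENTS = {"10.0.5.0/24": "prod-db"}
MALICIOUS_IPS = {"203.0.113.7"}


def network_segment(ip):
    """Map an IP to a named internal segment, or 'external' if none matches."""
    addr = ipaddress.ip_address(ip)
    for cidr, name in INTERNAL_SEGMENTS.items():
        if addr in ipaddress.ip_network(cidr):
            return name
    return "external"


def enrich(event):
    """Return a copy of the event with asset, network, and threat-intel fields."""
    enriched = dict(event)
    enriched["asset"] = ASSETS.get(event["dest_ip"], {})
    enriched["src_segment"] = network_segment(event["src_ip"])
    tags = []
    if event["src_ip"] in MALICIOUS_IPS:
        tags.append("threat-intel:malicious-ip")
    if enriched["asset"].get("criticality") == "high":
        tags.append("critical-asset")
    enriched["risk_tags"] = tags
    return enriched
```

Returning a copy rather than mutating the input keeps the raw event intact for later audit.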

Challenges & Guardrails

Creating trusted automated workflows means incorporating visibility, safety checks, and respect for data rules from day one.

Explainability at Every Step

Instead of hidden black-box decisions, an agent's action should carry a concise “why” note. For instance, when the system blocks an IP, it should record the triggering pattern (e.g., “Five failed logins in 60 seconds”), include the assets involved, and link back to the raw events. This level of detail lets us trace decisions, answer questions from auditors, and fine-tune thresholds without reverse-engineering a model.

Guarding Against Malicious Inputs

Automated agents often rely on text prompts or configuration snippets. If an attacker injects a crafted input (think of fields like 'username' containing control sequences), we risk unintended behaviour. We can defend against this by sanitising all incoming data: stripping out shell metacharacters, enforcing strict type checks, and rejecting anything that doesn’t match an expected pattern. On top of that, we can run adversarial tests periodically, feeding the agents slightly tweaked inputs to ensure they don’t stray outside permitted actions.
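A minimal sketch of the type-check and allowlist steps, using a username field as the example. The permitted character set and the 64-character limit are assumptions; the sketch rejects non-conforming values outright rather than trying to strip them into shape.

```python
import re

# Assumed allowlist: letters, digits, dot, underscore, hyphen; 1 to 64 characters.
USERNAME_RE = re.compile(r"[A-Za-z0-9._-]{1,64}")


def validate_username(value):
    """Return the value unchanged if it is a well-formed username, else raise."""
    if not isinstance(value, str):
        raise TypeError("username must be a string")
    if not USERNAME_RE.fullmatch(value):
        # Rejects shell metacharacters, whitespace, and control sequences alike.
        raise ValueError("username does not match the expected pattern")
    return value
```

An allowlist tends to be safer than a denylist here, because anything the pattern does not explicitly permit, including an escape sequence nobody anticipated, is refused by default.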

Data Governance and Compliance

When we automatically collect logs, user profiles, and threat feeds, we need clear rules about what gets stored, where, and for how long. Encrypt all sensitive information and PII in transit and at rest, tag records with retention labels like “Archived for 90 days,” and maintain an immutable audit trail of who accessed what and when. By codifying data-handling policies up front, we’ll meet requirements like GDPR’s right to be forgotten or industry-specific controls without last-minute firefighting.
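Retention labels can be made machine-checkable with very little code. In the sketch below, the label names and periods are hypothetical; only the 90-day figure echoes the example above.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention labels mapped to a number of days.
RETENTION_DAYS = {"archive-90d": 90, "audit-365d": 365}


def expires_at(stored_at, label):
    """Timestamp after which a record with this label should be purged."""
    return stored_at + timedelta(days=RETENTION_DAYS[label])


def is_expired(stored_at, label, now):
    """True once the retention period for the record has elapsed."""
    return now >= expires_at(stored_at, label)
```

For example, a record stored on 1 January 2024 (UTC) under `archive-90d` expires on 31 March 2024, so a scheduled purge job can compare `is_expired(stored_at, label, datetime.now(timezone.utc))` for each record.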

The Road Ahead

Even as agentic SOCs begin to reshape security operations, several frontiers promise to make them more innovative, safer, and more collaborative.

- Federated Knowledge-Sharing

Instead of pooling raw logs into one giant repository, federated systems let organisations run joint analytics on shared indicators, like threat signatures or anonymised attack patterns, without exposing their internal data. We’re prototyping a lightweight protocol that exchanges only cryptographically protected summaries, so partners learn from each other’s experiences while keeping their environments private.

- Multi-Modal Risk Signals

Today’s alerts often rely on single data types like network packets or system logs. Tomorrow, we’ll blend code commit histories, configuration changes, user clickstream patterns, and physical access logs into a unified risk score. Early trials show that coupling a recent software deployment with a jump in admin console logins can flag high-probability incidents that siloed systems miss.

- Mathematical Policy Verification

Guardrails are only as strong as their enforcement. We’re exploring formal methods inspired by software model checking that let us prove certain classes of automated actions can never violate critical policies. For example, we can guarantee that an “isolate host” command always follows a successful authentication check, or that data-deletion steps require a multi-party approval signature. Because these proofs run offline, they add no latency in production while still providing iron-clad safety assurances.

- Adaptive Collaboration Frameworks

As agents become more specialised, we plan to introduce dynamic team structures: “on-call” agents that handle urgent alerts, “deep-dive” experts for forensic analysis, and “maintenance” bots that tune detection rules. Based on current workload and threat priorities, a lightweight scheduler would assemble these micro-teams on the fly, ensuring the right skills converge on the correct problems.

Figure 1: SOC Tipping Point
