Solution Approach

Real-Time Inspection

- Use optimized, quantized vision models for defect classification under tight latency constraints.
- Use multi-angle or multi-spectral imaging to improve robustness.
- Employ adaptive lighting, reflection control, and optical filters (as supported by NexaStack’s customizable visual pipelines).

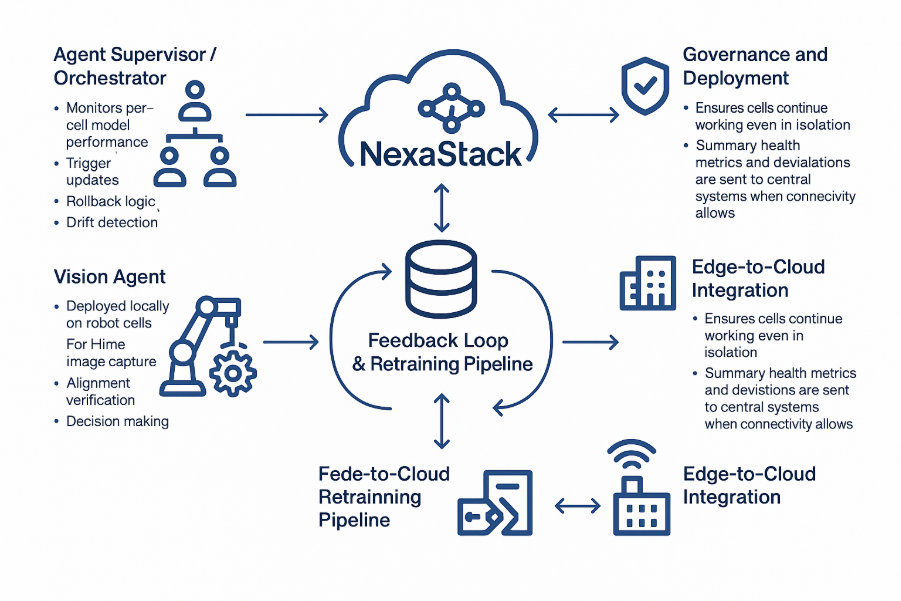
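The two ideas above can be combined in a single per-part decision: a tight latency budget and several camera angles or spectral bands. The sketch below assumes each view's quantized model returns a defect probability and its own inference latency. `ViewResult`, `fuse_views`, the 0.5 threshold and the 50 ms budget are hypothetical illustrations, not NexaStack APIs. The sketch uses conservative max-fusion: if any view that answered within the budget flags a defect, the part is rejected.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class ViewResult:
    """Output for one camera angle or spectral band (hypothetical structure)."""
    view_id: str
    defect_prob: float  # classifier score in [0, 1]
    latency_ms: float   # inference time for this view


def fuse_views(results: Iterable[ViewResult],
               threshold: float = 0.5,
               budget_ms: float = 50.0) -> str:
    """Combine per-view scores into one accept/reject decision.

    Views that miss the latency budget are ignored. If no view arrives in time,
    return "fallback" so the robot controller can apply its safe default.
    """
    on_time = [r for r in results if r.latency_ms <= budget_ms]
    if not on_time:
        return "fallback"
    # Max-fusion: the most suspicious on-time view decides, which favors
    # catching defects over avoiding false rejects.
    worst = max(r.defect_prob for r in on_time)
    return "reject" if worst >= threshold else "accept"


views = [
    ViewResult("top", 0.12, 18.0),
    ViewResult("side_nir", 0.71, 27.5),
    ViewResult("oblique", 0.95, 64.0),  # arrived too late, so it is ignored
]
print(fuse_views(views))  # reject
```

Averaging the scores instead of taking the maximum would reduce false rejects, at the cost of more escapes. The right rule depends on how expensive a missed defect is compared with a scrapped good part.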

Model Deployment & Orchestration

- Use NexaStack’s unified inference platform to deploy model versions across edge and cloud from a single pane of glass.
- Use blue/green or canary rollout strategies to limit the impact of a degraded model.
- Track model drift metrics, confidence histograms, and batch performance to trigger retraining.
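One common way to turn confidence histograms into a retraining trigger is the Population Stability Index (PSI). PSI compares a baseline histogram of model confidences against a recent one. The sketch below is a swapped-in standard technique, not NexaStack's drift metric. The function names are hypothetical, and the 0.25 threshold is a widely cited rule of thumb rather than a product setting.

```python
import math
from typing import List, Sequence


def to_histogram(confidences: Sequence[float], bins: int = 10) -> List[float]:
    """Bucket confidence scores in [0, 1] into equal-width bins; return proportions."""
    if not confidences:
        raise ValueError("need at least one confidence score")
    counts = [0] * bins
    for c in confidences:
        # Clamp so that 1.0 lands in the last bin and stray negatives in the first.
        idx = min(max(int(c * bins), 0), bins - 1)
        counts[idx] += 1
    return [n / len(confidences) for n in counts]


def psi(expected: Sequence[float], actual: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions."""
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total


def needs_retraining(baseline: Sequence[float], recent: Sequence[float],
                     threshold: float = 0.25) -> bool:
    """Flag drift when PSI exceeds the (assumed) rule-of-thumb threshold."""
    return psi(to_histogram(baseline), to_histogram(recent)) > threshold


baseline = [0.92, 0.95, 0.97, 0.91, 0.94] * 20  # confidences at commissioning
recent = [0.55, 0.62, 0.91, 0.58, 0.66] * 20    # confidences after a lighting change
print(needs_retraining(baseline, recent))  # True
```

A falling confidence distribution does not prove accuracy has dropped. It does indicate that the inputs no longer resemble the training data, which is a reasonable point to pull labeled samples for review.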

Feedback & Retraining Loop

- Collect false positives and false negatives together with their correct labels.
- Aggregate cell-level data to train new model versions.
- Test updates in controlled environments before rolling them out fleet-wide.
- Maintain version history, rollback capabilities, and audit logs.
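The collection step can be pictured as a queue that keeps only the misclassified parts, grouped by cell, for the next training run. This is a minimal sketch. `Feedback` and `RetrainingQueue` are hypothetical names, and the sketch assumes each reviewed image carries the model's decision and a ground-truth label.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class Feedback:
    image_id: str
    cell_id: str
    predicted_defect: bool  # what the vision model decided
    labeled_defect: bool    # ground truth assigned during review


@dataclass
class RetrainingQueue:
    """Collects misclassified samples for the next training run (hypothetical)."""
    samples: List[Feedback] = field(default_factory=list)
    false_positives: int = 0
    false_negatives: int = 0

    def add(self, fb: Feedback) -> None:
        if fb.predicted_defect and not fb.labeled_defect:
            self.false_positives += 1  # good part was rejected
            self.samples.append(fb)
        elif fb.labeled_defect and not fb.predicted_defect:
            self.false_negatives += 1  # defect escaped inspection
            self.samples.append(fb)
        # Correct predictions are not queued in this sketch.

    def by_cell(self) -> Dict[str, List[Feedback]]:
        """Group queued samples per robot cell before aggregating them."""
        grouped: Dict[str, List[Feedback]] = defaultdict(list)
        for s in self.samples:
            grouped[s.cell_id].append(s)
        return dict(grouped)


queue = RetrainingQueue()
queue.add(Feedback("img-001", "cell-A", predicted_defect=True, labeled_defect=False))
queue.add(Feedback("img-002", "cell-B", predicted_defect=False, labeled_defect=True))
queue.add(Feedback("img-003", "cell-A", predicted_defect=True, labeled_defect=True))
print(queue.false_positives, queue.false_negatives)  # 1 1
print(sorted(queue.by_cell()))  # ['cell-A', 'cell-B']
```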

Integration with Robotic Controllers

- Use standard communication protocols and interfaces (e.g., OPC UA, ROS, PLC interfaces) to send accept/reject or stop commands to the robot.
- Provide fail-safe fallback control logic for when the vision agent is unavailable.
- Synchronize inspection logic with robot states and cycle timing.
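The fail-safe and cycle-timing points can be combined in one rule. The vision result must arrive within the time left in the robot cycle; otherwise the controller falls back to a predefined safe command. The sketch below covers only that decision. Writing the command over OPC UA or a PLC interface is out of scope. `Command`, `decide` and `SAFE_DEFAULT` are hypothetical. Choosing REJECT rather than STOP as the default is an assumption that depends on the cell.

```python
import concurrent.futures as cf
from enum import Enum
from typing import Any, Callable


class Command(Enum):
    ACCEPT = "accept"
    REJECT = "reject"
    STOP = "stop"


# Assumption: diverting the part is the safe default for this cell.
SAFE_DEFAULT = Command.REJECT


def decide(executor: cf.Executor,
           infer: Callable[[Any], bool],
           image: Any,
           cycle_budget_s: float) -> Command:
    """Return the command to send to the robot controller for one part.

    `infer` returns True when a defect is found. If it raises, or does not
    finish within the time left in the robot cycle, fall back to SAFE_DEFAULT
    so the line never waits on the vision agent.
    """
    future = executor.submit(infer, image)
    try:
        is_defect = future.result(timeout=cycle_budget_s)
    except cf.TimeoutError:
        # cancel() only stops a job that has not started yet; an inference
        # call that is already running keeps going in its worker thread.
        future.cancel()
        return SAFE_DEFAULT
    except Exception:
        # Model crash, camera error, and similar failures.
        return SAFE_DEFAULT
    return Command.REJECT if is_defect else Command.ACCEPT


with cf.ThreadPoolExecutor(max_workers=1) as pool:
    print(decide(pool, lambda img: False, "frame-42", 0.5))  # Command.ACCEPT
```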

Governance, Security & Compliance

- Role-based access control for model deployment, versioning, and agent control.
- Immutable audit trail for model updates, decisions, and overrides.
- Policy-as-code guards that block unsafe model deployments and behavior.
- End-to-end encryption of image data, control commands, and logs.
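Role-based access control and policy-as-code can meet in a single pre-deployment gate. The sketch below assumes roles, approval status and validation metrics are available as plain data. The role names, the `deploy:<target>` permission strings, the thresholds and `check_deployment` are all hypothetical illustrations rather than NexaStack's policy engine.

```python
from dataclasses import dataclass
from typing import Dict, List, Set

# Hypothetical role-to-permission mapping (RBAC).
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "ml_engineer": {"deploy:staging"},
    "quality_lead": {"deploy:staging", "deploy:production", "rollback"},
    "line_operator": {"rollback"},
}

# Hypothetical policy thresholds, kept as data so they can be versioned and reviewed.
POLICY: Dict[str, float] = {"min_recall": 0.95, "max_false_positive_rate": 0.05}


@dataclass(frozen=True)
class ModelCandidate:
    version: str
    approved: bool              # sign-off recorded by quality engineering
    recall: float               # on a held-out defect validation set
    false_positive_rate: float


def check_deployment(role: str, target: str, model: ModelCandidate,
                     policy: Dict[str, float] = POLICY) -> List[str]:
    """Return policy violations; an empty list means the deployment may proceed."""
    violations = []
    if f"deploy:{target}" not in ROLE_PERMISSIONS.get(role, set()):
        violations.append(f"role '{role}' cannot deploy to {target}")
    if target == "production" and not model.approved:
        violations.append(f"{model.version} is not approved for production")
    if model.recall < policy["min_recall"]:
        violations.append(f"recall {model.recall:.3f} below {policy['min_recall']}")
    if model.false_positive_rate > policy["max_false_positive_rate"]:
        violations.append(
            f"false positive rate {model.false_positive_rate:.3f} above "
            f"{policy['max_false_positive_rate']}")
    return violations


candidate = ModelCandidate("v7", approved=True, recall=0.97, false_positive_rate=0.03)
print(check_deployment("quality_lead", "production", candidate))  # []
print(check_deployment("ml_engineer", "production", candidate))
# ["role 'ml_engineer' cannot deploy to production"]
```

Returning every violation, rather than stopping at the first, gives the audit trail a complete record of why a deployment was blocked.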

Impact Areas

Model

- Models become adaptive and self-improving; false positive and false negative rates decrease over time.
- Models can be tuned dynamically per product variant, lighting condition, or environment.

Data

- Inspection logs, metrics, and misclassifications are centralized.
- Cross-cell insights enable batch-level quality improvements.

Workflow

- Robot inspection → automatic adaptive decision → feedback and retraining.
- Fewer manual QC checkpoints and higher throughput.

Results & Benefits (Projected / Achieved)

- 60% reduction in defect escapes (defects that make it past inspection).
- 40% lower rework time and cost by catching defects early.
- 25% higher throughput by avoiding downstream rejection stalls.
- Scalable deployment across 10+ factories with consistent policies and governance.
- Model drift detection and rollback safeguard quality consistency across environments.
- Audit-ready logs and traceability support compliance and internal quality mandates.

Lessons Learned & Best Practices

- Begin with a pilot cell and a narrow defect class before scaling across all variants.
- Ensure lighting consistency and calibration; vision models degrade sharply under lighting drift.
- Plan robust fallback logic (e.g., the robot continues with safe defaults) in case the vision model fails.
- Instrument observability from day one (drift metrics, confidence, failure logs).
- Establish a feedback loop between quality engineers and model developers to identify and curate edge cases.
- Use policy-driven governance so that only approved models can be deployed to production.
- Keep a rollback strategy for each cell in case a new model underperforms.
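A per-cell rollback strategy can be as simple as a stack of deployed versions plus an append-only log of who changed what. The sketch below is hypothetical: `CellModelRegistry` and its methods are illustrative names, not a NexaStack API. A production system would also need to persist this state and push the rolled-back model to the edge device.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional, Tuple

AuditEntry = Tuple[str, str, str, str, str]  # (timestamp, cell, action, detail, actor)


@dataclass
class CellModelRegistry:
    """Tracks which model version one robot cell runs (hypothetical sketch)."""
    cell_id: str
    _active: List[str] = field(default_factory=list)           # stack of deployed versions
    audit_log: List[AuditEntry] = field(default_factory=list)  # append-only history

    @property
    def active(self) -> Optional[str]:
        return self._active[-1] if self._active else None

    def deploy(self, version: str, actor: str) -> None:
        self._active.append(version)
        self._record("deploy", version, actor)

    def rollback(self, actor: str) -> str:
        """Revert this cell to its previously deployed version."""
        if len(self._active) < 2:
            raise RuntimeError(f"{self.cell_id}: no earlier version to roll back to")
        retired = self._active.pop()
        previous = self._active[-1]
        self._record("rollback", f"{retired} -> {previous}", actor)
        return previous

    def _record(self, action: str, detail: str, actor: str) -> None:
        ts = datetime.now(timezone.utc).isoformat()
        self.audit_log.append((ts, self.cell_id, action, detail, actor))


reg = CellModelRegistry("cell-07")
reg.deploy("v12", "quality_lead")
reg.deploy("v13", "quality_lead")
print(reg.rollback("line_operator"))         # v12
print([entry[2] for entry in reg.audit_log])  # ['deploy', 'deploy', 'rollback']
```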

Future Plans & Extensions

- Self-calibrating vision agents: agents learn lighting and angle corrections autonomously for each cell.
- Collaborative multi-robot inspection: multiple robot arms cross-check each other’s results.
- Digital twin simulations: simulate “what-if” defect injection to stress-test inspection models.
- Cross-factory benchmarking and transfer learning: use data from mature sites to bootstrap newer ones.
- Adaptive inspection policies: agents may dynamically adjust inspection sensitivity based on yield goals or defect trends.
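One way an agent could adjust inspection sensitivity is to re-pick its decision threshold on recently labeled parts whenever the recall target changes, for example after a rising defect trend. This is a standard threshold-selection technique swapped in for illustration, not a NexaStack feature. `threshold_for_recall` is a hypothetical name, and the sketch assumes higher scores mean "more likely defective".

```python
from typing import Sequence, Tuple


def threshold_for_recall(scores: Sequence[float], is_defect: Sequence[bool],
                         target_recall: float) -> Tuple[float, float]:
    """Pick the highest defect-score threshold that still meets target_recall.

    Parts with score >= threshold are rejected. Returns (threshold,
    false_positive_rate) so the cost of higher sensitivity is visible.
    """
    if not 0.0 < target_recall <= 1.0:
        raise ValueError("target_recall must be in (0, 1]")
    defect_scores = sorted((s for s, d in zip(scores, is_defect) if d), reverse=True)
    good_scores = [s for s, d in zip(scores, is_defect) if not d]
    if not defect_scores:
        raise ValueError("need at least one labeled defect")

    n = len(defect_scores)
    threshold = defect_scores[-1]
    for k, s in enumerate(defect_scores, start=1):
        # A threshold of s catches at least the k highest-scoring defects.
        if k / n >= target_recall:
            threshold = s
            break
    false_alarms = sum(1 for s in good_scores if s >= threshold)
    fpr = false_alarms / len(good_scores) if good_scores else 0.0
    return threshold, fpr


scores = [0.9, 0.2, 0.8, 0.4, 0.6, 0.3]
labels = [True, False, True, False, True, True]
print(threshold_for_recall(scores, labels, 0.75))  # (0.6, 0.0)
print(threshold_for_recall(scores, labels, 1.0))   # (0.3, 0.5)
```

The second call shows the trade-off an adaptive policy would weigh. Catching every defect in this small sample means also rejecting half of the good parts.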

Conclusion

By leveraging NexaStack Vision AI with agentic inference, robotics integrators can turn each robot cell into an intelligent, self-monitoring visual agent. This transforms quality assurance from a passive checkpoint into an integral, scalable, and governed layer of robotic operations. The result: fewer defects, lower costs, higher throughput, and consistent quality governance across factories.

Frequently Asked Questions (FAQs)

Discover how Edge Model Management enhances Vision AI quality assurance through continuous monitoring, optimization, and autonomous performance validation across edge environments.

What is Edge Model Management in Vision AI?

Edge Model Management enables deployment, monitoring, and optimization of Vision AI models directly at the edge, close to data sources such as cameras and sensors. This enables faster inference, lower latency, and continuous validation of model performance without relying on cloud-only infrastructure.

How does Edge Model Management improve Vision AI quality assurance?

By enabling on-device validation, version control, and continuous feedback loops, Edge Model Management ensures that Vision AI systems maintain consistent accuracy and reliability, even in changing environmental or lighting conditions.

What challenges does it solve for Vision AI deployment at scale?

Edge Model Management tackles challenges like inconsistent model performance, limited bandwidth for cloud updates, and lack of visibility into edge devices. It provides centralized control for distributed AI models with automated versioning, rollback, and performance auditing.

How does it ensure compliance and security in Vision AI systems?

Edge Model Management integrates with secure CI/CD workflows and enforces governance policies such as model provenance, access control, and audit trails. These controls help Vision AI deployments meet data protection and regulatory requirements such as GDPR and standards such as ISO/IEC 42001.

Which industries benefit most from Edge Model Management for Vision AI?

Industries such as manufacturing, robotics, automotive, and healthcare use Edge Model Management to validate visual AI performance in real time. This powers applications like defect detection, safety monitoring, and predictive maintenance directly at the edge.