The explosive adoption of Large Language Models (LLMs) has moved them from research projects to core components of enterprise applications. However, this shift into production presents a formidable challenge: how do we ensure that these powerful, non-deterministic systems operate safely, ethically, and in compliance with a growing web of regulations, including the EU AI Act, NIST AI RMF, and industry-specific guidelines?

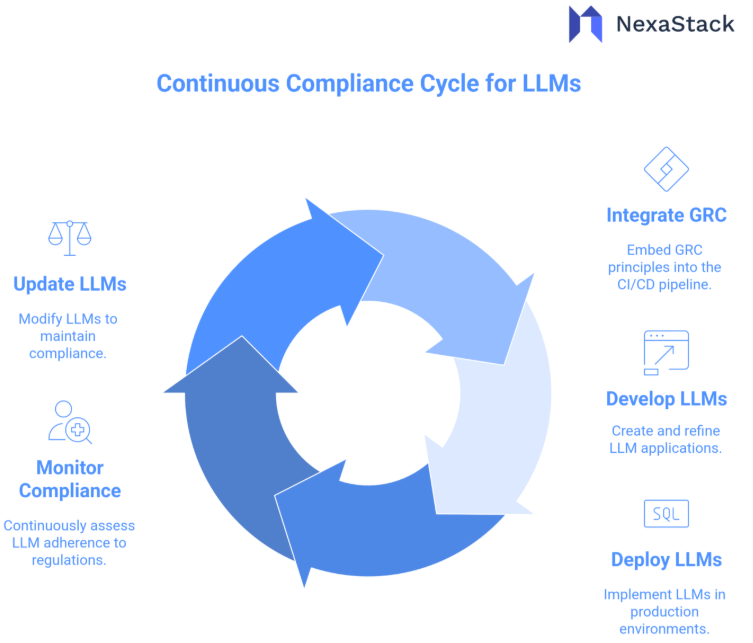

The answer lies in moving beyond one-off, pre-deployment audits and embracing a paradigm of Continuous Compliance. This means integrating Governance, Risk, and Compliance (GRC) directly into the heart of the development lifecycle—the CI/CD pipeline.

Why Point-in-Time Compliance Fails for LLMs

Traditional software compliance often involves a manual "check-the-box" exercise before a release. For LLMs, this approach is dangerously inadequate. An LLM's behavior isn't defined by its code alone; it emerges from its training data, fine-tuning, prompts, and countless user interactions. A model that passes a compliance test on Monday might generate harmful or biased output, or leak sensitive data, on Tuesday in response to a novel user prompt.

The dynamic and unpredictable nature of LLMs demands a dynamic and automated compliance strategy. This is where the modern CI/CD pipeline, augmented with new tools and practices, becomes the critical control plane.

The Pillars of a Compliant LLM CI/CD Pipeline

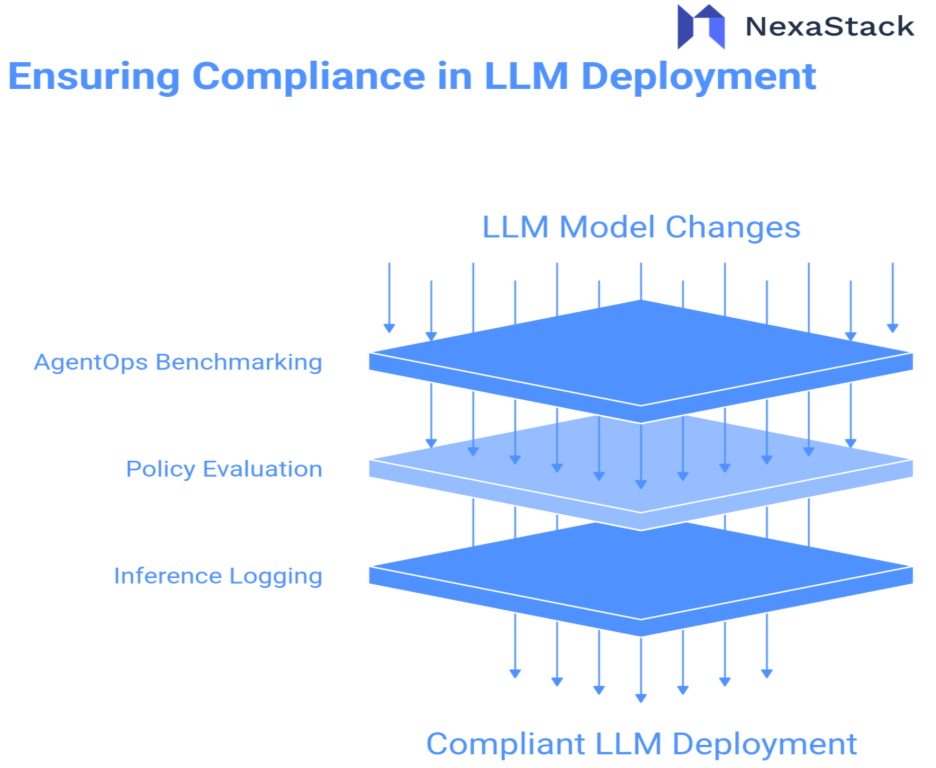

Building a pipeline for continuous compliance requires weaving three critical processes into the automated workflow: AgentOps, Policy-as-Code, and Inference Logging.

1. AgentOps: Benchmarking Before Deployment

AgentOps is an emerging discipline focused on the operational excellence of AI agents: systems where an LLM makes decisions and takes actions using tools (e.g., API calls, database queries). It provides the frameworks and tools to systematically test and evaluate LLM performance beyond simple accuracy.

How it integrates into CI/CD: Before any model or prompt change is deployed, the pipeline automatically runs a battery of evaluations against a curated benchmark dataset. These evaluations go beyond "is the answer correct?" and cover:

- Safety & Toxicity: Does the model generate harmful, biased, or inappropriate content when prompted with adversarial inputs?
- Jailbreaking: How resilient is the model against prompt injection attacks designed to bypass its safety guidelines?
- Tool Use Accuracy: For agentic systems, does the LLM correctly select the appropriate tool and provide the correct parameters?
- Instruction Following: Does the model adhere to complex, multi-step instructions?
- Contextual Relevance: Are the outputs grounded in the provided context, reducing hallucinations?

The GRC Benefit: These automated benchmarks act as a quality gate. A build that causes a significant regression in safety or reliability scores automatically fails, preventing non-compliant code from ever reaching the staging environment. This provides continuous assurance that the system adheres to ethical principles and risk thresholds defined by the organization.
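The quality gate described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference to any particular AgentOps tool: the metric names, the `MetricResult` type, and the 0.02 tolerance are all assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricResult:
    name: str
    baseline: float   # score from the last approved build (assumed to be stored somewhere)
    candidate: float  # score from the build under test
    higher_is_better: bool = True


def find_regressions(results, tolerance=0.02):
    """Return the names of metrics that got worse by more than `tolerance`."""
    regressions = []
    for r in results:
        # Normalise so that a positive delta always means "got worse".
        if r.higher_is_better:
            delta = r.baseline - r.candidate
        else:
            delta = r.candidate - r.baseline
        if delta > tolerance:
            regressions.append(r.name)
    return regressions


def gate_exit_code(results, tolerance=0.02):
    """Exit code for the CI step: 0 lets the build proceed, 1 fails it."""
    return 1 if find_regressions(results, tolerance) else 0
```

A CI step could call `gate_exit_code(...)` with the scores from the benchmark run and pass the result to `sys.exit`, so that a drop in, say, jailbreak resistance stops the build before staging. What counts as a "significant" regression is an organizational decision; the tolerance here is only a placeholder.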

2. Policy-as-Code: Codifying Rules into Automated Gates

What it is: Policy-as-Code is the practice of translating human-readable regulations and organizational rules into machine-executable code. Instead of a 100-page PDF compliance document, you have a set of .rego (Open Policy Agent) or other policy files that can be automatically enforced.

How it integrates into CI/CD: Policies are defined and version-controlled alongside application code. The pipeline integrates a policy evaluation engine at key gates:

- Pre-Deployment (Build Stage): The policy engine scans the code, model card, and data manifests to ensure they are compliant with the policy. Does the model use approved, licensed datasets? Are the right metadata and provenance tags present? Does it include the required disclaimer text in its system prompt?
- Pre-Deployment (Staging Stage): After the AgentOps benchmarks run, the policy engine evaluates the results. *"Policy: The model's toxicity score must be below 0.1. Result: Score is 0.12. -> FAIL."*
- Post-Deployment (Production): Policies can also be enforced in real-time via sidecars or proxies; however, the CI/CD integration ensures that only policy-compliant artifacts are deployed initially.

The GRC Benefit: This automates and demystifies compliance. It makes regulatory requirements tangible, testable, and transparent for developers. Auditors can review the policy code and the automated pass/fail logs, creating a clear, immutable record of due diligence.
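To make the idea concrete, here is a small Python stand-in for a policy engine. It is not Rego and not the Open Policy Agent API; it only illustrates the pattern of keeping rules as data and evaluating them against facts gathered by the pipeline. The policy IDs, field names, and the `evaluate` helper are hypothetical; the 0.1 toxicity threshold comes from the example above.

```python
import operator

# Hypothetical policy set; in practice this would live in a version-controlled file.
POLICIES = [
    {"id": "toxicity-max", "field": "toxicity_score", "op": "lt", "value": 0.1},
    {"id": "approved-datasets", "field": "unapproved_datasets", "op": "eq", "value": []},
    {"id": "disclaimer-present", "field": "system_prompt_has_disclaimer", "op": "eq", "value": True},
]

OPS = {"lt": operator.lt, "le": operator.le, "eq": operator.eq, "ge": operator.ge}


def evaluate(policies, facts):
    """Check each policy against `facts`; return (policy_id, passed, detail) tuples."""
    decisions = []
    for p in policies:
        if p["field"] not in facts:
            # Missing evidence counts as a failure, never as a silent pass.
            decisions.append((p["id"], False, "missing field " + p["field"]))
            continue
        actual = facts[p["field"]]
        passed = OPS[p["op"]](actual, p["value"])
        detail = f"{p['field']}={actual!r} must be {p['op']} {p['value']!r}"
        decisions.append((p["id"], passed, detail))
    return decisions


def is_compliant(decisions):
    """A build is compliant only if every policy passed."""
    return all(passed for _, passed, _ in decisions)
```

With a benchmark result of `toxicity_score=0.12`, the `toxicity-max` policy fails and `is_compliant` returns `False`. The list of decisions doubles as the pass/fail log an auditor would review.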

3. Inference Logs: The Fuel for Continuous Improvement and Auditing

Inference logging is the practice of capturing and storing the inputs (prompts) and outputs (completions) of every LLM interaction in production, along with crucial metadata (such as model version, timestamps, and user ID).
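As a rough sketch of what one such log entry might look like, the Python below builds a JSON line containing the fields named above. The field names and the choice of an HMAC-SHA256 keyed hash for pseudonymizing the user ID are assumptions for illustration; the right approach depends on the privacy regulations that apply.

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone


def pseudonymize(user_id, secret_key):
    """Keyed hash: the same user maps to the same token, but the raw ID is not stored."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def build_log_record(prompt, completion, model_version, user_id, secret_key, now=None):
    """Assemble one inference log entry as a JSON line."""
    now = now or datetime.now(timezone.utc)
    record = {
        "timestamp": now.isoformat(),
        "model_version": model_version,
        "user": pseudonymize(user_id, secret_key),
        "prompt": prompt,
        "completion": completion,
    }
    return json.dumps(record, sort_keys=True)
```

Note that prompts and completions can themselves contain personal data, so pseudonymizing the user ID alone may not be sufficient.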

While logging happens in production, it directly feeds back into the development cycle, closing the loop.

- Logging: All production inference is logged to a secure, potentially anonymized or pseudonymized data store that complies with relevant privacy regulations.
- Analysis: Automated scripts and monitoring tools continuously analyze these logs to detect drift, new adversarial prompt patterns, performance degradation, or emerging ethical issues.
- Feedback Loop: These findings are used to curate new test cases for the AgentOps benchmark suite. For example, if a new type of jailbreak prompt is discovered in production logs, it is immediately added to the benchmark to prevent regression in future model updates.
- Retraining/Updating: The logs of successful interactions can also be used to create fine-tuning datasets to improve model performance, creating a virtuous cycle.

The GRC Benefit: Inference logs are the ultimate audit trail. In the event of an incident, they provide full traceability to understand what happened, why it happened, and who was affected. They give auditors concrete evidence that the organization is actively monitoring its AI systems and using that data to continuously improve safety and compliance.
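The feedback loop from logs to benchmark can be sketched as follows. This is an illustrative simplification: the two regular expressions, the record layout (a dict with a `prompt` key), and the benchmark case format are hypothetical, and real detection would likely rely on classifiers and human review rather than pattern matching alone.

```python
import re

# Hypothetical detection patterns, for illustration only.
JAILBREAK_PATTERNS = [
    re.compile(r"ignore (all )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"pretend (you are|to be) .* without (any )?restrictions", re.IGNORECASE),
]


def find_suspect_prompts(log_records):
    """Yield prompts from log records that match a known jailbreak pattern."""
    for record in log_records:
        prompt = record["prompt"]
        if any(p.search(prompt) for p in JAILBREAK_PATTERNS):
            yield prompt


def extend_benchmark(benchmark, log_records):
    """Add newly seen suspect prompts as test cases; return how many were added."""
    known = {case["prompt"] for case in benchmark}
    added = 0
    for prompt in find_suspect_prompts(log_records):
        if prompt not in known:
            benchmark.append({"prompt": prompt, "category": "jailbreak", "expected": "refusal"})
            known.add(prompt)
            added += 1
    return added
```

Because `extend_benchmark` skips prompts that are already present, it can run on a schedule against fresh logs without bloating the suite with duplicates.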

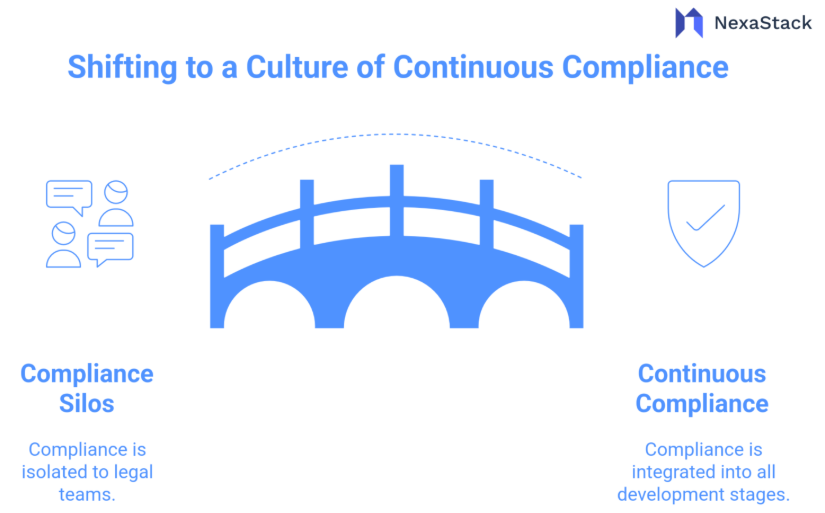

Building a Culture of Continuous Compliance

Implementing this technically is only half the battle. Success requires a cultural shift:

- Shared Responsibility: Compliance can't be just the legal team's problem. Developers, ML engineers, and ops teams must all understand the core principles of GRC.
- Shift-Left: Integrate compliance checks as early as possible in the development process. Identifying a vulnerability in a pull request is orders of magnitude cheaper than dealing with a public incident after launch.
- Iterate on Policies: Regulations and best practices are constantly evolving. Your Policy-as-Code files must be living documents that are regularly reviewed and updated by a cross-functional team.

Conclusion

For enterprises leveraging LLMs, compliance is no longer a checklist but a continuous, automated process. By architecting CI/CD pipelines with AgentOps for rigorous testing, Policy-as-Code for automated enforcement, and Inference Logging for closed-loop monitoring, organizations can build and deploy AI with confidence. This approach transforms GRC from a bottleneck into a powerful enabler of responsible innovation, allowing businesses to harness the power of LLMs at scale without sacrificing safety, ethics, or regulatory adherence. The future of AI governance is not manual; it is automated, continuous, and integrated.